RENDERMAN

Description

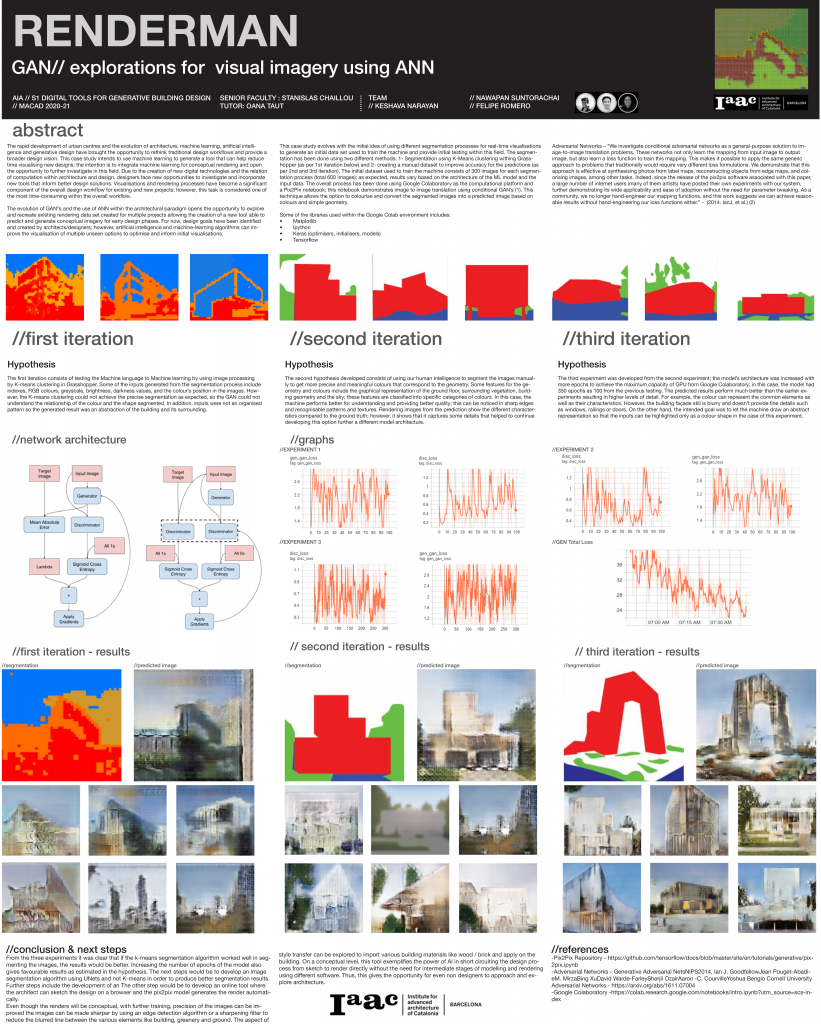

The rapid development of urban centers and the evolution of architecture, machine learning, artificial intelligence and generative design have created an opportunity to rethink traditional design workflows and broaden the design vision. This case study aims to use machine learning to build a tool that can help reduce the time spent visualizing new designs. The intention is to integrate machine learning into conceptual rendering and to open the way for further research in this field. With new digital technologies and the growing role of computation in architecture and design, designers have new opportunities to investigate and adopt tools that inform better design solutions. Visualization and rendering have become a significant part of the design workflow for both existing and new projects; however, rendering is considered one of the most time-consuming tasks in that workflow. The evolution of GANs (generative adversarial networks) and the use of ANNs (artificial neural networks) in architecture make it possible to learn from existing rendering datasets created for multiple projects. This enables a new tool that can predict and generate conceptual imagery for early design phases. So far, design goals have been identified and set by architects and designers; however, artificial intelligence and machine-learning algorithms can help visualize multiple unseen options and so optimize and inform initial visualizations. This case study started from the idea of applying different segmentation processes to real-time visualizations. The aim was to generate an initial dataset, train the model, and run first tests in this field. The segmentation was done with two methods: first, K-Means clustering within Grasshopper (1st iteration below); second, a manually created dataset to improve prediction accuracy (2nd and 3rd iterations).
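
The first segmentation method relied on K-Means clustering inside Grasshopper. A language-neutral illustration of what colour-based K-Means segmentation does is a short pure-Python sketch of Lloyd's algorithm on RGB pixels. Each pixel is assigned to the nearest of k cluster colours, each cluster colour is moved to the mean of its pixels, and the loop repeats until nothing moves. The functions `kmeans_colors` and `segment`, the seeded random initialisation and the iteration cap are illustrative assumptions. They are not the project's Grasshopper definition.

```python
import random


def _sq_dist(a, b):
    """Squared Euclidean distance between two RGB colours."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _assign(pixels, centroids):
    """Assignment step: index of the nearest centroid for every pixel."""
    return [min(range(len(centroids)), key=lambda j: _sq_dist(p, centroids[j]))
            for p in pixels]


def kmeans_colors(pixels, k, iterations=20, seed=0):
    """Cluster (r, g, b) pixels into k colours with Lloyd's K-Means algorithm.

    Returns (centroids, labels); labels[i] is the cluster index of pixels[i].
    Assumes the image contains at least k distinct colours.
    """
    rng = random.Random(seed)
    # Initialise the centroids with k distinct colours picked from the image.
    centroids = [tuple(map(float, c)) for c in rng.sample(sorted(set(pixels)), k)]
    for _ in range(iterations):
        labels = _assign(pixels, centroids)
        # Update step: move each centroid to the mean colour of its members.
        updated = []
        for j in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:
                updated.append(tuple(sum(ch) / len(members) for ch in zip(*members)))
            else:
                updated.append(centroids[j])  # leave an empty cluster where it was
        if updated == centroids:
            break  # converged: no centroid moved
        centroids = updated
    # Final assignment so the labels match the returned centroids.
    return centroids, _assign(pixels, centroids)


def segment(pixels, k):
    """Flat-colour segmentation: replace each pixel by its cluster's rounded mean colour."""
    centroids, labels = kmeans_colors(pixels, k)
    palette = [tuple(round(c) for c in centroid) for centroid in centroids]
    return [palette[lab] for lab in labels]


if __name__ == "__main__":
    # A tiny four-pixel "image": two reddish and two bluish pixels.
    tiny_image = [(255, 0, 0), (250, 5, 5), (0, 0, 255), (5, 5, 250)]
    print(segment(tiny_image, 2))
```

On a real rendering the pixel list would come from the image file, and k would be the number of material or element colours wanted in the segmented input.
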
The initial training dataset consists of 300 images for each segmentation process (600 images in total); as expected, results vary with the architecture of the ML model and the input data. The whole process was run on Google Colaboratory using a Pix2Pix notebook, which demonstrates image-to-image translation with conditional GANs (1). This technique makes it possible to colorize the segmented images and convert them into predicted images based on their colors and simple geometry.
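
The objective from the original Pix2Pix paper (Isola et al.) serves as a reference for what a conditional GAN of this kind optimizes. In it, $x$ is the input (segmented) image, $y$ the target rendering, $z$ random noise (supplied through dropout in the original design), $G$ the generator and $D$ the discriminator. The adversarial term pushes $G$ to produce images that $D$ cannot tell apart from real input/target pairs. The L1 term keeps the output close to the target pixel by pixel. The paper uses $\lambda = 100$; whether the project's notebook used the same weight is an assumption.

$$
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right]
$$

$$
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z) \rVert_1\right]
$$

$$
G^{*} = \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
$$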

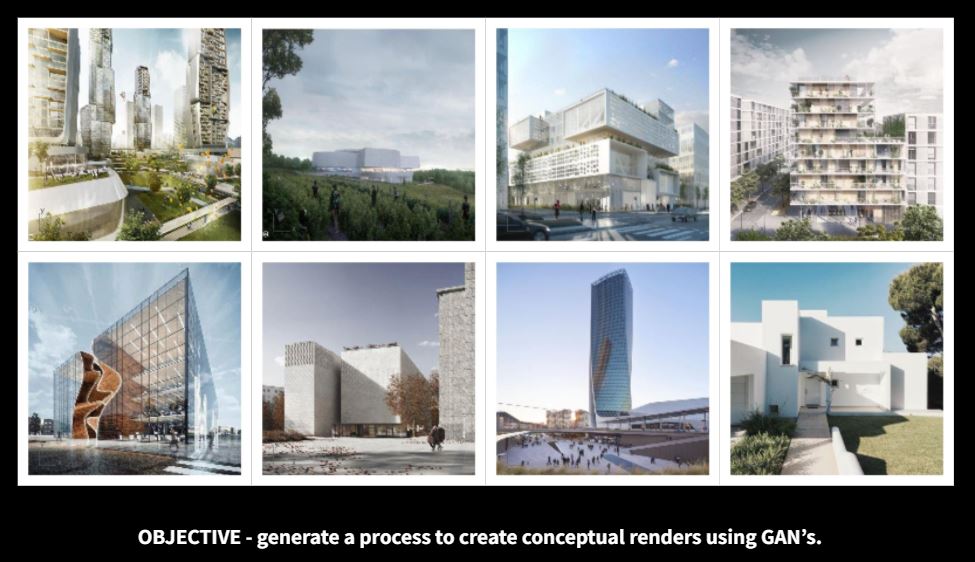

Concept Proposal

The project aims to produce rendered images from sketches, so that RENDERMAN can act as a visualizer that helps reduce the time spent on the general rendering process.

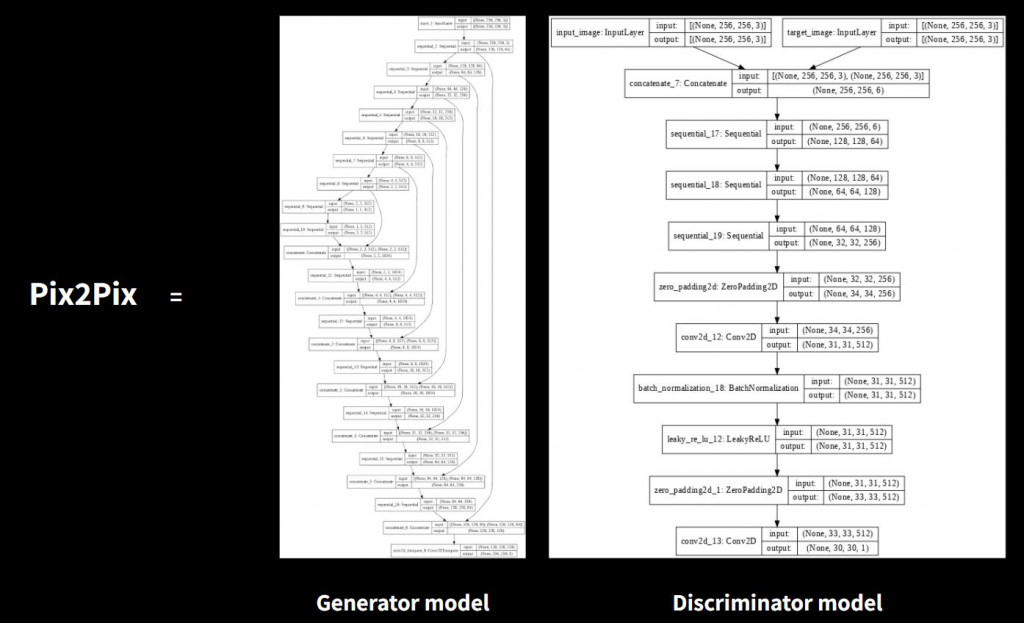

GAN – Pix2Pix Architecture

For our experiments we used Pix2Pix, a conditional Generative Adversarial Network, and trained it on our dataset to predict visualizations. The Pix2Pix architecture contains two main models: a Generator and a Discriminator.
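In the original Pix2Pix design, the Discriminator is a "PatchGAN". It classifies overlapping image patches as real or fake instead of judging the whole image at once. The patch size is the receptive field of one output unit. The short Python sketch below computes it from a stack of convolution layers. It assumes the paper's default 70×70 configuration: three 4×4 convolutions with stride 2, followed by two 4×4 convolutions with stride 1. The helper `receptive_field` and the constant `PATCHGAN_70` are hypothetical names, and the layer list is an assumption about the notebook the project used.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of one output unit of a convolution stack.

    `layers` is a list of (kernel_size, stride) pairs ordered from input to output.
    Walking backwards from a single output unit, each layer widens the field:
    r_in = (r_out - 1) * stride + kernel_size.
    """
    r = 1
    for kernel, stride in reversed(layers):
        r = (r - 1) * stride + kernel
    return r


# Assumed 70x70 PatchGAN stack: C64, C128, C256 (stride 2), then C512 and a
# 1-channel output convolution (stride 1); every kernel is 4x4.
PATCHGAN_70 = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]

print(receptive_field(PATCHGAN_70))  # 70
```

Judging patches rather than whole images lets the Discriminator focus on local texture and edge sharpness, which matters when the input is a sketch or a flat-coloured segmentation.
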

Dataset

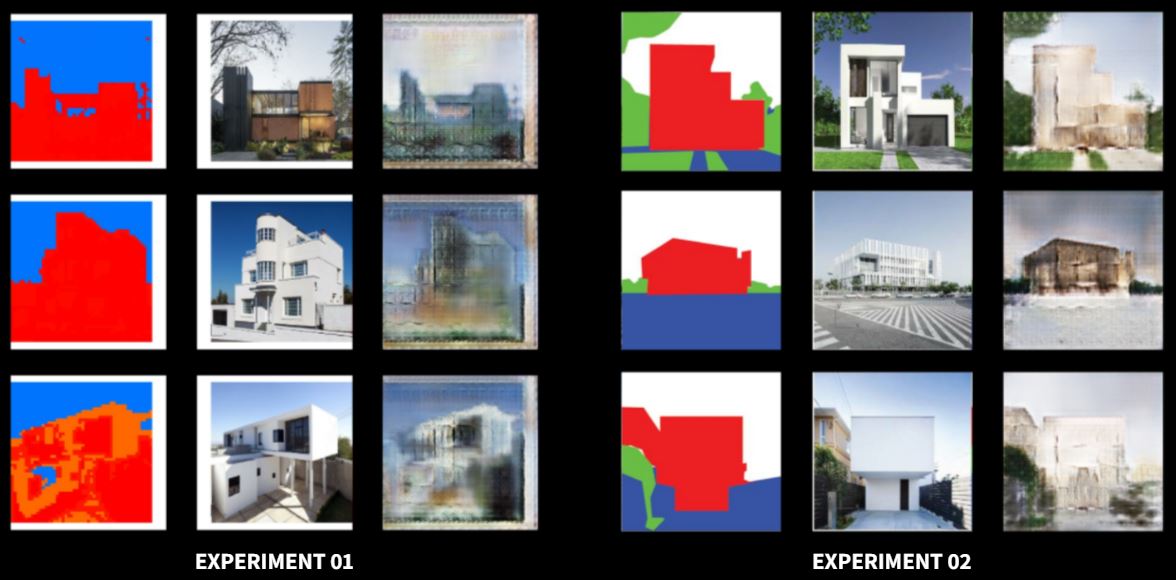

We ran three experiments that varied the quality of the sketches, the colors, and the style of the input images.
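Pix2Pix learns from paired examples: each input sketch or segmented image must be matched with its target rendering. One common storage layout places both images side by side in a single file; the facades dataset in the TensorFlow Pix2Pix tutorial is an example. The pure-Python sketch below splits such a combined image, represented as a list of pixel rows, into its two halves. The side-by-side layout and the function name `split_pair` are assumptions, not necessarily the format used for the RENDERMAN datasets.

```python
def split_pair(image, input_on_left=True):
    """Split a side-by-side training image into (input_image, target_image).

    `image` is a non-empty list of rows, each row a list of pixels, and every
    row must have the same even width. Which half holds the input differs
    between datasets, so it is a parameter here.
    """
    width = len(image[0])
    if width % 2 or any(len(row) != width for row in image):
        raise ValueError("expected rows of equal, even width")
    half = width // 2
    left = [row[:half] for row in image]
    right = [row[half:] for row in image]
    return (left, right) if input_on_left else (right, left)


if __name__ == "__main__":
    # 's' marks sketch pixels and 'r' marks rendering pixels in a tiny 2x4 example.
    combined = [["s", "s", "r", "r"], ["s", "s", "r", "r"]]
    sketch, render = split_pair(combined)
    print(sketch, render)
```

Keeping input and target in one file guarantees they stay aligned when datasets are shuffled or copied between machines, such as between local storage and a Colaboratory session.
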

Results

Based on these inputs, we found that sharper edges and specific, representative colors helped the model recognize the image as a building more accurately. These two experiments therefore guided the next experiment toward the RENDERMAN proposal.

Final Experiment

In the end, we achieved our best result within the limitations of our tools. We trained for 400 epochs, the maximum our available GPU capacity allowed, and the training took approximately 4.30 hours. The images above show the results; we believe that with further training the predictions could improve considerably.

RENDERMAN. Building visualization from sketches is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed in the Master in Advanced Computation for Architecture & Design (MaCAD), module Artificial Intelligence in Architecture 2020/21, by Students: Felipe Romeo, Keshava Narayan Karthikeyan, Nawapan Suntorachai and Faculty: Stanislas Chaillou and Oana Taut