Somatic Instrument meets Musical Machine

A multi-platform project linking full-body user gestures in virtual reality to the playing of a tiny toy piano by a six-axis robotic arm.

The project can be considered in two halves.

Front end

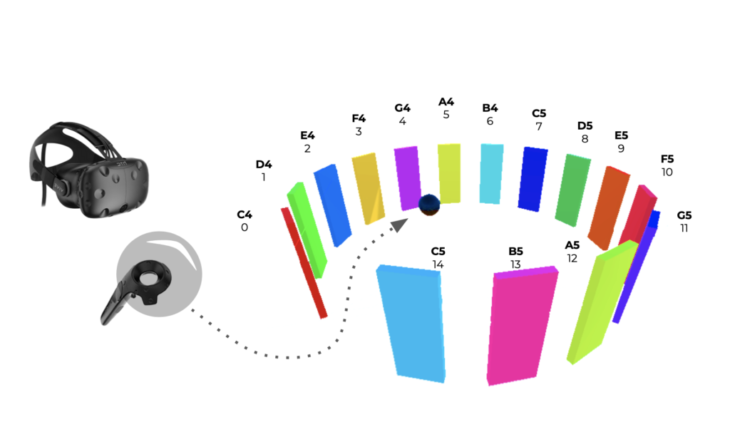

The side of the project with which the user interacts. Wearing an HTC Vive headset, the user encounters a giant arc of coloured, interactive surfaces in a virtual space. Holding the HTC Vive controllers, the user beats the surfaces in an order of their choosing. Upon being struck, each surface illuminates and a note is heard. Read from one end of the arc to the other, the surfaces produce the notes of a two-octave span.

The pattern of the user’s intersection with the surfaces is logged by the interface and sent to the project back end via a websocket tunnelled through ngrok.

Back end

The sequence of notes struck by the user is sent as a string of numbers to Grasshopper, where the numbers are converted into coordinates on a digital model of the tiny piano, which in turn matches the position of the real-life tiny piano. With a delicately pointed end effector, the robot plays the sequence of notes out loud.

Platform for user interaction

The drone cage area at IAAC was modelled in Rhino to create synchronicity between the physical and digital spaces for interaction. Fifteen large rectangular surfaces were created and arranged in a circular arc. These would symbolise the piano keys across two octaves. Each key was given a small depth, as the volume would facilitate a neater interaction mechanism once in the virtual space.
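The arc layout can be illustrated with a short calculation. This is only a sketch in Python, not the Rhino model itself: the radius, the 180-degree arc angle and the function name `arc_key_positions` are assumptions chosen for illustration, and the coordinates are plan coordinates with the user standing at the origin.

```python
import math


def arc_key_positions(n_keys=15, radius=3.0, arc_degrees=180.0):
    """Return (x, y, yaw_degrees) for keys evenly spaced on a circular arc.

    The radius and arc angle are assumed values, not taken from the model.
    """
    # The arc is centred on the +y direction; the first key is the leftmost one.
    start = math.radians(90.0 + arc_degrees / 2.0)
    step = math.radians(arc_degrees) / (n_keys - 1)
    positions = []
    for i in range(n_keys):
        angle = start - i * step
        x = radius * math.cos(angle)
        y = radius * math.sin(angle)
        # Yaw that turns the face of the key towards the user at the origin.
        yaw = math.degrees(math.atan2(-y, -x))
        positions.append((x, y, yaw))
    return positions


for index, (x, y, yaw) in enumerate(arc_key_positions()):
    print(f"key {index:2d}: x={x:6.2f}  y={y:6.2f}  yaw={yaw:7.2f}")
```

Every key sits at the same distance from the user, which keeps each surface within the same arm's reach.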

The model was exported from Rhino as .fbx and imported into the Unity project. The Unity project had been prepared with SteamVR, ROS (although this would not be used), and ngrok.

In Unity, a sphere object was created and placed in the middle of the arc of keys. The object represented the HTC Vive controller, and allowed us to program and test the interactions directly from our laptops, without having to use the headset until a later stage of the project. It would later be replaced by an invisible sphere object assigned to the controller.

Rhino models import with their viewport information included, which appears in Unity as a number of cameras. It’s necessary to delete these, leaving only the main camera, as the user should have a single viewpoint into the virtual space.

Two C# scripts were created.

The first script, DetectCollision.cs, specified that when a sphere object collided with a piano key, the key’s material should change, its associated audio source should play, and its assigned note should be added to the ‘NoteManager’.

The second script, NoteManager.cs, collected the list of assigned notes and sent them via websocket, using the reverse proxy ngrok, to the robot side of the project. This script was assigned to the trigger on the controller.
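The division of labour between the two scripts can be mirrored in a few lines. The original scripts were written in C# for Unity and are not reproduced here; the Python sketch below only imitates the collect-then-send logic of the note manager. The class and method names, the injected `send` callable, and the choice to clear the list after sending are all assumptions.

```python
class NoteManager:
    """Collects struck key IDs and sends them as one message on trigger press."""

    def __init__(self, send):
        # `send` is any callable that transmits a string, for example the
        # send method of a websocket client (hypothetical here).
        self._send = send
        self._notes = []

    def add_note(self, key_id):
        """Called by the collision logic each time a key is struck."""
        if not 0 <= key_id <= 14:
            raise ValueError(f"key id out of range: {key_id}")
        self._notes.append(key_id)

    def on_trigger(self):
        """Send the composition as a semicolon-delimited string."""
        if not self._notes:
            return None
        message = ";".join(str(note) for note in self._notes)
        self._send(message)
        self._notes.clear()  # assumption: start a fresh composition afterwards
        return message


# Usage: print stands in for the websocket connection.
manager = NoteManager(send=print)
for struck in (1, 4, 2, 3):
    manager.add_note(struck)
manager.on_trigger()
```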

For each of the virtual piano keys, the following programming was undertaken:

- Assign material (colour hexadecimal)

- Assign trigger material (hexadecimal + Emission)

- Assign audio source (CDEFGAB x 2 octaves, .mp3)

- Assign note (number 0-14)

- Assign DetectCollision.cs, to activate the characteristics above in the interactive manner specified by the script
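The per-key assignments above amount to a small lookup table. The following Python sketch builds one under stated assumptions: the base octave, the audio file names and the function name `key_table` are hypothetical, and the fifteenth key (number 14) is assumed to be the C that closes the second octave. Colours are left out because the actual hexadecimal values are not given.

```python
NOTE_NAMES = "CDEFGAB"  # the seven natural notes, repeated for each octave


def key_table(n_keys=15, base_octave=4):
    """Return one record per key: its number, note name and audio file."""
    table = []
    for note_id in range(n_keys):
        name = NOTE_NAMES[note_id % len(NOTE_NAMES)]
        octave = base_octave + note_id // len(NOTE_NAMES)
        table.append(
            {
                "id": note_id,
                "note": f"{name}{octave}",
                "audio": f"{name}{octave}.mp3",  # hypothetical file name
            }
        )
    return table


for row in key_table():
    print(row["id"], row["note"], row["audio"])
```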

Simulations were performed to check the interactions. Once we were satisfied with the effects, an invisible sphere object was created and assigned to the controller, and the test sphere was deleted. Now, when the controller collided with the keys, the actions of DetectCollision.cs would be performed.

Having played the notes in the order they wanted, the user would press the trigger to send the musical composition to be performed by the robot arm.

Platform for robotic performance

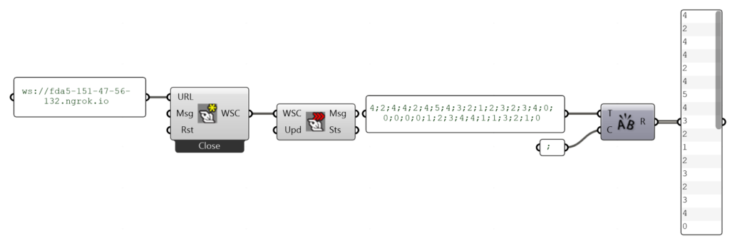

The key IDs were sent from Unity over the websocket as a single string, with semicolons as delimiters between the IDs, in the form:

1;4;2;3;4;5;4...

They were received in Grasshopper and parsed into a list of IDs. Each ID was assigned to a key on the piano, represented as a plane that corresponded to that key’s physical location.
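Parsing the incoming string is a small step, sketched here in Python. This is not the Grasshopper definition used in the project: the function name `parse_key_ids`, the tolerance for empty tokens and the range check are assumptions added for illustration.

```python
def parse_key_ids(message, n_keys=15):
    """Turn a semicolon-delimited string such as "1;4;2" into a list of ints."""
    ids = []
    for token in message.split(";"):
        token = token.strip()
        if not token:
            continue  # tolerate a trailing or doubled delimiter
        key_id = int(token)
        if not 0 <= key_id < n_keys:
            raise ValueError(f"key id out of range: {key_id}")
        ids.append(key_id)
    return ids


print(parse_key_ids("1;4;2;3;4;5;4"))
```

Rejecting out-of-range IDs at this point means a malformed message cannot send the robot towards a key that does not exist.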

Approach and retract planes were added for each key. Together, these planes formed the toolpath that translated the user’s movements in the VR space into physical movements of the UR robot.
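The toolpath construction can be sketched as follows. For brevity, each plane is reduced to its origin point and all planes are assumed to share one orientation. The piano origin, the key spacing (`pitch`), the hover height and both function names are hypothetical values in metres, not measurements from the project.

```python
def key_position(key_id, origin=(0.40, -0.08, 0.05), pitch=0.012):
    """Point on top of a key, assuming the keys run along the y axis."""
    ox, oy, oz = origin
    return (ox, oy + key_id * pitch, oz)


def build_toolpath(key_ids, hover=0.03):
    """Expand each key ID into approach, press and retract targets."""
    path = []
    for key_id in key_ids:
        x, y, z = key_position(key_id)
        above = (x, y, z + hover)  # safe point directly over the key
        path.append(("approach", above))
        path.append(("press", (x, y, z)))
        path.append(("retract", above))
    return path


for label, point in build_toolpath([1, 4, 2]):
    print(label, point)
```

Approaching and retracting vertically keeps the end effector from dragging across neighbouring keys as it travels between notes.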

Those planes were translated into URScript using the COMPAS FAB framework and sent to the robot over a direct Ethernet connection.
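To give a sense of what the generated program might look like, the Python sketch below writes URScript `movel` commands by hand. It deliberately does not use COMPAS FAB, whose output may differ. The tool orientation, acceleration and speed values, the program name `play_notes` and both function names are assumptions. Port 30002 is the secondary client interface of a UR controller, and the robot address must be supplied by the reader.

```python
import socket


def to_urscript(points, orientation=(0.0, 3.1416, 0.0), accel=0.5, speed=0.1):
    """Wrap a list of (x, y, z) targets, in metres, in a URScript program."""
    rx, ry, rz = orientation  # assumed rotation vector: tool pointing down
    lines = ["def play_notes():"]
    for x, y, z in points:
        pose = f"p[{x:.4f}, {y:.4f}, {z:.4f}, {rx:.4f}, {ry:.4f}, {rz:.4f}]"
        lines.append(f"  movel({pose}, a={accel}, v={speed})")
    lines.append("end")
    return "\n".join(lines) + "\n"


def send_program(program, host, port=30002):
    """Send the program text to the robot controller over TCP."""
    with socket.create_connection((host, port), timeout=5) as connection:
        connection.sendall(program.encode("utf-8"))


# Approach, press and retract targets for a single key (hypothetical values).
targets = [(0.40, -0.08, 0.08), (0.40, -0.08, 0.05), (0.40, -0.08, 0.08)]
print(to_urscript(targets))
```

Calling `send_program` requires a reachable robot, so it is left out of the usage above. Any such program should be checked in a simulator before it is run on real hardware.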

Robotic Body Beats is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at the Masters in Robotics and Advanced Construction in 2021/2022 by: Students: Alfred Bowles, Grace Boyle, Andrea Nájera, Ipek Attaroglu; Faculty: Starsk Lara; Assistant faculty: Daniil Koshelyuk.