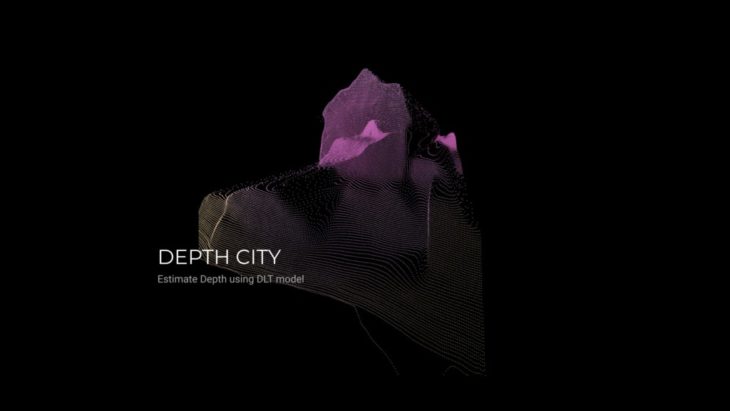

DEPTH CITY

Estimate Depth using the DPT model

Design Intention

To create 3D space from a 2D image.

Method:

Depth estimation is a crucial step toward inferring scene geometry from 2D images. The goal of monocular depth estimation is to predict the depth value of each pixel or infer depth information, given only a single RGB image as input.

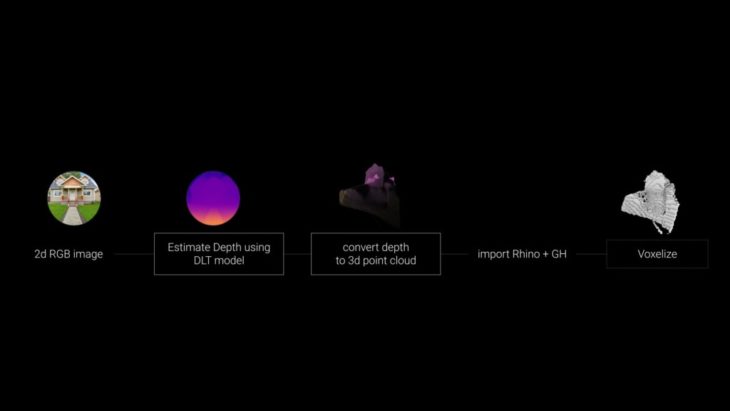

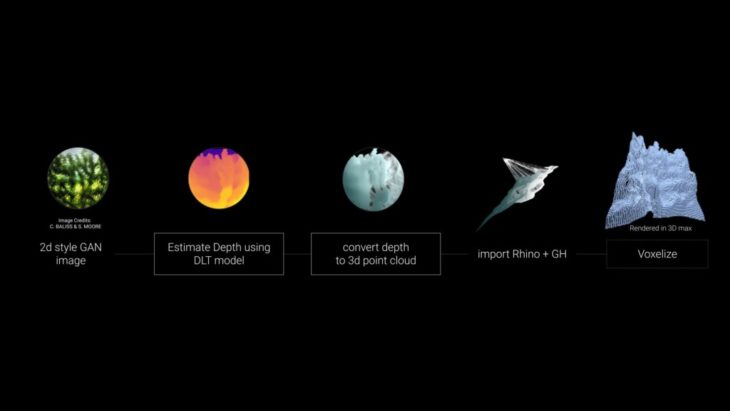

WORKFLOW

Model Dataset

Datasets that contain depth information are often incompatible with one another in scale and bias. This is due to the diversity of measuring tools, including stereo cameras, laser scanners, and light sensors. MiDaS introduces a new loss function that absorbs these differences, thereby eliminating the compatibility issues and allowing multiple datasets to be used for training simultaneously.
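
To make the idea of a loss that absorbs scale and bias more concrete, here is a minimal sketch in plain Python. It assumes a simple least-squares variant of the idea: before a prediction is compared with a ground-truth map, the scale `s` and shift `t` that best align the two are fitted, and only the remaining error is measured. The function names are hypothetical and this is not the MiDaS training code.

```python
def align_scale_shift(pred, target):
    """Least-squares fit of s, t so that s * pred + t approximates target."""
    n = len(pred)
    sum_p = sum(pred)
    sum_t = sum(target)
    sum_pp = sum(p * p for p in pred)
    sum_pt = sum(p * t for p, t in zip(pred, target))
    det = n * sum_pp - sum_p * sum_p
    if det == 0:
        # Constant prediction: only a shift can be fitted.
        return 0.0, sum_t / n
    s = (n * sum_pt - sum_p * sum_t) / det
    t = (sum_t - s * sum_p) / n
    return s, t


def scale_shift_invariant_mse(pred, target):
    """Mean squared error after the best scale and shift have been removed."""
    s, t = align_scale_shift(pred, target)
    return sum((s * p + t - g) ** 2 for p, g in zip(pred, target)) / len(pred)


# One prediction compared against two hypothetical sensors that report
# the same scene with different scale and bias.
pred = [0.1, 0.4, 0.5, 0.9]
sensor_a = [2.0 * p + 1.0 for p in pred]
sensor_b = [0.5 * p - 3.0 for p in pred]

loss_a = scale_shift_invariant_mse(pred, sensor_a)
loss_b = scale_shift_invariant_mse(pred, sensor_b)
scale_a, shift_a = align_scale_shift(pred, sensor_a)
```

Both losses come out as essentially zero, because the two sensors differ from the prediction only by a scale and a shift. That is the property that lets differently calibrated datasets share one training objective.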

WORKFLOW I

We start from a 2D image and estimate its depth using the DPT model. We then convert the depth map into a 3D point cloud, import it into Rhino and Grasshopper, and voxelize it there.

The Model

The model is based on MiDaS DPT-Hybrid, whose backbone is a Vision Transformer.

To understand this process, recall that datasets containing depth information are not compatible in terms of scale and bias, because they are captured with different measuring tools such as stereo cameras and light sensors.

MiDaS introduces a new loss function that absorbs these differences, which allows training on multiple datasets simultaneously. In short, MiDaS is a machine learning model that estimates depth from an arbitrary input image.

2D RGB IMAGES

Dataset

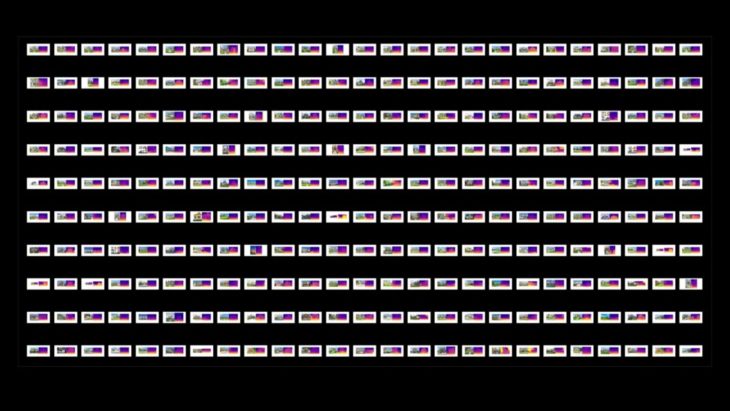

The first exploration is based on 250 2D RGB images, in other words monocular images, downloaded from Google.

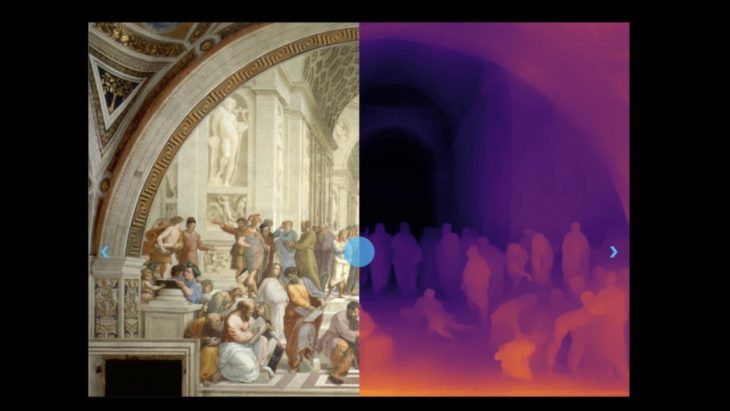

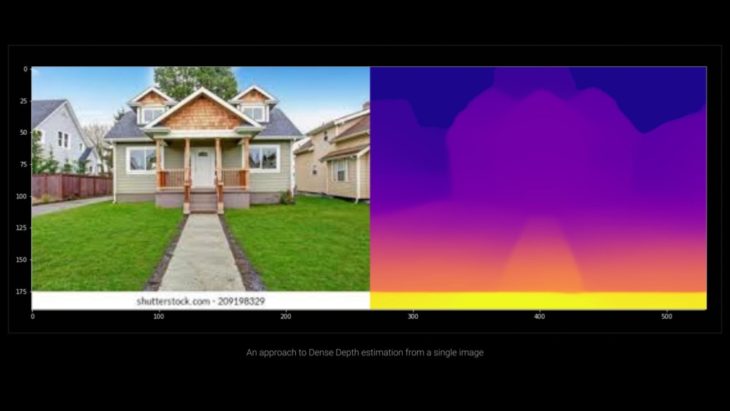

Side-by-side comparison of image and depth map

Then we process the images to get the depth estimation and prepare the data to recreate a point cloud.

Here is the depth estimation for our dataset before it is converted into a point cloud.

Depth to Point Cloud

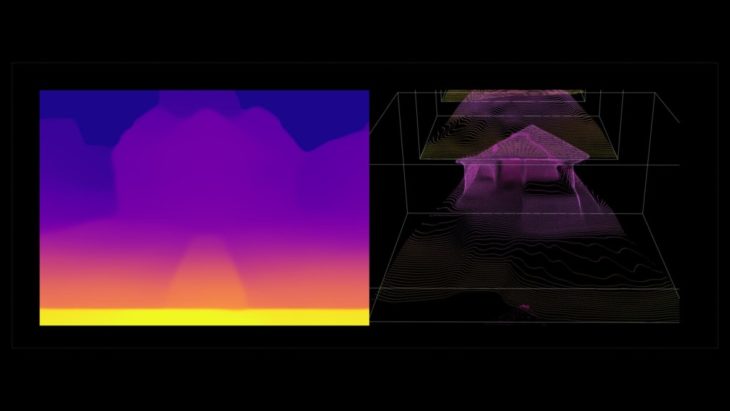

Side-by-side comparison of image and depth map

Here we can visualize the next step, which recreates a point cloud from the depth estimation.
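
One common way to turn a depth map into a point cloud is to back-project every pixel through a pinhole camera model. The sketch below illustrates that approach in plain Python. The function name, the focal length default, and the assumption that the principal point sits at the image center are all hypothetical, since the camera parameters of the source images are unknown. It also assumes the values are depth-like (larger means farther). Models in the MiDaS family predict relative inverse depth, so a conversion step would be needed before a step like this.

```python
def depth_to_points(depth, focal=None):
    """Back-project a depth map (list of rows) into (x, y, z) points.

    Assumes a pinhole camera whose principal point is the image center.
    If no focal length is given, the larger image dimension is used as a
    rough guess (an assumption, not a calibrated value).
    """
    h = len(depth)
    w = len(depth[0])
    f = float(focal) if focal is not None else float(max(h, w))
    cx = (w - 1) / 2.0
    cy = (h - 1) / 2.0
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid depth value
            x = (u - cx) * z / f
            y = (v - cy) * z / f
            points.append((x, y, z))
    return points


# A tiny 3x3 depth map: the right column is farther away, and the
# center pixel has no valid depth.
depth = [
    [1.0, 1.0, 2.0],
    [1.0, 0.0, 2.0],
    [1.0, 1.0, 2.0],
]
cloud = depth_to_points(depth, focal=1.0)
```

Plain lists keep the sketch dependency-free. For full-size images a vectorized array library would be the more practical choice.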

Rhino & GH

Recreating the point cloud is not straightforward. We scaled the point cloud and used a 64×64 grid to find the closest points, which refines the resolution of the point cloud.
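
The grid step was carried out in Rhino and Grasshopper, and the exact definition is not reproduced here. As one possible reading of it, the sketch below lays a regular grid over the XY extent of the cloud and, for every grid node, takes the height of the closest point. The function name and the choice to keep the grid's own XY coordinates are assumptions made for illustration.

```python
def resample_on_grid(points, n=64):
    """Resample a point cloud on an n x n grid over its XY bounding box.

    For each grid node the closest input point (measured in XY) is found
    and its z value is assigned to the node. Requires n >= 2.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x0, x1 = min(xs), max(xs)
    y0, y1 = min(ys), max(ys)
    resampled = []
    for j in range(n):
        gy = y0 + (y1 - y0) * j / (n - 1)
        for i in range(n):
            gx = x0 + (x1 - x0) * i / (n - 1)
            # Brute-force nearest neighbour; fine for a sketch, slow for
            # large clouds where a spatial index would be preferable.
            nearest = min(
                points,
                key=lambda p: (p[0] - gx) ** 2 + (p[1] - gy) ** 2,
            )
            resampled.append((gx, gy, nearest[2]))
    return resampled


corners = [
    (0.0, 0.0, 1.0),
    (1.0, 0.0, 2.0),
    (0.0, 1.0, 3.0),
    (1.0, 1.0, 4.0),
]
coarse = resample_on_grid(corners, n=2)
fine = resample_on_grid(corners, n=64)
```

With `n=64` the result always contains 4096 points, whatever the density of the input, which gives every image in the dataset the same resolution.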

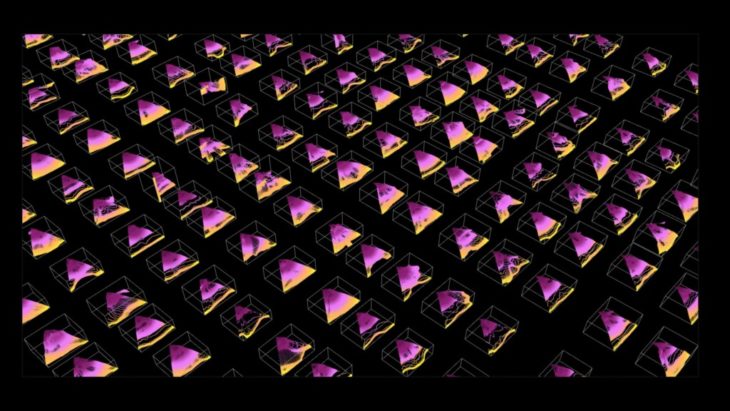

A visualization of the entire dataset, one step before the resolution post-processing.

The final step of this exploration is to create voxels from the depth estimation map.
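
Voxelization in this project was done in Grasshopper. As a rough illustration of the underlying idea, the sketch below snaps each point to an integer voxel index and keeps the set of occupied cells. The function names and the voxel size are hypothetical.

```python
import math


def voxelize(points, voxel_size):
    """Return the set of integer (i, j, k) voxel indices occupied by points."""
    occupied = set()
    for x, y, z in points:
        occupied.add((
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        ))
    return occupied


def voxel_centers(voxels, voxel_size):
    """Convert voxel indices back to the coordinates of their centers."""
    return [
        ((i + 0.5) * voxel_size, (j + 0.5) * voxel_size, (k + 0.5) * voxel_size)
        for i, j, k in sorted(voxels)
    ]


points = [
    (0.1, 0.1, 0.1),
    (0.2, 0.3, 0.4),   # falls in the same voxel as the first point
    (1.2, 0.1, 0.1),
    (-0.1, 0.0, 0.0),  # negative coordinates land in voxel index -1
]
voxels = voxelize(points, voxel_size=0.5)
centers = voxel_centers(voxels, voxel_size=0.5)
```

Using a set means that any number of points falling in the same cell produce a single voxel, so the voxel size directly controls how coarse the resulting model is.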

WORKFLOW II

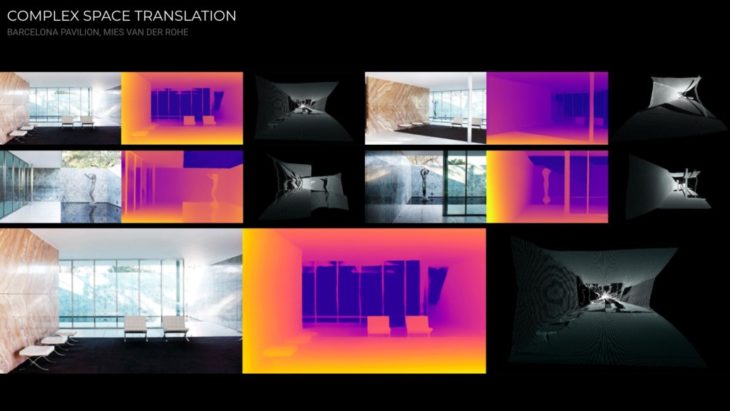

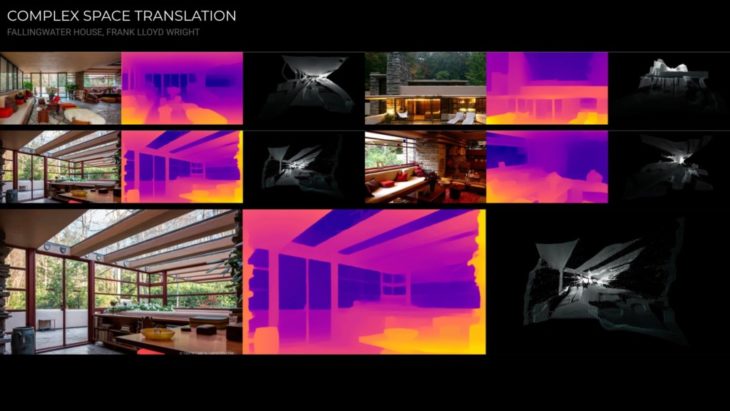

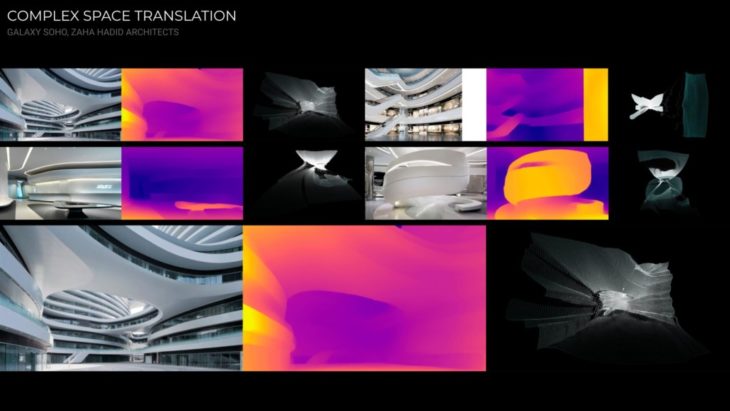

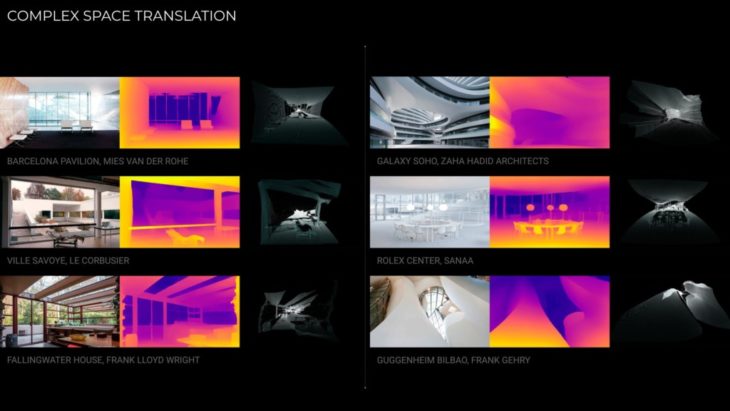

DEPTH ESTIMATION FOR COMPLEX SPACE TRANSLATION

In this workflow, we started with 2D images of works in various styles by famous architects worldwide. We again estimate depth using the DPT model, then convert the result into a 3D point cloud.

COMPLEX SPACE TRANSLATION

Here is a selection of famous architectural styles

BARCELONA PAVILION, MIES VAN DER ROHE

FALLINGWATER HOUSE, FRANK LLOYD WRIGHT

VILLA SAVOYE, LE CORBUSIER

ROLEX LEARNING CENTER, SANAA

GUGGENHEIM BILBAO, FRANK GEHRY

GALAXY SOHO, ZAHA HADID ARCHITECTS

WORKFLOW III

DEPTH ESTIMATION FROM 2D STYLE GAN

2D STYLE GAN Credits: CHARBEL BALISS AND SOPHIE MOORE

In this workflow we follow the same process as in the first workflow, except that instead of arbitrary 2D images we use 2D style GAN images generated by another model.

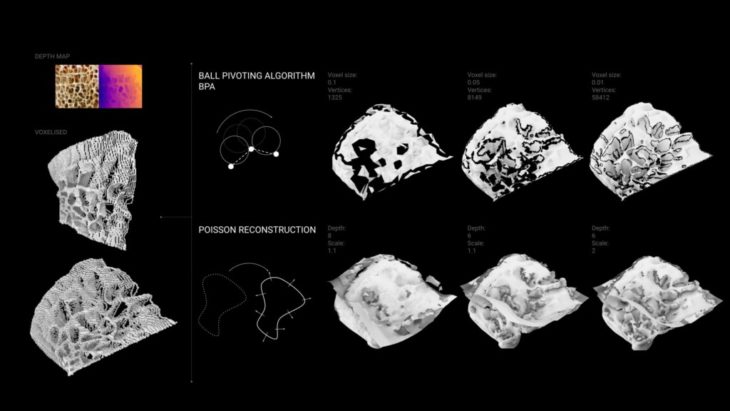

MESHING ATTEMPTS

Further Steps

- Create our own dataset to train a 3D GAN, so that we can recreate and visualize our results in the latent space

- Refine the depth estimation values for the point cloud

- Test a model that can create a mesh from the point cloud

- Mix the 2D GAN and depth estimation workflows, which can help in the early design stages by producing more creative and unexpected results

Depth City is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at MaCAD (Masters in Advanced Computation for Architecture & Design) in 2022 by Salvador Calgua, Pablo Antuña Molina, Jumana Hamdani, and faculty: Oana Taut, Aleksander Mastalski.