This project aims to develop an AR office assistant. It explores core ideas about the future of workspaces, what being a digital nomad means today, and how technology can better assist the users of co-working spaces.

Context

When speaking of the future of work, in a context where most existing corporate offices will likely be abandoned in favour of more cost-efficient remote-working options, a brief analysis of existing co-working spaces is due. Looking at what characterizes these workspaces, three main descriptors emerge.

Co-working spaces mean:

- Hot desks and flexibility

- Traffic and temporality

- Residents and casual users

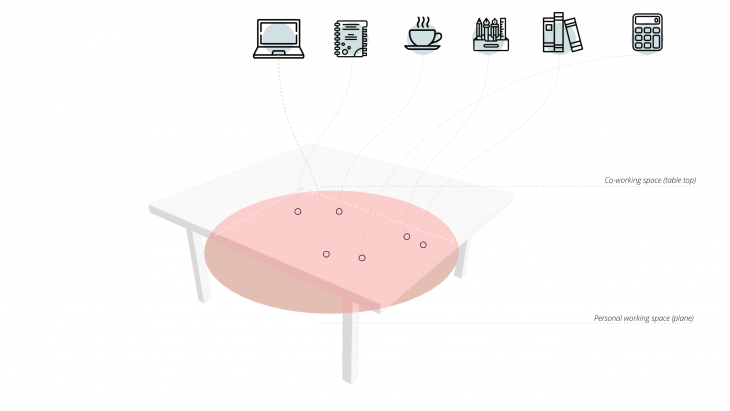

From this generalized overview of the co-working phenomenon, a brief understanding of what an office assistant must do emerged. A digital assistant must help users settle quickly into their working habits, whether they are regular or casual users of a co-working space. It must understand the worker’s habits in relation to the space they occupy, predict and assist their activities, and respect the etiquette of sharing a space pleasantly with other independent workers.

Project in Focus

When discussing assistants, one can envision multiple scales of operation: an assistant could be beneficial on the personal, individual scale or on the social scale, acting as a digital connection point between physical work practices. In this instance, constrained by Covid-19 limitations, the project focuses on developing a virtual assistant for individual users, using immediately available technology such as one’s phone and AR app development toolkits.

Within this aim, the project focuses on:

- Memorizing: user habits and work patterns

- Projecting: customized workspaces

- Syncing: calendar and tools to assist one’s work session.

Development

The first part of the project concerns experimenting with data recording tools in order to extract work patterns; the second part concerns the development of the AR app assistant for Android. Both parts contribute to the project concept: building an AI assistant using digital tools only, such as AR Core.

Concept: digital AI assistant in AR Core

Workflow

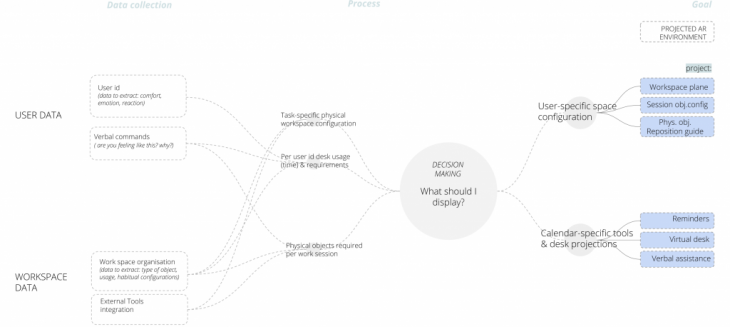

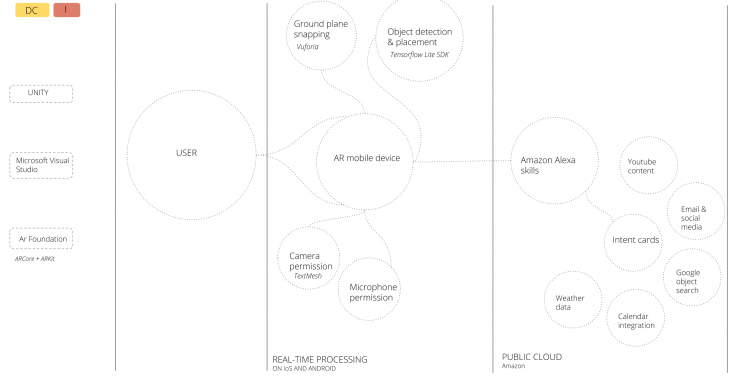

The general workflow goes from collecting user and workspace data, to processing it in order to extract information such as object placement within one’s workspace and the time for which each desk object has been used, and then to a decision-making process about what the AR assistant should display, based on a combination of machine learning and direct user input via voice control.
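As a rough illustration of the processing step, the time each desk object was in use could be approximated from per-frame detections. This is only a sketch under stated assumptions: the input format (one set of class labels per video frame), the function name `usage_seconds` and the idea of treating "visible in frame" as a proxy for "in use" are all hypothetical and not taken from the project files.

```python
from collections import Counter
from typing import Dict, Iterable, Set


def usage_seconds(frames: Iterable[Set[str]], fps: float) -> Dict[str, float]:
    """Estimate how long each object class was visible, in seconds.

    frames: one set of detected class labels per video frame (assumed format).
    fps: frame rate of the recording.
    """
    counts: Counter = Counter()
    for labels in frames:
        # A set holds each label once, so a class counts once per frame.
        counts.update(labels)
    return {label: n / fps for label, n in counts.items()}


# Hypothetical four-frame recording sampled at 2 frames per second.
recording = [{"laptop", "mug"}, {"laptop"}, {"laptop", "notebook"}, {"laptop", "mug"}]
print(usage_seconds(recording, fps=2.0))
```

Counting presence per frame keeps the estimate independent of the detector used; a real pipeline would likely also need to smooth over frames where an object is briefly occluded.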

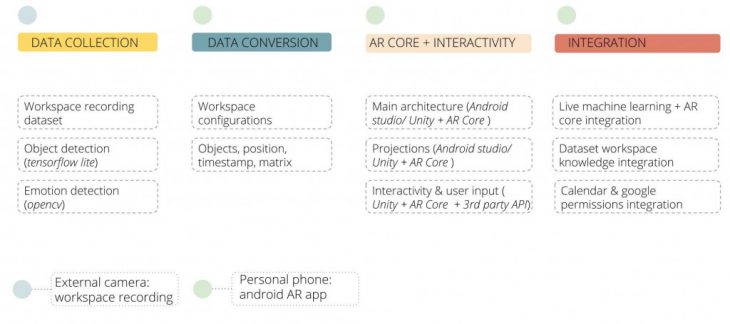

Therefore, four main lines of initial exploration are set for the current project: data collection, data conversion, AR Core development and integration of the existing modules. With the purpose of creating a tool that can be used by anyone, the only equipment the project sets out to use is an external camera (phone or other recording device) for the initial data collection and one’s personal phone, to later run the AR app.

1: Data collection

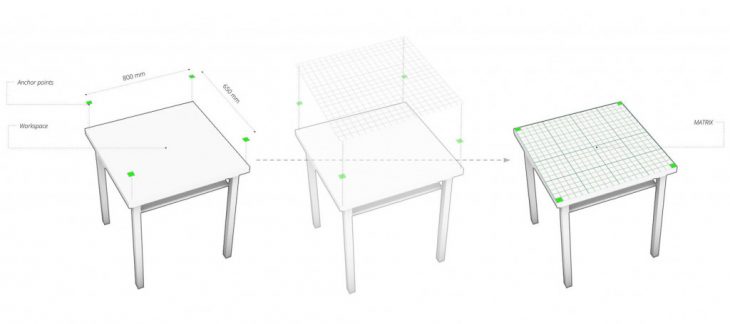

For data collection, the workspace is first defined as an area on a table surface, where different day-to-day objects can be recognized.

Four green markers are placed on the data collection set-up to delimit the workspace area of a user.
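One way to use the four markers, once their centres have been located in the image, is to test whether a detected object falls inside the area they enclose. The sketch below assumes the marker centres are already available as pixel coordinates and that the markers form a convex quadrilateral; the function names are hypothetical and the marker detection itself is not shown.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def order_corners(markers: List[Point]) -> List[Point]:
    """Sort the four marker centres by angle around their centroid."""
    cx = sum(x for x, _ in markers) / len(markers)
    cy = sum(y for _, y in markers) / len(markers)
    return sorted(markers, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))


def inside_workspace(point: Point, markers: List[Point]) -> bool:
    """Return True if point lies inside the convex quad spanned by the markers."""
    corners = order_corners(markers)
    for i in range(4):
        ax, ay = corners[i]
        bx, by = corners[(i + 1) % 4]
        # The cross product sign tells on which side of edge a->b the point lies.
        cross = (bx - ax) * (point[1] - ay) - (by - ay) * (point[0] - ax)
        if cross < 0:
            return False
    return True


# Hypothetical marker centres (pixels), given in arbitrary order.
markers = [(0, 0), (10, 10), (10, 0), (0, 10)]
print(inside_workspace((5, 5), markers), inside_workspace((12, 5), markers))
```

Sorting the corners first means the markers can be supplied in any order, which is convenient when they come straight from a colour-based detector.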

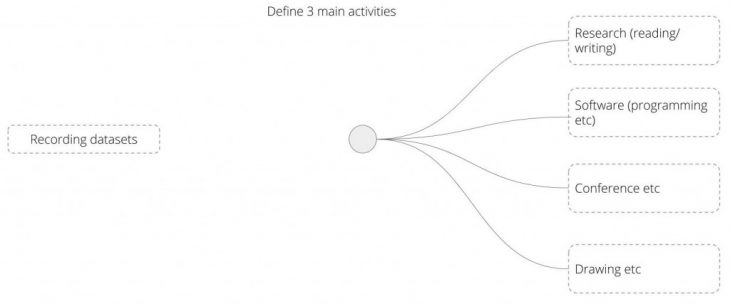

A series of test workspace activities is defined, in order to later associate the workspace configuration with the designed working activities, such as research work, programming, attending online meetings, drawing etc.
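To make the idea of associating a workspace configuration with an activity more concrete, the sketch below compares the set of objects observed on the desk with a reference set per activity, using Jaccard similarity. This is an illustrative assumption rather than the method used in the project: the activity profiles, object names and function names are all hypothetical.

```python
from typing import Dict, Set

# Hypothetical reference object sets for a few of the test activities.
ACTIVITY_PROFILES: Dict[str, Set[str]] = {
    "programming": {"laptop", "mouse", "mug"},
    "drawing": {"sketchbook", "pencil", "eraser"},
    "online meeting": {"laptop", "headphones", "phone"},
}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by the size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def guess_activity(observed: Set[str]) -> str:
    """Return the activity whose profile overlaps most with the observed objects."""
    return max(ACTIVITY_PROFILES, key=lambda name: jaccard(observed, ACTIVITY_PROFILES[name]))


print(guess_activity({"laptop", "headphones"}))
```

A learned classifier trained on the recorded datasets could replace this rule-based comparison; the set-overlap version is only meant to show the kind of mapping involved.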

Datasets are being recorded for each of the designed categories above:

2: Data conversion

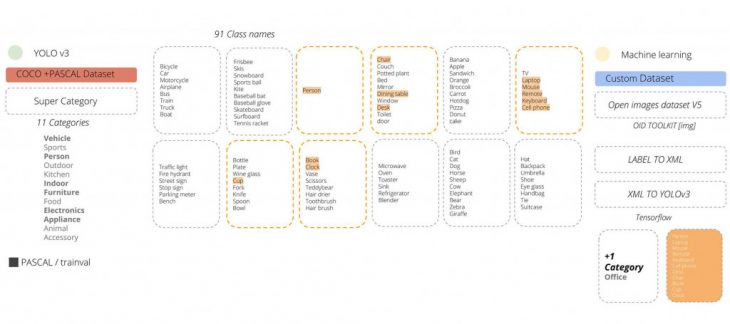

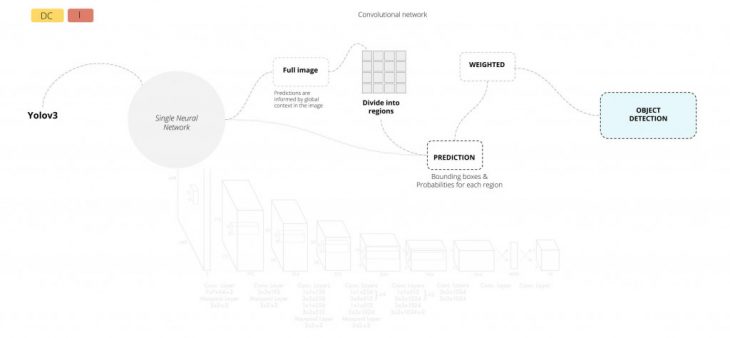

To extract information from the collected datasets, YOLO object detection is used, trained to recognize object classes from the office realm.
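Training a YOLO model on custom office classes typically relies on annotation files in the Darknet text format, where each line reads `class x_center y_center width height` with coordinates normalised to the image size. The sketch below converts one such line to a pixel bounding box. It assumes the project's labels follow this common format; the function name `yolo_to_pixels` is hypothetical.

```python
from typing import Tuple


def yolo_to_pixels(line: str, img_w: int, img_h: int) -> Tuple[int, float, float, float, float]:
    """Convert 'class cx cy w h' (normalised) to (class, x_min, y_min, x_max, y_max) in pixels."""
    class_id, cx, cy, w, h = line.split()
    # Scale the normalised centre and size back to pixel units.
    cx_px, w_px = float(cx) * img_w, float(w) * img_w
    cy_px, h_px = float(cy) * img_h, float(h) * img_h
    return (
        int(class_id),
        cx_px - w_px / 2,
        cy_px - h_px / 2,
        cx_px + w_px / 2,
        cy_px + h_px / 2,
    )


# Hypothetical label: class 0 centred in a 640x480 frame.
print(yolo_to_pixels("0 0.5 0.5 0.5 0.25", 640, 480))
```

Pixel boxes of this kind are what a later step would need in order to place detected objects within the marked workspace area.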

The YOLOv2 object detection workflow:

Object detection results:

*other data collection experiments performed in the process:

/optical flow has been explored as an option to better extract and track the location of objects within the assigned workspace

/Canny edge detection & optical flow combined:

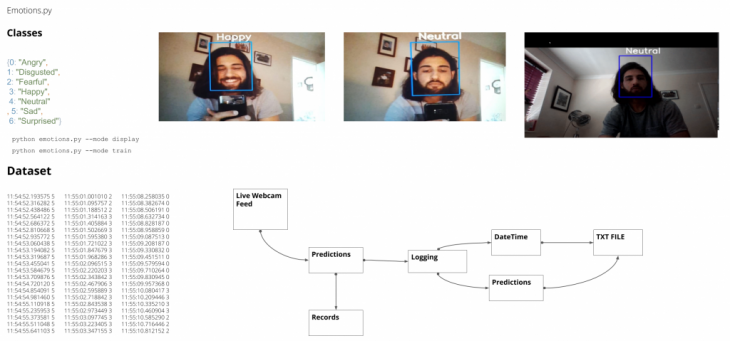

/emotion detection (*proved to not be user friendly)

3: AR Core

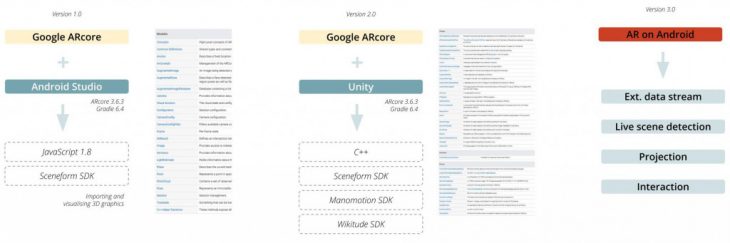

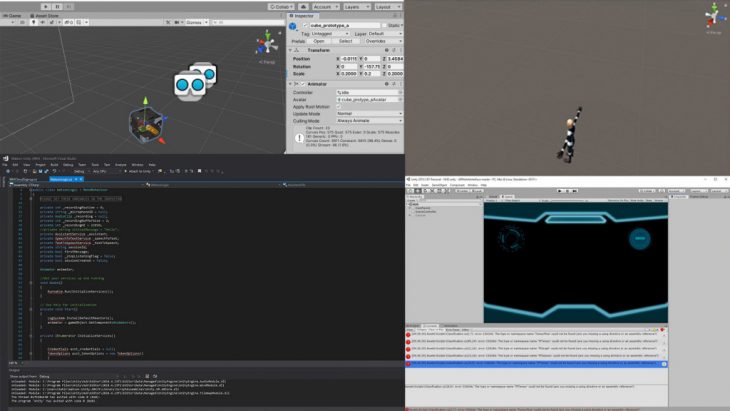

In developing the AR Core app, multiple tracks of experimentation were tested. AR Core was first run in Android Studio, then in Unity. The difference between the two, besides the scripting language changing from Java in Android Studio to C# in Unity, is the range of SDKs available on each development platform. Whilst a limited number of AR Core features are available in Android Studio, plenty of SDKs and functions are available for integration in Unity.

/ AR Core in Android Studio, first test results:

/ AR Core in Unity, secondary tests:

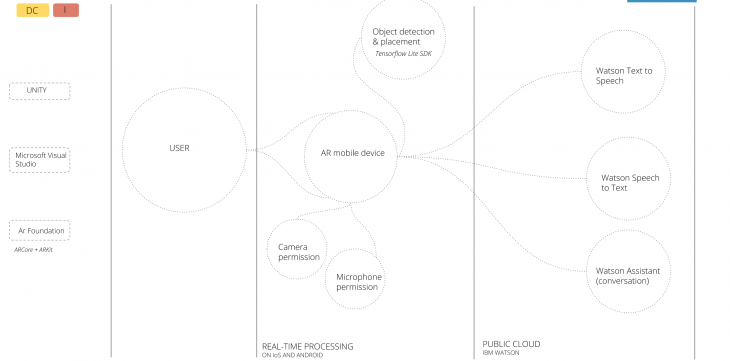

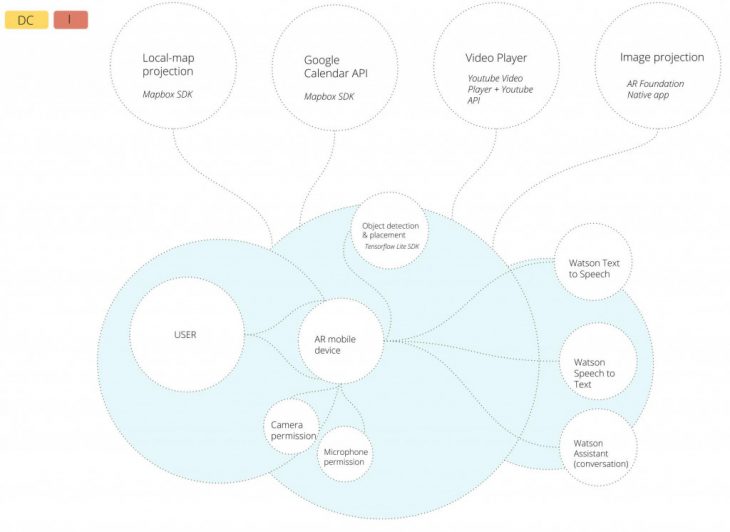

The first development strategy tackled in Unity was to develop voice command functionality, so that a user can communicate with the AR assistant, represented by an animated character. The workflow that follows therefore connects the user to the public cloud via the AR mobile device (with camera and microphone permissions). IBM Cloud is used to create a Watson Assistant, together with the IBM text-to-speech and speech-to-text services.

IBM Cloud detailed workflow & possible integrated functionality:

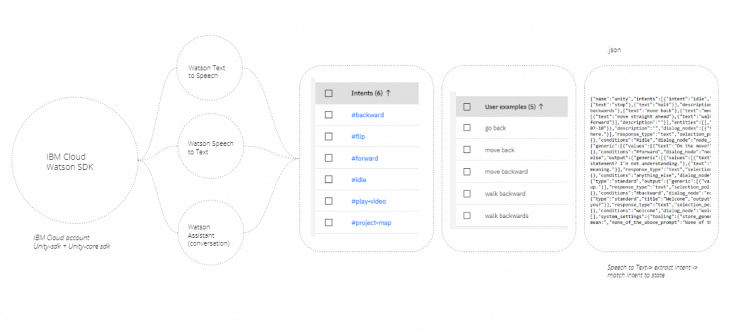

IBM Cloud training the assistant to recognize intent from the User’s voice and convert it to Unity animation commands:
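The last step of that chain, turning a recognized intent into an animation command, can be pictured as a simple lookup. The sketch below is written in Python for illustration only (the Unity side would be scripted differently) and does not call any IBM service. The intent names, animation trigger names, confidence threshold and function name are all hypothetical; it only assumes that the assistant returns an intent label with a confidence score.

```python
from typing import Dict, Optional

# Hypothetical mapping from assistant intents to character animation triggers.
INTENT_TO_TRIGGER: Dict[str, str] = {
    "greet": "Wave",
    "show_calendar": "PointAtCalendar",
    "start_session": "Nod",
}


def animation_for(intent: str, confidence: float, threshold: float = 0.6) -> Optional[str]:
    """Return the animation trigger for an intent, or None if it should be ignored."""
    if confidence < threshold:
        # Low-confidence matches are dropped rather than animated.
        return None
    return INTENT_TO_TRIGGER.get(intent)


print(animation_for("greet", 0.92))
print(animation_for("greet", 0.30))
```

Keeping the mapping in one table makes it easy to add new voice commands without touching the animation logic.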

Watson assistant tests and IBM cloud responsiveness issues:

Alternative Unity workflow using Alexa skills:

Conclusions

The current project is presented as a broad exploration of what the future of work might mean and of the technological developments that could be integrated into new solutions for the digital nomads of tomorrow. From this exploratory basis, more compact solutions can emerge by trimming and rationalizing these tools into an elegant system that collects and displays data about a user’s habits in a decentralized manner, allowing the person being tracked to directly access information about their work sessions.

*project developed as part of MRAC Hardware III Seminar 2019/2020 // the project and all its associated files -> click to access GitHub post

AR OFFICE ASSISTANT is a project of IaaC, Institute for Advanced Architecture of Catalonia, developed at Master in Robotics and Advanced Construction in 2019-2020

Students: Abdelrahman Koura, Abdullah Sheik, Andreea Bunica & Jun Lee

Faculty: Angel Muñoz

Faculty Assistant: Agustina Palazzo