Emotional_Trip

Introduction

Emotional Trip is a project about how users interact with an object to trigger a change in space. The object is a light installation, developed as a simulation in Unity.

Methodology

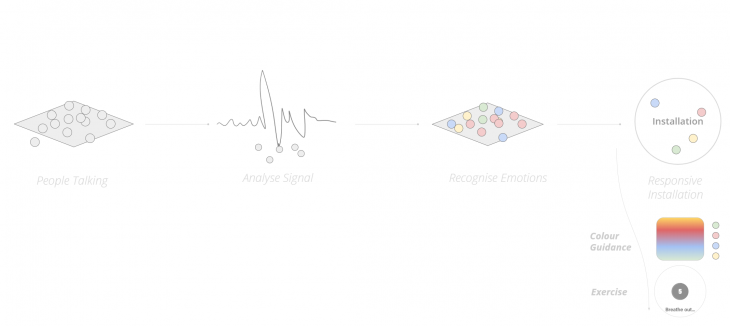

Our aim is to scale up the exercise to an interactive installation. The installation will monitor people talking, analyse their speech signal, translate it into the emotions they feel while talking, and then react by changing the colors of the light: a speech-to-color evaluation that happens every 20 seconds.

Inspiration

1. Aura Installation, by Nick Verstand studio

2. We Are All Made of Light, by Maja Petric

3. Sabin, by Edith Fikes

Installation Setup

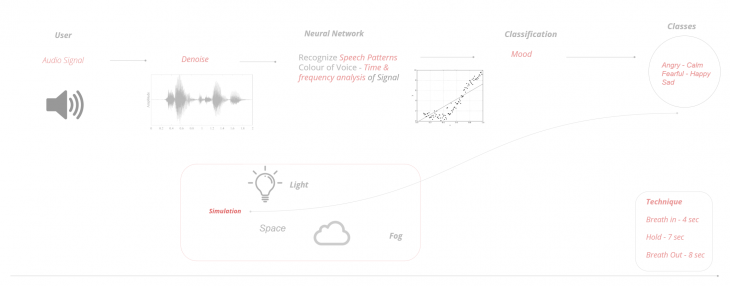

The data flow chart shows the pipeline. We start by monitoring the users' audio signal with a microphone. Next, we de-noise the signal to remove surrounding sounds and feed it to a neural network that analyses the audio and relates it to the mood of each user. The network classifies each mood as angry, calm, fearful, happy or sad, and the resulting label is sent as text to our Unity simulation.

The proposed design is an installation whose overall atmosphere is entirely determined by how the user is feeling at the time.

Neural Network Setup

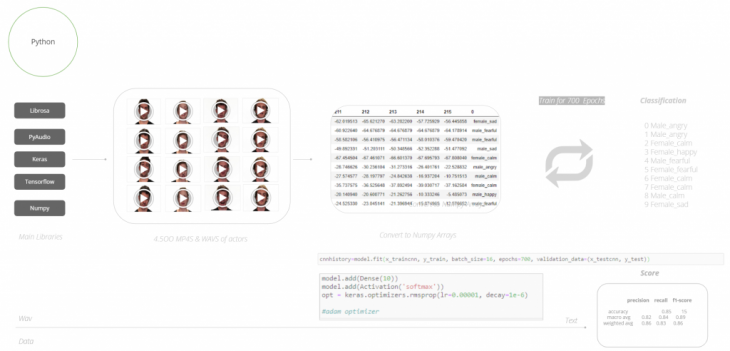

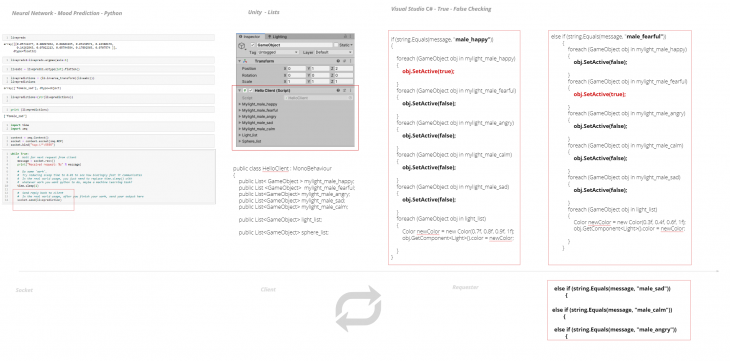

We started building our system by setting up the neural network. The code is written in Python using TensorFlow and Keras, while the two main libraries for sound analysis were librosa and pyaudio. The dataset consisted of 4500 .wav files and .mp4 videos of actors saying the exact same thing in different tones of voice, expressing different emotions. The model was then trained for 700 epochs. It classifies the speaker's gender, which is not used in the installation colors, and five moods: angry, sad, calm, happy and fearful.
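The step from the network output to a usable mood label can be sketched as follows. This is only an illustration: it assumes the network returns one probability per gender/emotion pair, and the label order and the `decode_prediction` helper are hypothetical, not taken from the project code.

```python
# Hypothetical label order: the real order depends on how the
# training labels were encoded in the project.
GENDERS = ("female", "male")
EMOTIONS = ("angry", "calm", "fearful", "happy", "sad")
LABELS = [f"{gender}_{emotion}" for gender in GENDERS for emotion in EMOTIONS]


def decode_prediction(probabilities):
    """Return (gender, emotion, confidence) for one output vector.

    `probabilities` is assumed to hold one value per entry in LABELS.
    """
    if len(probabilities) != len(LABELS):
        raise ValueError(f"expected {len(LABELS)} values, got {len(probabilities)}")
    # Index of the most probable class.
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    gender, emotion = LABELS[best].split("_")
    return gender, emotion, probabilities[best]


if __name__ == "__main__":
    example = [0.01] * len(LABELS)
    example[LABELS.index("male_happy")] = 0.91
    gender, emotion, confidence = decode_prediction(example)
    # Only the emotion would be passed on to the installation colors.
    print(emotion, confidence)
```

Since gender is not used for the colors, only the emotion part of the decoded label would need to travel further down the pipeline.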

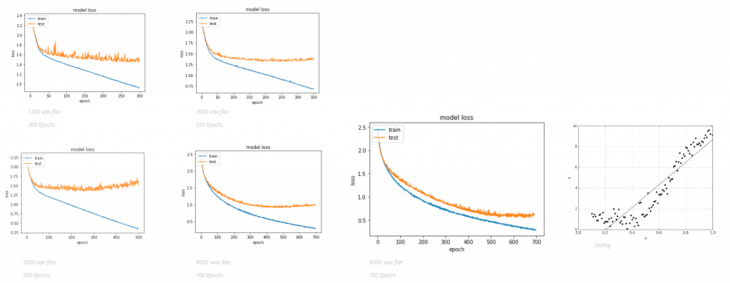

The graphs below show the validation results of the training runs we tried, varying the number of epochs, the datasets, the optimisers and the learning rates of the model. This helped increase its accuracy to almost 80%.

Evaluation of the Training

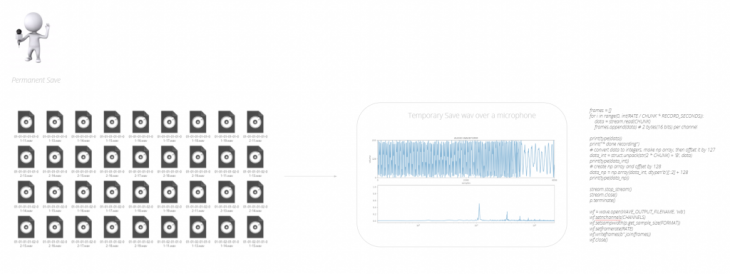

.WAV Import

Directly Through Microphone without permanent saving

Next, we focused on creating a buffer that temporarily holds a .wav recording on the server every 20 seconds, captured through our camera microphone. These recordings are then fed into the network for evaluation without being permanently saved each time.
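One way to hold a recording temporarily without writing it to disk is to build the .wav file in memory. The sketch below uses only the Python standard library; the sample rate, the helper names and the synthetic tone are assumptions for illustration. In the real setup the raw PCM bytes would presumably come from a pyaudio input stream rather than from `synthetic_tone`.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 16000   # assumed sample rate, not stated in the project
CHUNK_SECONDS = 20    # evaluation interval described in the text


def pcm_to_wav_bytes(pcm_frames, sample_rate=SAMPLE_RATE, channels=1, sample_width=2):
    """Wrap raw 16-bit PCM bytes in a WAV container held in memory."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(channels)
        wav_file.setsampwidth(sample_width)
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(pcm_frames)
    return buffer.getvalue()


def synthetic_tone(seconds, sample_rate=SAMPLE_RATE, frequency=220.0):
    """Stand-in for microphone input: a sine tone as 16-bit little-endian PCM."""
    count = int(seconds * sample_rate)
    return b"".join(
        struct.pack("<h", int(12000 * math.sin(2 * math.pi * frequency * i / sample_rate)))
        for i in range(count)
    )


def wav_duration_seconds(wav_bytes):
    """Read the in-memory WAV back and return its duration."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()


if __name__ == "__main__":
    chunk = pcm_to_wav_bytes(synthetic_tone(CHUNK_SECONDS))
    print(len(chunk), wav_duration_seconds(chunk))
```

The resulting bytes can be handed to any loader that accepts a file-like object, and they disappear once the next chunk replaces them.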

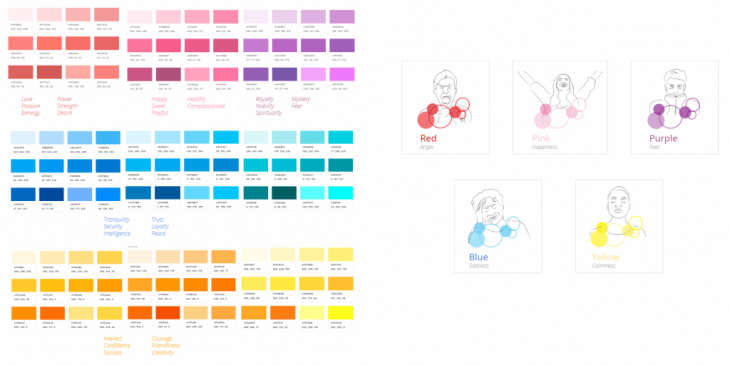

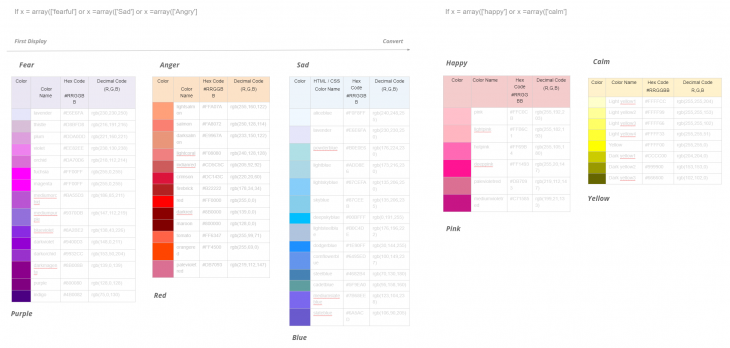

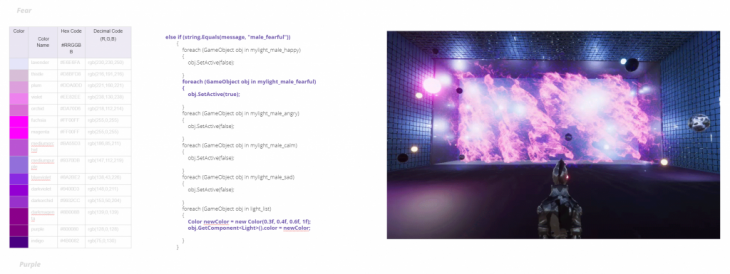

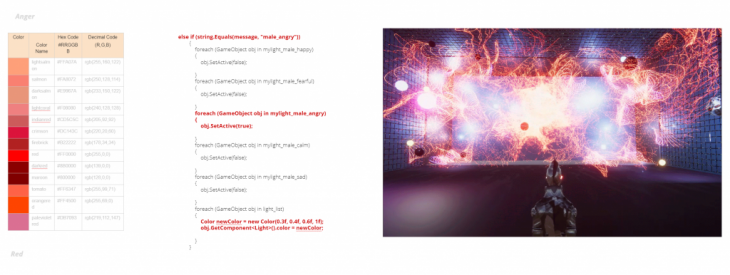

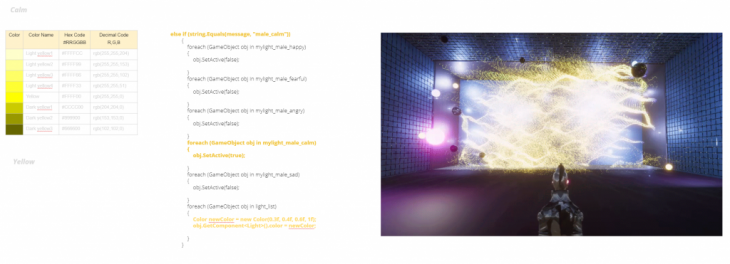

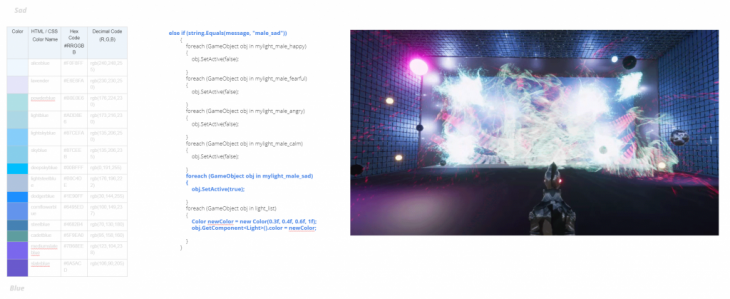

Color and Emotion

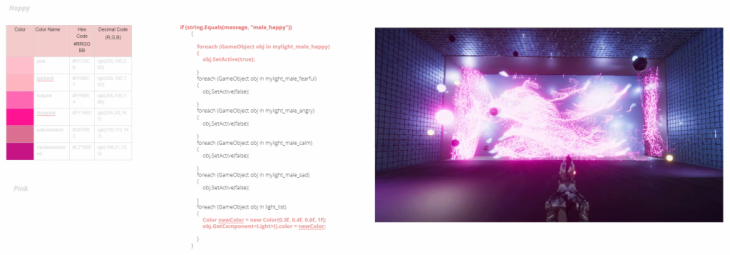

To establish a relationship between the user and the installation, we did a color study to find which color best represents each feeling returned by the neural network. As shown in the figure, we have a range of RGB values that we use when a certain feeling is detected.
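A lookup from emotion to color could be sketched like this. The RGB values below are placeholders chosen for illustration only, not the values from the color study, and `color_for` is a hypothetical helper. The conversion to the 0 to 1 range is included because Unity's `Color` type uses floating-point components.

```python
# Placeholder RGB values (0-255): NOT the values from the project's color study.
EMOTION_RGB = {
    "angry": (220, 30, 30),
    "calm": (60, 140, 220),
    "fearful": (120, 60, 160),
    "happy": (250, 200, 40),
    "sad": (40, 60, 120),
}
NEUTRAL_RGB = (255, 255, 255)  # assumed fallback when no emotion is recognized


def color_for(emotion):
    """Return the color for an emotion as floats in the 0-1 range."""
    red, green, blue = EMOTION_RGB.get(emotion, NEUTRAL_RGB)
    return (red / 255, green / 255, blue / 255)


if __name__ == "__main__":
    for name in EMOTION_RGB:
        print(name, color_for(name))
```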

Python to Unity Communication

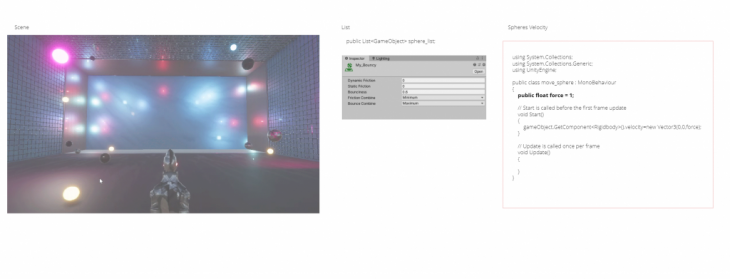

The Python to Unity communication is established through a web socket, which publishes the outcome of the neural network to Unity. In Unity, scripts written in Visual Studio hold lists of all the particle systems that the network outcome triggers, as well as the overall light setup, which changes according to the emotion. For the interaction, an “if” statement checks each incoming message from the network and sets the matching state to true so that the scenery changes.
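The message exchange can be sketched independently of the transport. The example below assumes a small JSON payload with hypothetical field names (`emotion`, `confidence`). It leaves out the socket itself, and `select_scene` only mimics in Python the kind of check the Unity side performs on each incoming message.

```python
import json

EMOTIONS = ("angry", "calm", "fearful", "happy", "sad")


def encode_result(emotion, confidence):
    """Build the text message that would be published to Unity."""
    if emotion not in EMOTIONS:
        raise ValueError(f"unknown emotion: {emotion}")
    return json.dumps({"emotion": emotion, "confidence": round(float(confidence), 3)})


def select_scene(message, current_scene="welcome"):
    """Mimic the receiving side: change scene only for a recognized emotion."""
    try:
        payload = json.loads(message)
    except json.JSONDecodeError:
        return current_scene  # ignore malformed messages
    emotion = payload.get("emotion") if isinstance(payload, dict) else None
    if emotion in EMOTIONS:
        return emotion
    return current_scene


if __name__ == "__main__":
    message = encode_result("calm", 0.8)
    print(message, "->", select_scene(message))
```

Keeping the current scene when a message is malformed or unknown means a bad evaluation never blanks the installation.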

Unity Setup

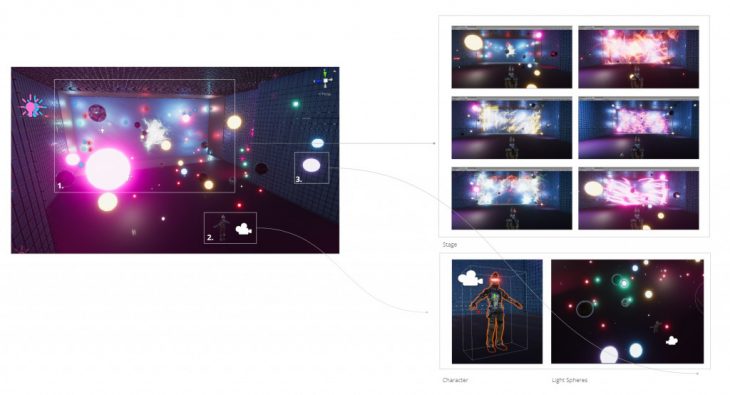

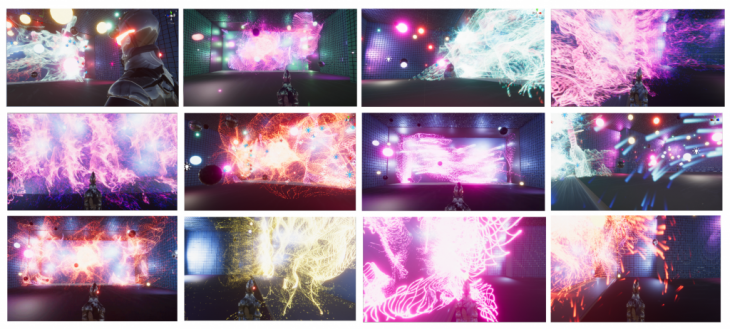

The main setup scene in Unity includes the installation, the character and the inflated colored light spheres. We added movement to the spheres by giving them an initial velocity and a spring, so they move and bounce around the scene, changing its light conditions while the character/user interacts with them.
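The behaviour of a sphere with an initial velocity and a spring can be illustrated outside Unity with a few lines of Python. This is a generic damped-spring sketch using semi-implicit Euler integration, not the Unity physics implementation; the stiffness, damping and time step values are assumptions.

```python
def step_sphere(position, velocity, anchor, stiffness, damping, dt, mass=1.0):
    """Advance one sphere by one time step (semi-implicit Euler)."""
    # Spring pulls toward the anchor; damping opposes the velocity.
    force = [
        -stiffness * (p - a) - damping * v
        for p, a, v in zip(position, anchor, velocity)
    ]
    new_velocity = [v + (f / mass) * dt for v, f in zip(velocity, force)]
    # Position is updated with the new velocity, which keeps the scheme stable.
    new_position = [p + nv * dt for p, nv in zip(position, new_velocity)]
    return new_position, new_velocity


def simulate(steps, dt=0.02, stiffness=4.0, damping=0.5):
    """Start at the anchor with an initial velocity and return the final state."""
    anchor = [0.0, 1.0, 0.0]
    position = list(anchor)
    velocity = [1.0, 0.0, 0.5]  # assumed initial velocity
    for _ in range(steps):
        position, velocity = step_sphere(position, velocity, anchor, stiffness, damping, dt)
    return position, velocity, anchor


if __name__ == "__main__":
    print(simulate(1))
    print(simulate(2000))
```

With damping, the sphere oscillates around its anchor and gradually settles. Without damping it would keep swinging.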

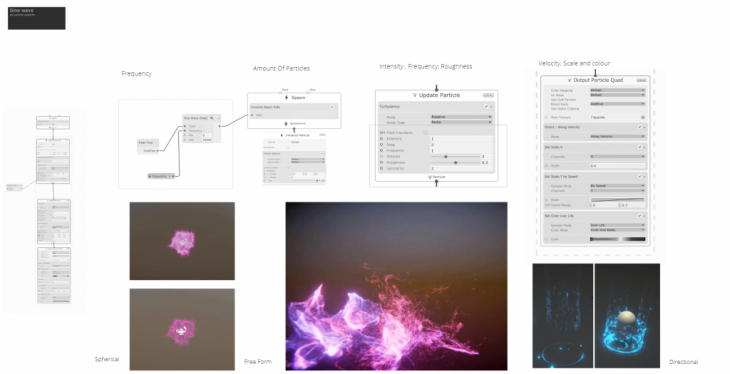

The presets for the installation have been designed using custom particle systems. Each particle system uses 70000 particles, which are simulated on the GPU as a single system, keeping it lightweight. These VFX particle systems can be customized in direction, density, generation and death loop time, color scale and speed.

Unity Scenes

Welcome Scene

Happy

Fearful

Angry

Calm

Sad

Real-time Testing

Light Installation

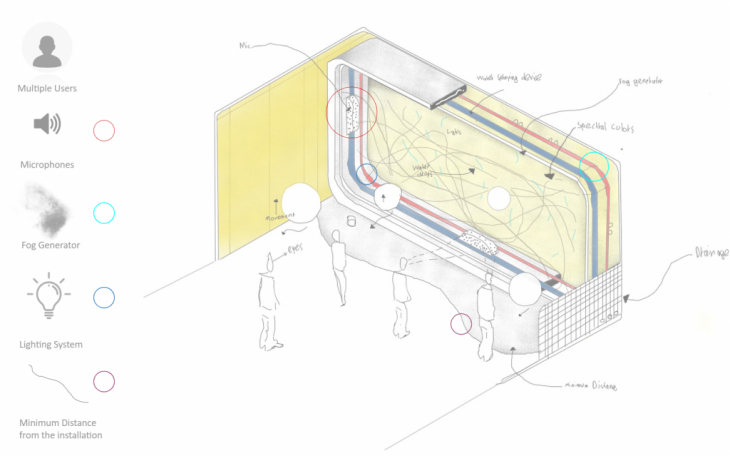

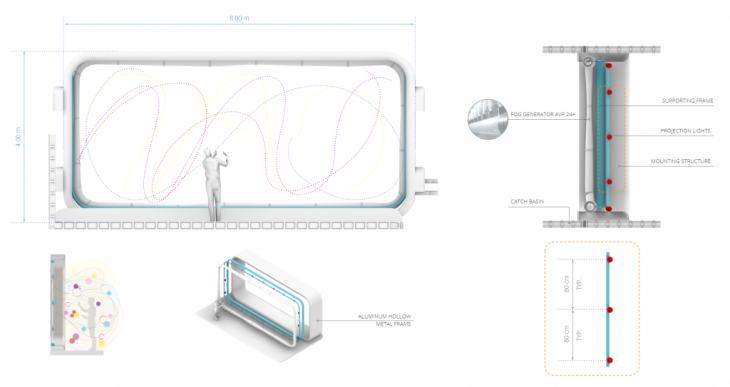

The next step is to develop a system that will help us realize the design and functionality of the installation.

This initial sketch of the overall setup shows the area of interaction with the installation. The microphones are placed at the perimeter of the frame, as are the fog generators and the lights. A water catch basin collects the water produced by the fog generators so that it can be reused in the installation. The adjacent walls and the floor are finished with sound-proofing materials.

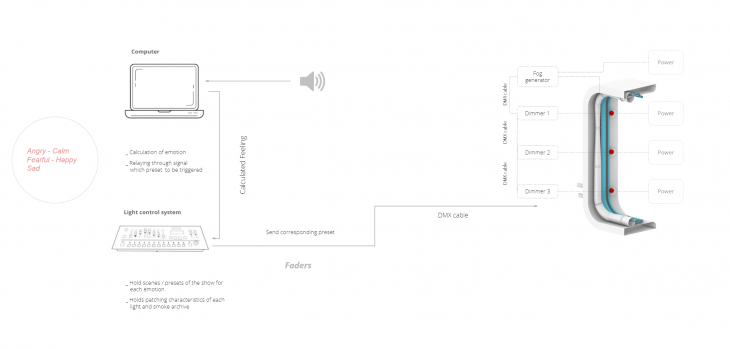

The user interacts with the installation by speaking into the microphone. The neural network reads and processes this voice input and sends a signal to the light control system, which holds the presets. Each preset is a set of parameters for the lights (color, rotation, filter, strobe and pan) and for the fog generators (fog speed and intensity). These signals are sent through a DMX cable to the corresponding parts of the installation. Each part is connected to a power supply. The lights are connected in series.
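How a preset could be turned into DMX data can be sketched as follows. A DMX512 frame carries a start code (0 for dimmer-style data) followed by up to 512 one-byte channel values. Everything else here is hypothetical: the channel layout, the preset values and the `build_dmx_frame` helper depend on the actual fixtures, and sending the frame to a DMX interface is left out.

```python
DMX_CHANNELS = 512
START_CODE = 0x00  # standard start code for regular channel data

# Hypothetical channel layout (1-based DMX addresses); real fixtures differ.
CHANNEL_MAP = {
    "red": 1,
    "green": 2,
    "blue": 3,
    "strobe": 4,
    "pan": 5,
    "fog_intensity": 6,
    "fog_speed": 7,
}

# Placeholder presets: values are illustrative only.
PRESETS = {
    "calm": {"red": 60, "green": 140, "blue": 220, "strobe": 0,
             "pan": 64, "fog_intensity": 80, "fog_speed": 40},
    "angry": {"red": 220, "green": 30, "blue": 30, "strobe": 200,
              "pan": 200, "fog_intensity": 200, "fog_speed": 180},
}


def build_dmx_frame(preset):
    """Return the start code plus 512 channel bytes for one preset."""
    channels = bytearray(DMX_CHANNELS)  # all channels default to 0
    for name, value in preset.items():
        if not 0 <= value <= 255:
            raise ValueError(f"{name} out of range: {value}")
        channels[CHANNEL_MAP[name] - 1] = value
    return bytes([START_CODE]) + bytes(channels)


if __name__ == "__main__":
    frame = build_dmx_frame(PRESETS["calm"])
    print(len(frame), list(frame[:8]))
```

Because every emotion maps to one fixed preset, switching moods amounts to sending a different frame to the same set of channels.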

Views and parts of the installation

Emotional Trip is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at the Master in Robotics and Advanced Construction in 2019 by:

Students: Alexandros Varvantakis, Gjeorgjia Lilo

Faculty: Angel Muñoz

Faculty Assistant: Agustina Palazzo