Robot See Robot Do // SOFTWARE III

This course was an opportunity to experiment with various machine learning tools and algorithms available in Owl. Using Owl, we learnt the Grasshopper-based workflow for neural networks (supervised learning).

After training on how neural networks are generated and how they work in Grasshopper, the students were divided into groups and chose the topics to work on.

Motivation

Manipulating the Robot Using Human Gestures

The human body holds a wealth of tacit knowledge, especially in master builders. The knowledge embodied by these masters is a precious repository of profound skills and know-how. With the development of robotic fabrication, we believe that recording this unstructured data through sensing and transferring it to robotic fabrication can open up more complex applications and help preserve craftsmanship that would otherwise be lost.

Concept

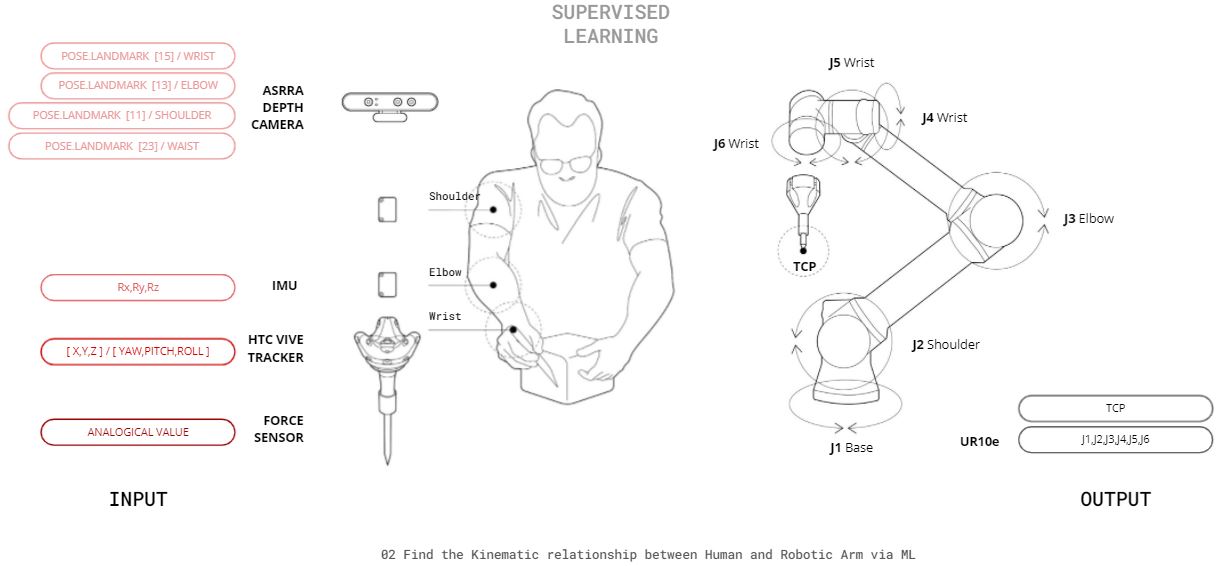

The idea was to use tools and sensors such as an RGBD camera with MediaPipe, an HTC Vive tracker, and an IMU to record human motions within a workspace (the human workspace). The recorded data can then be fed into a neural network in Grasshopper that provides a meaningful translation from human pose within the human workspace to robot joint pose within the robot workspace.

MediaPipe is an open-source framework that includes a pretrained machine learning model able to detect human body pose from a normal RGB camera or an RGBD camera. Human joint locations can be extracted with MediaPipe.

For the whole process, we decided to use clay engraving. The human participant works on a 2D surface in the human workspace, and the robotic arm works in real time on a piece of clay (400 x 400 mm) in the robot workspace.

Inputs

ROS was used to record the readings from all the sensors in a rosbag file. The following were used to collect inputs for human motion recording:

1. A force sensor was used with a custom-designed tool that has a clay pen mounted on it. As the user presses the tool against the surface, the force sensor inside the tool measures the force, and the reading is saved in a CSV file.

2. An HTC Vive tracker mounted on top of the tool gives position and rotation data while the user is using the tool in the work area.

3. A custom-made elbow band fitted with an MPU-9250 provided rotation data of the user's hand.

4. A combination of MediaPipe and an Astra depth camera provided the coordinates of the user's wrist, elbow, shoulder, and waist. The depth frame of the RGBD camera was superimposed on the RGB frame processed by MediaPipe to obtain the depth.
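Superimposing the depth frame on the RGB frame can be sketched as follows. This is only an illustration, not the project's code: it assumes the depth frame is already registered to the RGB frame, that landmarks arrive as normalized image coordinates (as MediaPipe reports them), and that the camera follows a pinhole model. The intrinsics `FX`, `FY`, `CX`, `CY` and the synthetic depth frame are hypothetical placeholder values, not the Astra's calibration.

```python
def landmark_to_pixel(x_norm, y_norm, width, height):
    """Convert normalized landmark coordinates (0..1) to clamped pixel indices."""
    u = min(max(int(x_norm * width), 0), width - 1)
    v = min(max(int(y_norm * height), 0), height - 1)
    return u, v


def deproject(u, v, depth_mm, fx, fy, cx, cy):
    """Back-project a pixel with known depth into camera-frame XYZ (mm)."""
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    return x, y, depth_mm


# Hypothetical image size and intrinsics (assumptions for illustration only).
WIDTH, HEIGHT = 640, 480
FX, FY, CX, CY = 570.0, 570.0, 320.0, 240.0

# Synthetic depth frame: a flat surface 1000 mm from the camera.
depth_frame = [[1000.0] * WIDTH for _ in range(HEIGHT)]

# A wrist landmark at 75% of the image width and 50% of its height.
u, v = landmark_to_pixel(0.75, 0.5, WIDTH, HEIGHT)
wrist_xyz = deproject(u, v, depth_frame[v][u], FX, FY, CX, CY)
print(wrist_xyz)
```

The same lookup would be repeated for the elbow, shoulder, and waist landmarks in every frame.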

Method

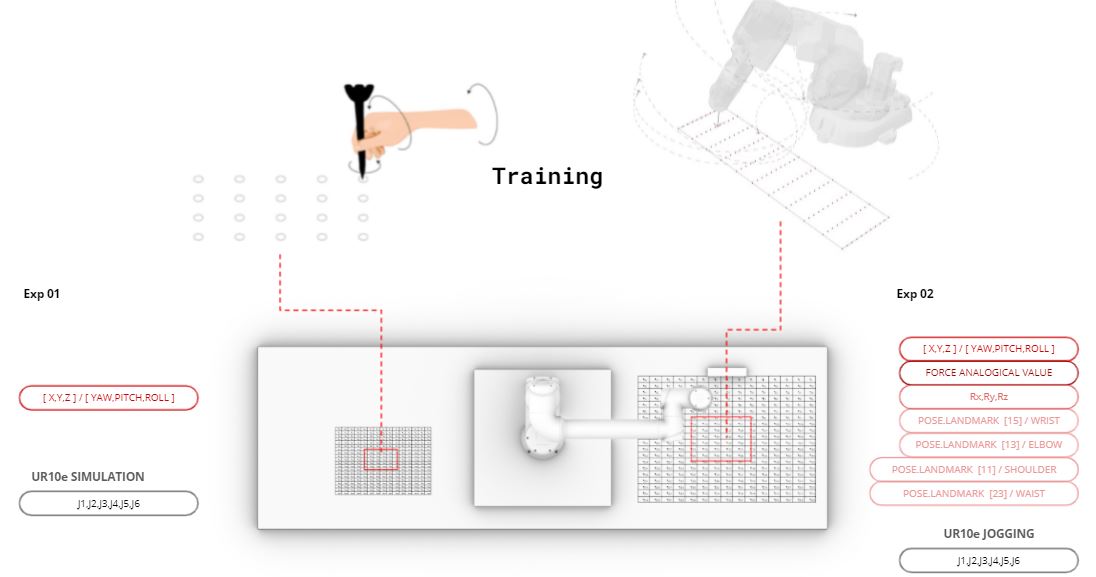

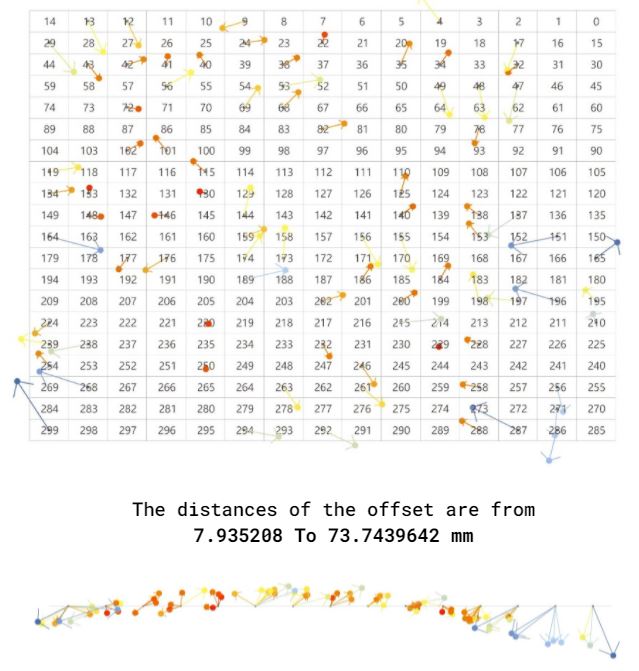

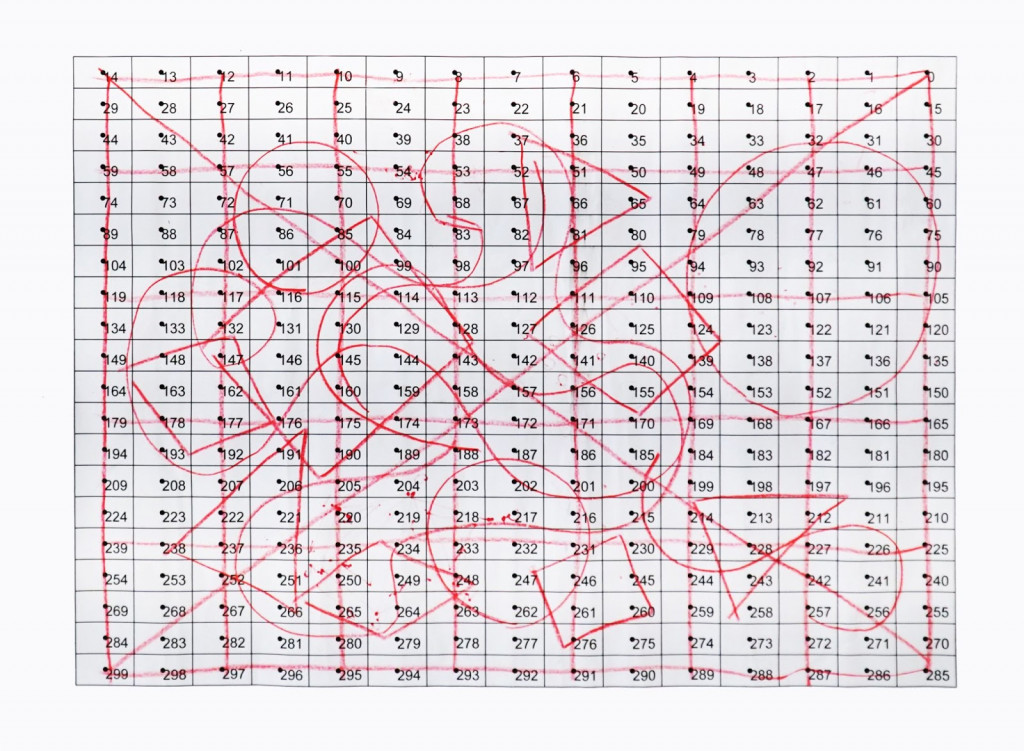

Grids were used to collect data at precise points. Each grid consisted of 300 points. The user-area grid measured 400 x 400 mm, whereas the robot-area grid measured 500 x 500 mm.
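The two grids can be paired point by point, as in the sketch below. The post gives only the point count and the grid sizes, so the 15 x 20 layout (`ROWS`, `COLS`) is a hypothetical choice that happens to give 300 points, and the uniform scaling in `user_to_robot` is an assumed, simplest-possible mapping between the two areas.

```python
def make_grid(size_mm, rows, cols):
    """Return rows*cols points evenly spaced over a square, in row-major order."""
    dx = size_mm / (cols - 1)
    dy = size_mm / (rows - 1)
    return [(c * dx, r * dy) for r in range(rows) for c in range(cols)]


def user_to_robot(x_mm, y_mm, user_size=400.0, robot_size=500.0):
    """Scale a point in the user grid to the matching point in the robot grid."""
    scale = robot_size / user_size
    return x_mm * scale, y_mm * scale


# Hypothetical layout: 15 rows x 20 columns = 300 points (an assumption).
ROWS, COLS = 15, 20
user_grid = make_grid(400.0, ROWS, COLS)
robot_grid = make_grid(500.0, ROWS, COLS)

# Point i in the user grid corresponds to point i in the robot grid.
pairs = list(zip(user_grid, robot_grid))
print(len(pairs), user_to_robot(200.0, 200.0))
```

Keeping both grids in the same row-major order means a recording made at user point `i` can be matched to the robot pose at robot point `i` without any extra bookkeeping.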

We decided to use the data in two ways:

- Use the Grasshopper robot simulation to create the neural network (NN), establishing a meaningful relation between the collected input data and the robot joint data obtained through simulation. A UR10e simulation was used.

- Collect jogging data by manually jogging the robot to each point. User data was collected in the same way: as the user moved the hand to each point within the grid, the data was recorded.

Training and Validation

First experiment – Training

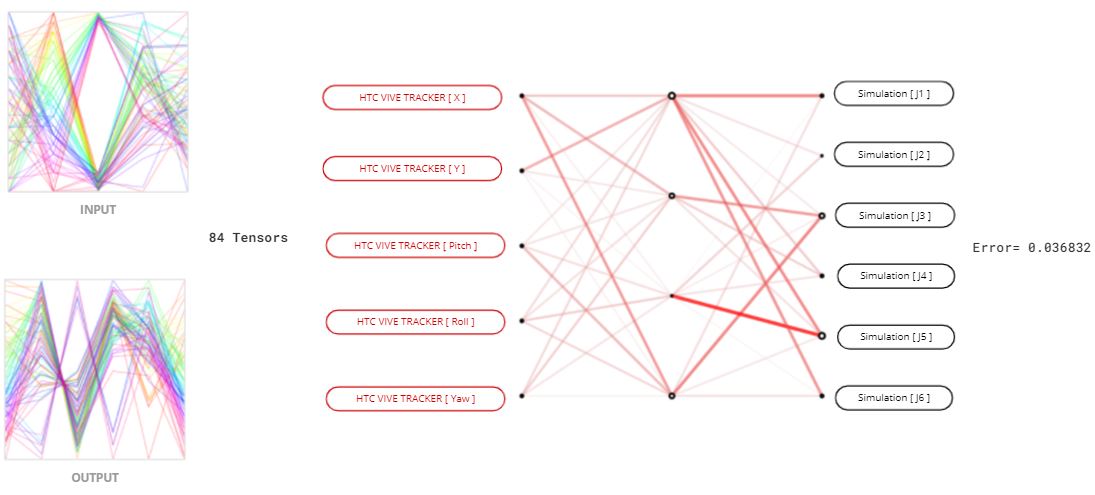

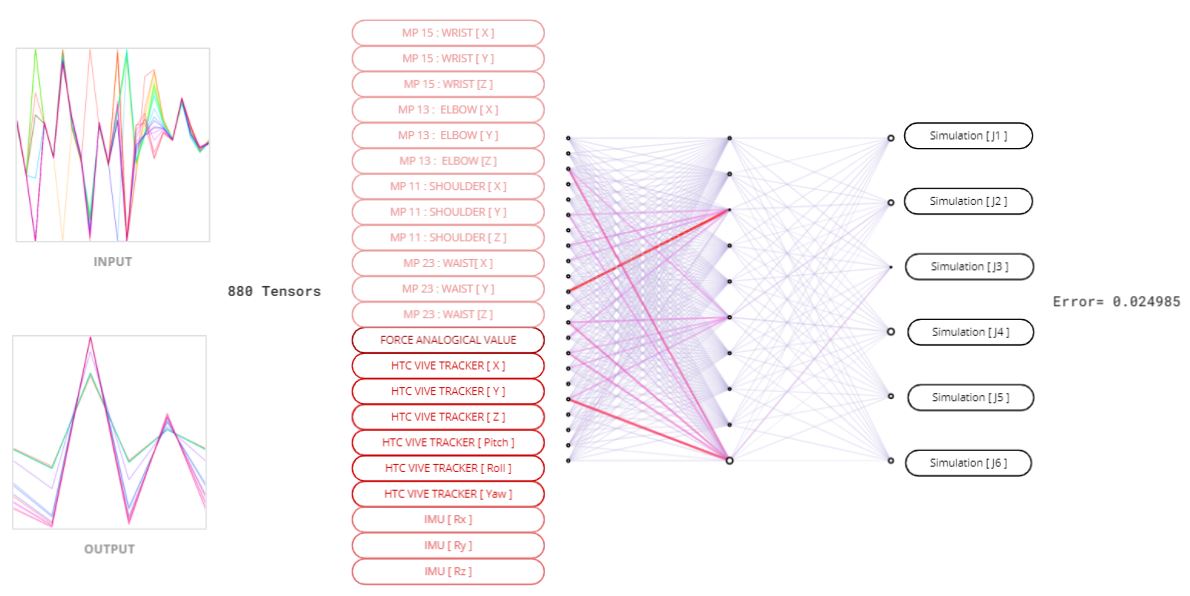

Once the input and output data had been collected, through simulation and the physical process, the neural network was trained. We used supervised machine learning with Grasshopper and the Owl plugin. To create the neural network, the input and output datasets are converted into tensor sets, which are fed into the Owl supervised learning component along with additional parameters such as the number of layers.

For the first model, only the HTC Vive tracker X, Y, Z positions and yaw, pitch, roll data were used. In total, 84 tensors were collected from the generated rosbag file.
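A rough sketch of how such input and output tensors could be paired is shown below. It is plain Python rather than Owl, and several things in it are assumptions: the sample values are invented, the output is taken to be six joint angles (the UR10e has six joints), and the min-max scaling step is a common preprocessing choice that the project may or may not have used.

```python
def column_ranges(rows):
    """Return the (min, max) of every column in a list of equal-length rows."""
    return [(min(col), max(col)) for col in zip(*rows)]


def scale_row(row, ranges):
    """Min-max scale one row to 0..1; constant columns map to 0.0."""
    scaled = []
    for value, (lo, hi) in zip(row, ranges):
        scaled.append(0.0 if hi == lo else (value - lo) / (hi - lo))
    return scaled


# Invented tracker samples: X, Y, Z (mm) and yaw, pitch, roll (degrees).
poses = [
    [100.0, 50.0, 10.0, 0.0, 10.0, -5.0],
    [200.0, 150.0, 10.0, 45.0, 20.0, 5.0],
    [300.0, 250.0, 10.0, 90.0, 30.0, 15.0],
]

# Invented robot joint angles (degrees) for the same grid points.
joints = [
    [0.0, -90.0, 90.0, -90.0, -90.0, 0.0],
    [10.0, -85.0, 95.0, -100.0, -90.0, 0.0],
    [20.0, -80.0, 100.0, -110.0, -90.0, 0.0],
]

ranges = column_ranges(poses)
inputs = [scale_row(pose, ranges) for pose in poses]

# Each training sample is an (input tensor, output tensor) pair of flat lists.
dataset = list(zip(inputs, joints))
print(dataset[1])
```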

The output of the first trained model was satisfactory, with error = 0.036832 (less than 1 is considered acceptable). Because the first dataset was small, some deviation was visible; a larger dataset can reduce it.
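The post does not state how this error is computed. Assuming it is a mean squared error over all output values, which is a common choice for regression networks, it could be calculated as in this sketch. The function name and the sample values are illustrative only.

```python
def mean_squared_error(predicted, target):
    """Average of squared differences over every value in every row."""
    total = 0.0
    count = 0
    for p_row, t_row in zip(predicted, target):
        for p, t in zip(p_row, t_row):
            total += (p - t) ** 2
            count += 1
    return total / count


# Invented example: two samples with two output values each.
predicted = [[0.0, 1.0], [2.0, 3.0]]
target = [[0.0, 1.5], [2.0, 2.0]]

error = mean_squared_error(predicted, target)
print(error)  # (0 + 0.25 + 0 + 1) / 4 = 0.3125
```

Because the value depends on the units and scaling of the outputs, an error threshold is only meaningful for a fixed output representation.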

Second experiment

For the second model, all the input data was used (MediaPipe, IMU, Vive tracker position and rotation). This was combined with the robot simulation to get joint data for each grid point and to create the relation between input and output in the neural network.

Because the second model has a larger number of inputs, it is important to find out which data is most effective, which requires trials with different combinations of inputs.
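One way to organize such trials is to enumerate every combination of sensor groups and build the input vector for each one, as sketched below. The group names and column names in `SENSOR_GROUPS` are hypothetical labels chosen for illustration; training and scoring a network for each subset is left out.

```python
from itertools import combinations

# Hypothetical column names for each sensor group (assumptions).
SENSOR_GROUPS = {
    "vive": ["vive_x", "vive_y", "vive_z", "vive_yaw", "vive_pitch", "vive_roll"],
    "imu": ["imu_yaw", "imu_pitch", "imu_roll"],
    "mediapipe": ["wrist_x", "wrist_y", "wrist_z"],
}


def sensor_subsets(groups):
    """List every non-empty combination of sensor group names."""
    names = sorted(groups)
    subsets = []
    for size in range(1, len(names) + 1):
        subsets.extend(combinations(names, size))
    return subsets


def select_columns(sample, groups, subset):
    """Build the input vector for one sample using only the chosen groups."""
    return [sample[col] for name in subset for col in groups[name]]


# Invented sample: every column simply holds its running index as a float.
sample = {}
for cols in SENSOR_GROUPS.values():
    for col in cols:
        sample[col] = float(len(sample))

subsets = sensor_subsets(SENSOR_GROUPS)
for subset in subsets:
    print(subset, len(select_columns(sample, SENSOR_GROUPS, subset)))
```

With three groups this gives seven candidate input sets, each of which would be trained and compared on the same validation data.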

The second model therefore requires more training with a much larger dataset. The error of the neural network was 0.025.

Validation

Validation data was collected after the neural networks were trained, using a process similar to the one used for training. The validation data was then run through the neural network to check its performance.

Robot See Robot Do // SOFTWARE III is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed in the Master in Robotics and Advanced Construction seminar in 2020/2021 by:

Students: Shahar Abelson, Charng Shin Chen, Arpan Mathe

Faculty: Mateusz Zwierzycki

Faculty Assistant: Soroush Garivani