RS.4 Research Studio

ADVANCED INTERACTION

Augmented Senses

Enhancing human capacity for empathetic exchange with nature

Senior Faculty: Luis Fraguada

Expert Faculty: Elizabeth Bigger

Expert Faculty, Studio Support Seminar: Cristian Rizzuti

Kick-Off Workshop Guest: Madeline Schwartzman

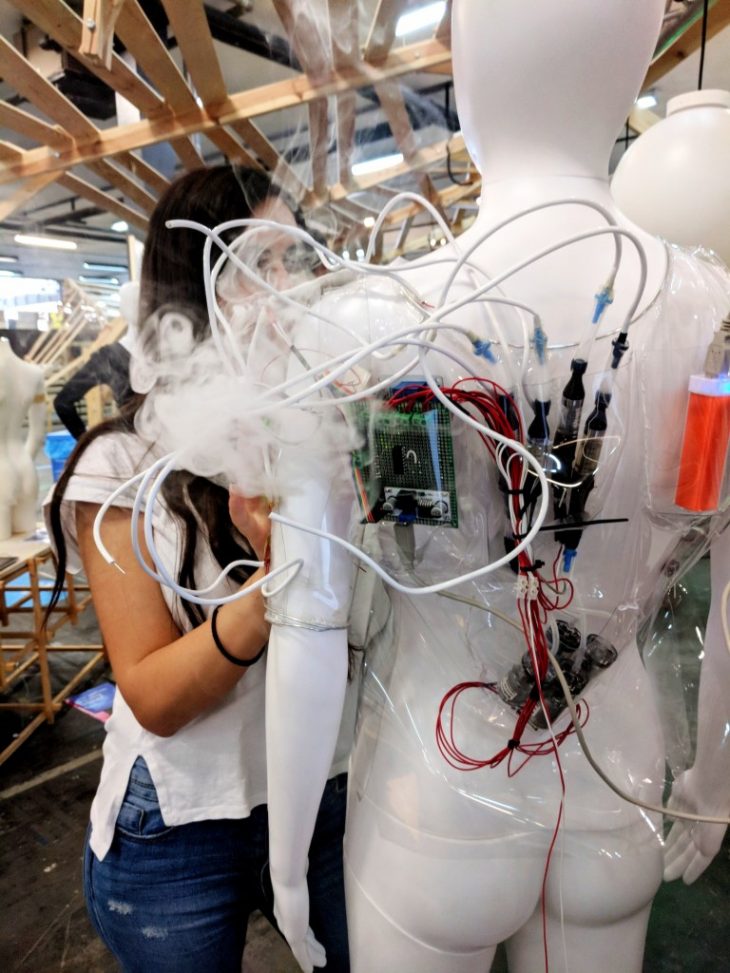

Radio Cosmosuit by Agustina Palazzo, Augmented Senses Studio 2018

Overview

What we experience as reality is dictated by the sensory inputs we are equipped with: touch, smell, vision, sound, taste. Every human interaction, every human endeavour, everything that humanity has achieved and caused as a species has been enabled by using these senses to navigate our environment. Other species have evolved similar senses, but with slight variations that relate to their environment. Generally, vision in animals is driven by the reaction of color receptors to light. Humans typically have three kinds of color receptors, while the Mantis Shrimp has 12. This allows Mantis Shrimp to see multispectral images and polarized light, which gives them a dramatically different way to see the world. Other species have evolved entirely different senses to deal with their particular environment. For example, bees have positively charged mechanoreceptor hairs on their legs which help them navigate to negatively charged flowers. This means that reality is much richer and more dynamic than what we currently experience.

The Augmented Senses agenda seeks proposals for systems, and their related consequences, which augment our senses or give us new senses by which we can experience and describe reality. Such systems could also give senses to otherwise inanimate objects or to other species. The theory behind this is simple: sense what we cannot already sense in order to widen our definition of reality. By widening our definition of reality, we can increase the potential to empathise not just with other humans, but perhaps also with other species and ecosystems.

The work developed during this studio will contribute to the growing body of examples of humans seeking to augment themselves by merging with technology.

An example of an Augmented Sense can be seen in the artist and cyborg, Neil Harbisson.

Neil Harbisson has extended his perception of reality by adding a color sensor to his body. This appendage is fused to his skull and effectively converts color into vibration, such that different colors vibrate at different frequencies. Neil is colorblind, but his impetus for adding a color sense to his body wasn’t to normalize his color blindness. On the contrary, his lack of color sight means that he can discern more shades of grey and is generally more sensitive to depth than people who can see ‘normally’. Adding a color sensor allows him to detect colors in the visible light spectrum as well as beyond what a normal human can see, into the infrared and ultraviolet. Neil describes how he now shares capabilities that other animals and insects might have, giving him an expanded view of reality that his brain had to learn to accept.
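As a rough illustration of converting color into frequency, the sketch below maps the hue of an RGB color onto a tone within a single octave. This is not Harbisson's actual scale: the function name `hue_to_frequency`, the 220 Hz base tone and the one-octave range are all assumptions chosen for the example.

```python
import colorsys


def hue_to_frequency(r, g, b, base_hz=220.0):
    """Map an RGB color (0-255 per channel) to a tone in one octave above base_hz.

    Hypothetical mapping for illustration only; base_hz is an assumed value.
    """
    hue, _saturation, _value = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # hue lies in [0, 1); spreading it exponentially over one octave means
    # equal steps in hue are heard as equal steps in pitch.
    return base_hz * (2.0 ** hue)


for name, rgb in [("red", (255, 0, 0)), ("green", (0, 255, 0)), ("blue", (0, 0, 255))]:
    print(name, round(hue_to_frequency(*rgb), 1), "Hz")
```

In a wearable, the resulting frequency could drive a vibration motor or bone-conduction transducer rather than a speaker. Note that grey tones have no hue and all map to the base tone here, so a real design would need to handle them separately.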

This second edition of the Augmented Senses studio follows a successful series of projects from the first edition and looks to expand on the capabilities of those projects through more rigorous investigation into the consequences of the proposed senses. Studio researchers will design and implement robust body architectures that are ergonomic and wearable for extended periods of time. Throughout the studio we will work with wearable technology experts to develop and refine the project proposals.

The projects developed will be personal in nature and will address an individual agenda (to be potentially combined with a second researcher).

Research Objectives

1. To augment an existing sense or invent an entirely new sense, enabled by physical computing.

2. To experience and document this sense in such a manner that the consequences and benefits are discovered.

3. Understand the nature of sensing, dealing with noise filtering and signal processing.

4. Understand existing and developing sensing technology.

5. Learn how to format research into an academic submission to an ACM conference.

All projects will be revised and eventually formatted for submission to the Design Exhibition of the International Symposium on Wearable Computers (ISWC) 2019 conference.

6. To develop physical computing skills which can enable advanced sensory experiences and robust data communication protocols.

7. To develop a wearable technology skill set which includes designing solutions which take into account the body morphology in relation to the technical specification.

8. To understand working with the body and within the body. To understand 3D design with the body and the effects.

9. To collect data and complete data analysis and visualization of the research and trial periods.
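Objective 3 mentions noise filtering and signal processing. One of the simplest filters to try on a noisy sensor stream is an exponential moving average, sketched below. The function name and the default `alpha` value are assumptions for illustration. The studio does not prescribe a particular filter.

```python
def exponential_moving_average(samples, alpha=0.2):
    """Smooth a sequence of sensor readings.

    alpha (assumed default 0.2) sets how much each new reading counts:
    smaller values smooth more heavily but respond more slowly.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    estimate = None
    for reading in samples:
        # The first reading initialises the estimate; later ones are blended in.
        if estimate is None:
            estimate = reading
        else:
            estimate = alpha * reading + (1 - alpha) * estimate
        smoothed.append(estimate)
    return smoothed


# A single spike in an otherwise flat signal is damped rather than passed through.
print(exponential_moving_average([0, 0, 100, 0, 0], alpha=0.5))
```

The same update rule fits in a few lines of an Arduino loop, since it only needs to remember the previous estimate.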

Specifications

Throughout the studio we will take advantage of a host of tools and skills.

TOOLS

- Arduino / Raspberry Pi

- Processing

- Rhinoceros 3D / Grasshopper

- JavaScript / Python / C#

- D3.js

- 3D body scanning

- Digital Fabrication Tools

MATERIALS

- Textiles, e-textiles, smart textiles

- Soft-sensors

TECHNIQUES

- Wireless sensor networks

- 3D human centric design

- Garment Pattern Drafting and designing for embedding technology in garments

- Data visualisation and analysis

///

I. Kick-Off Workshop: Finding Senses

December 17-19, 2018, Valldaura Labs, with guest faculty Madeline Schwartzman

The Finding Senses studio kick-off workshop will challenge researchers to discover and augment their senses in the Valldaura Forest Lab environment. Studio faculty will be joined by Madeline Schwartzman, a NYC-based writer, filmmaker, and architect who has authored two books on the future of the human sensorium. The workshop will focus on how we can manipulate our own proprioception with simple materials to give us an expanded sense of space and motion. These personal manipulations will be the starting point of reflection for the studio work.

Madeline Schwartzman is a New York City writer, filmmaker and architect whose work explores human narratives and the human sensorium through social art, book writing, curating and experimental video making. Her book See Yourself Sensing (Black Dog Publishing, London, 2011) collects the work of artists, interaction designers, architects and scientists who speculate on the future of the human sensorium through wearables, devices, head gear and installations. Her forthcoming book, See Yourself X: Human Futures Expanded (Black Dog Press, London, November 2018), looks at the future of the human head, using fashion, design, and technology to explore how we might extend into space, plug our head in, or make it disappear altogether.

http://www.madelineschwartzman.com/

II. Term 2 – Concept Development and Prototyping

January – April, 2019

Throughout term two the research studio will focus on defining the project concept and prototyping variations of sensory devices which can be worn for extended periods of time. Each studio session will begin with a ‘pinup’, in which each student briefly presents the current state of their research. The studio will then break out into lectures, tutorials and project work time.

Studio Support Seminar: Soft Sensors and Visual Programming

Faculty: Cristian Rizzuti

The studio support seminar will focus on developing new kinds of sensors that can measure different kinds of physiological phenomena on the human body. The seminar will give researchers an opportunity to consider how their project could benefit from specific sensor development. Visual Programming will be employed to facilitate the understanding and calibration of sensor signals and to provide a platform to develop visualizations and effects based on the sensor inputs.
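The seminar itself uses visual programming, but the idea of calibrating a sensor signal can also be expressed in a few lines of text-based code. The sketch below rescales a raw reading into the range 0.0 to 1.0 using a minimum and maximum recorded during a calibration pass. The function name and the example values (a 10-bit analog reading) are assumptions, not part of the seminar material.

```python
def calibrate(raw, raw_min, raw_max):
    """Scale a raw sensor reading into 0.0-1.0.

    raw_min and raw_max are the extremes observed during a calibration pass
    (for example, a stretch sensor fully relaxed and fully extended).
    Readings outside that span are clamped.
    """
    if raw_max <= raw_min:
        raise ValueError("raw_max must be greater than raw_min")
    normalised = (raw - raw_min) / (raw_max - raw_min)
    return min(1.0, max(0.0, normalised))


# Assumed example: a soft sensor that read 180 when relaxed and 760 when stretched.
print(calibrate(470, raw_min=180, raw_max=760))
```

Working with normalised values makes it easier to swap one sensor for another without rewriting the visualization or actuation code that consumes the signal.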

III. Research Trip: Wearable Technology and Senses, The Netherlands

April 8-14th, 2019, the Netherlands

The Augmented Senses studio research trip will focus on The Netherlands, where we will visit institutions and practitioners at the forefront of wearable technology research. During the trip we’ll have a workshop with the team of the Wearable Senses Lab at TU Eindhoven.

IV. Break: 19-22 April 2019

V. Production

VI. Final Exhibition

///

Previous Projects

Radio Cosmosuit by Agustina Palazzo

Radio Cosmosuit is a wearable that lets the user hear radio frequencies across a broad spectrum and, most importantly, some of the radiation arriving from space, such as that of the sun. With the antenna on the back, the user receives frequencies according to their own movements, which makes it possible to interact with the environment. The radio frequencies are digitally decoded into sounds and images on a Raspberry Pi.

The End of Emotional Privacy by Martina Soles

This project has been developed as a wearable bodysuit that explores the possibility of reading emotions more accurately, using an infrared sensor to simulate thermal vision. The sensor works as a thermal camera that reads an array of 16 temperatures, each of which is relayed to one of 16 heat pads precisely located on the back of the bodysuit. Like Morse code, but with heat.
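The one-to-one mapping from 16 sensed temperatures to 16 heat pads could be sketched as follows. This is not the project's actual code: the temperature range (20 to 40 °C), the 8-bit output level and the function name are all assumptions made for the example.

```python
def temperatures_to_pad_levels(temps_c, t_min=20.0, t_max=40.0, max_level=255):
    """Convert 16 temperature readings into 16 heat-pad drive levels.

    t_min, t_max and max_level are assumed values: readings at or below t_min
    leave the pad off, readings at or above t_max drive it fully.
    """
    if len(temps_c) != 16:
        raise ValueError("expected exactly 16 temperature readings")
    levels = []
    for temp in temps_c:
        fraction = (temp - t_min) / (t_max - t_min)
        fraction = min(1.0, max(0.0, fraction))  # clamp to the valid range
        levels.append(round(fraction * max_level))
    return levels


# Assumed example frame: a warm spot (a face, say) on a cool background.
frame = [22.0] * 16
frame[5] = 36.5
print(temperatures_to_pad_levels(frame))
```

Each output level would then set the PWM duty cycle of the corresponding pad. Any real implementation would also need a hard limit on pad temperature for the wearer's safety.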

Microfluidic Skin by Susannah Misfud

This ‘second skin’ contains flexible microfluidic patches made of silicone that are connected to tubes of cabbage dye, which changes colour when mixed with other test fluids. A skin able to determine the pH of anything.

Scent Track by Maria Abou-Meri

Can we augment the sense of smell? Can a smell be a trigger to enhance social interaction?

Can a simple touch be translated to a note then smell?

A touch on my arm can say more than a greeting, it can play a note, a smell and a feeling. Fluid, light, shaped, is the smoke and the essence, but also the wearable veins of my body. Through their implementation on my skin up to the highest point of my body and soul, I will define your ID, play your personalized note, emit an essence and engrave our memory.

I believe that fragrance can go beyond a signature, as it stimulates feelings which are based on people and on social interaction. Trace your hand on my shoulder so your smell can spark a flurry of emotional memories.

Scent Track is this new social experience, and the reality outside the boundaries and limitations of material and energy.

Dystopian Places by Yiannis Vogdanis

Dystopian Places is a compilation of wearables that, when interacted with, simulate various environmental problems. The simulations allow humans to experience what the natural world around us is experiencing as a result of our actions. For example, to address the problem of water hypoxia, a mask, once worn, will restrict the airflow the wearer is receiving. The reduction in oxygen will mimic the oxygen deprivation of a selected area on a virtual map. The human wearing the mask will then understand what it would be like to be a fish living in an area with water hypoxia. Sometimes, we need to feel to understand. Dystopian Places is designed to have an impact that surpasses traditional forms of learning. We are at a point where action needs to be taken, at a large scale. Dystopian Places hopes to be a piece of the puzzle in moving towards a more environmentally conscious world.