Abstract

The Manta is a project that attempts to let us experience our surroundings from a 360° perspective. Take a look around the room – what do you see? All those colours, the walls, the windows – everything seems so self-evident, just so there. It’s strange to think that how we perceive this rich milieu boils down to light particles, called photons, bouncing off these objects and into our eyes.

This photonic barrage gets soaked up by approximately 126 million light-sensitive cells. The varying directions and energies of the photons are translated by our brain into different shapes, colours, brightness, all fashioning our technicolour world.

Wondrous as it is, our sense of vision is clearly not without certain limitations.

Some form of visual acuity is present in all living creatures, yet with the human optical system more than half of our environment goes unperceived at any moment. A few creatures, such as chameleons, can use both monocular and binocular vision and can rotate their eyes like an extended eye, so they experience the world in a different manner. The idea behind the project originated with my own problematic encounters in my surroundings, which arose because I was unable to see what was around me. The experience is created by the device’s live feed from two 180° cameras connected to a Raspberry Pi, which is then relayed to two HDMI screens for visual feedback.

Motivation

The motivation for the Manta project comes from two different experiences that share a common basis in vision-related incidents. The first was underwater diving, where the helmet worn while diving affected my stereoscopic acuity. The second was being in a tree, unaware of a bear crossing beneath the branch I occupied.

Both incidents of impaired vision led to the desire to sense or view my entire surroundings. I found that stimulation of the visual system plays the principal role in engaging the senses, with hearing as the secondary sense to take into account. Smell is also of great importance; however, it is not currently considered in many virtual reality (VR) systems because it is difficult to implement. Visual information is considered the most important aspect in creating the illusion of immersion in a virtual world and in sensing the surrounding environment.

Project Description

As a wearable, the Manta is about capturing the frames around us and placing them in an immersive environment to augment the sense of direction and spatial depth. By physically engaging with spaces through this newfound vision, we can study the interaction and orientation behaviour of the user. This information is particularly important to the brain for navigation and spatial memory. The prototype would enable a new form of interaction between users and their surroundings.

Research Claims

Claim #1: Change in sense of direction and spatial depth will occur.

Claim #2: The new visual medium will not cause a sensory overload after a period of time.

Project Research and Development

The project began by determining what type of visual interface was needed to deliver the panoramic experience and to understand spatial and directional awareness of the surroundings. After researching reflective surfaces and stimulation-based sensing, I settled on computer vision for project development. Since the project is vision based, the initial prototype took the form of a headset. The idea for the first iteration was to use multiple cameras with a Raspberry Pi, using OpenCV to stitch their feeds into a panoramic interface. As a foundation, basic Python programming and digital fabrication for wearable headsets were studied. The later iterations took shape through the study of various living creatures and how they experience reality through their vision. Various animal vision systems suggested the idea of having multiple cameras and a single interface for all of them. Later, technical limitations shaped the project and led to the final form of the prototype, which aims to blend ergonomic function and aesthetic design seamlessly.

Sensing Phenomenon

Eyes in the back of your head – a headset that gives you a 360-degree field of vision.

The Manta system captures images from every direction around the wearer and transforms them into something the human visual system can comprehend. The Manta’s visual system takes 15 to 20 minutes to acclimate to, after which the wearer senses a new form of direction, space and surroundings. The user’s orientation is augmented as the brain attempts to comprehend the information it receives from the new visual system.

Correlating the spatial environment is difficult because the perceived depth of the surroundings changes with movement. Understanding the newfound spatial depth of vision and relating it to the user’s sense of direction is the way to understand and experience this new vision. The stimulation of the visual system plays a principal role in engaging the senses and is an effective platform for gaining a sense of one’s surroundings.

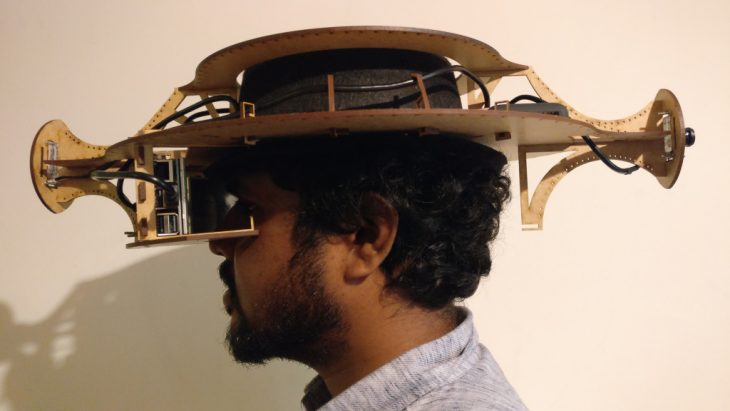

Concept Development – First Iteration

The Manta started as a project whose end goal was an extended view that stimulates the visual system to attain a higher sense of spatial relation and direction. The objective of the first iteration was to explore the idea through simple prototyping. The input was multiple cameras and the output was the camera feed. The initial stage started with just a hat hosting all of the components, built around a Raspberry Pi with two Pi cameras connected. Through an HDMI adaptor I connected two screens, relayed each Pi camera’s feed to its own screen, and tried to experience that vision. The cameras were placed at different locations on the hat along with the Raspberry Pi, and the two HDMI screens were placed in front of the eyes. The main issue was that the project became too technical: there were a lot of wires and hardware involved for the cameras, the battery and the HDMI screens, which had to be reduced or integrated further in the next iteration.

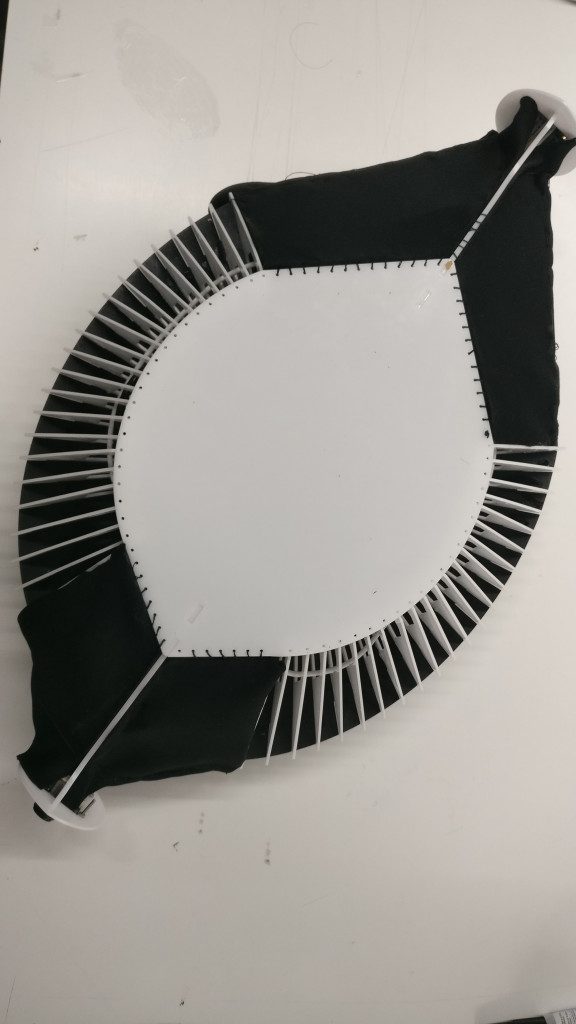

Design Development – Second Iteration

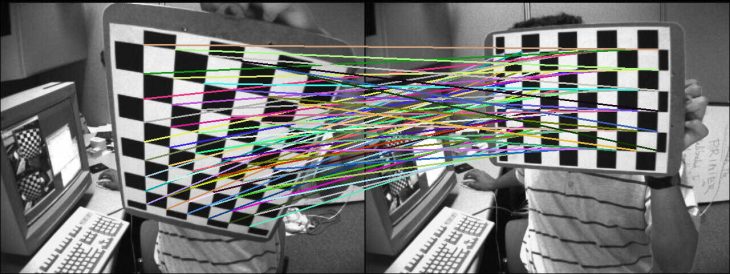

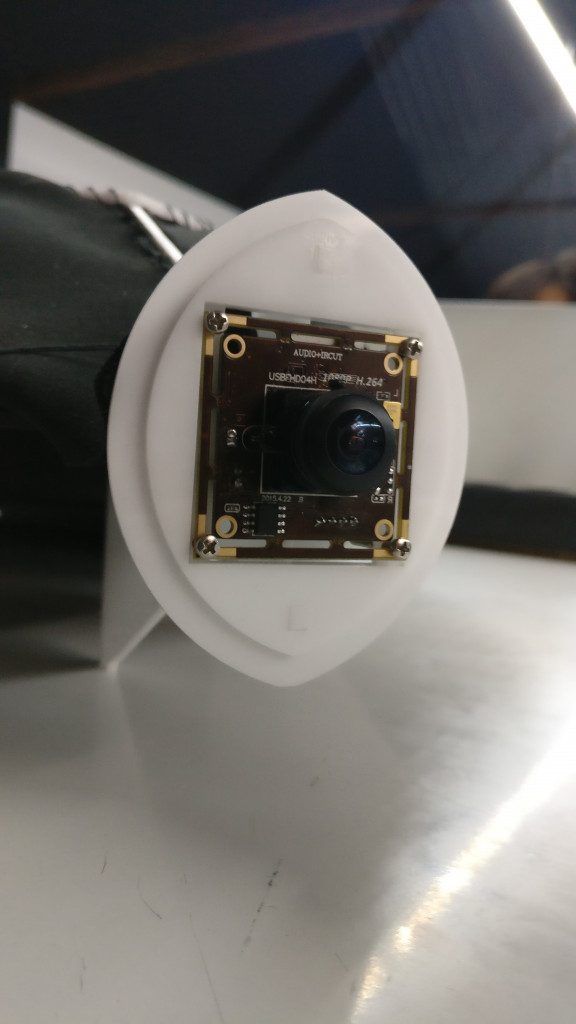

In the second iteration, three 180-degree USB cameras were placed 120 degrees apart, with the aim of stitching the three feeds together using the 60-degree overlap between neighbouring cameras. The hat shape of the first prototype changed to a triangulated, manta-shaped form. The prototype was made using MDF as a planar surface holding three laser-cut camera holders. The battery was placed behind the head to act as a counterweight for the Raspberry Pi and the display screens.

The feeds from the three cameras could not be stitched together, and they also strained the processor, since streaming alone took a lot of processing power. The code was written in Python with OpenCV and used Python classes designed to improve the FPS processing rate when accessing the built-in/USB webcams. Keypoint detection, local invariant description, keypoint matching, and homography estimation are computationally expensive, and running the full stitching pipeline on each set of frames makes it nearly impossible to work in real time.
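One common way to raise the frame rate when polling webcams is to read frames on a background thread, so the main loop never blocks on camera I/O. The sketch below illustrates that general idea only; whether the classes used in the project worked this way is an assumption. `ThreadedFrameReader` is a hypothetical name, and `capture` stands for any object whose `read()` method returns an `(ok, frame)` pair, as `cv2.VideoCapture` does.

```python
import threading


class ThreadedFrameReader:
    """Keep the most recent frame available without blocking the caller."""

    def __init__(self, capture):
        # `capture` is assumed to expose read() -> (ok, frame).
        self._capture = capture
        self._lock = threading.Lock()
        self._frame = None
        self._running = False
        self._thread = None

    def start(self):
        """Begin polling the capture object on a daemon thread."""
        self._running = True
        self._thread = threading.Thread(target=self._update, daemon=True)
        self._thread.start()
        return self

    def _update(self):
        while self._running:
            ok, frame = self._capture.read()
            if not ok:
                # In this sketch a failed read simply ends the polling loop.
                self._running = False
                break
            with self._lock:
                self._frame = frame

    def read(self):
        """Return the latest frame, or None if nothing has arrived yet."""
        with self._lock:
            return self._frame

    def stop(self):
        """Ask the polling thread to finish and wait briefly for it."""
        self._running = False
        if self._thread is not None:
            self._thread.join(timeout=1.0)
```

With OpenCV installed, `ThreadedFrameReader(cv2.VideoCapture(0)).start()` would wrap the first attached camera. Using one reader per camera keeps the feeds independent, although it does not reduce the cost of the stitching itself.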

Because the cameras are fixed and do not move relative to each other, the homography matrix only needs to be estimated once. After the initial estimation, the same matrix is reused to transform and warp the images into the final panorama. Doing so skips the computationally expensive steps of keypoint detection, local invariant feature extraction, and keypoint matching on each set of frames.
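The estimate-once, reuse-afterwards idea can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the project's code: `CachedHomography`, `apply_homography` and `fake_estimator` are hypothetical names, and the estimator is a stand-in that returns a fixed translation. In an OpenCV pipeline the estimator would typically wrap feature matching followed by `cv2.findHomography`, and the warp would be done with `cv2.warpPerspective`.

```python
from typing import Callable, List, Optional, Tuple

Matrix = List[List[float]]
Point = Tuple[float, float]


def apply_homography(H: Matrix, point: Point) -> Point:
    """Map a pixel through a 3x3 homography using homogeneous coordinates."""
    x, y = point
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (xh / w, yh / w)


class CachedHomography:
    """Run the expensive estimator on the first frame pair, then reuse the result."""

    def __init__(self, estimator: Callable[[object, object], Matrix]):
        self._estimator = estimator
        self._H: Optional[Matrix] = None
        self.estimations = 0  # counts how often the expensive step actually ran

    def get(self, frame_a, frame_b) -> Matrix:
        if self._H is None:
            self._H = self._estimator(frame_a, frame_b)
            self.estimations += 1
        return self._H

    def reset(self) -> None:
        """Force a new estimate, e.g. after a camera has been remounted."""
        self._H = None


def fake_estimator(frame_a, frame_b) -> Matrix:
    # Stand-in for real feature matching: a shift of 5 px right and 2 px down.
    return [[1.0, 0.0, 5.0], [0.0, 1.0, 2.0], [0.0, 0.0, 1.0]]


cache = CachedHomography(fake_estimator)
for _ in range(3):                      # three consecutive frame pairs
    H = cache.get("left frame", "right frame")

print(cache.estimations)                # 1: the estimator ran only once
print(apply_homography(H, (1.0, 1.0)))  # (6.0, 3.0)
```

The cache is only valid while the cameras stay rigidly mounted; `reset()` is included for the case where the rig is adjusted and the geometry changes.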

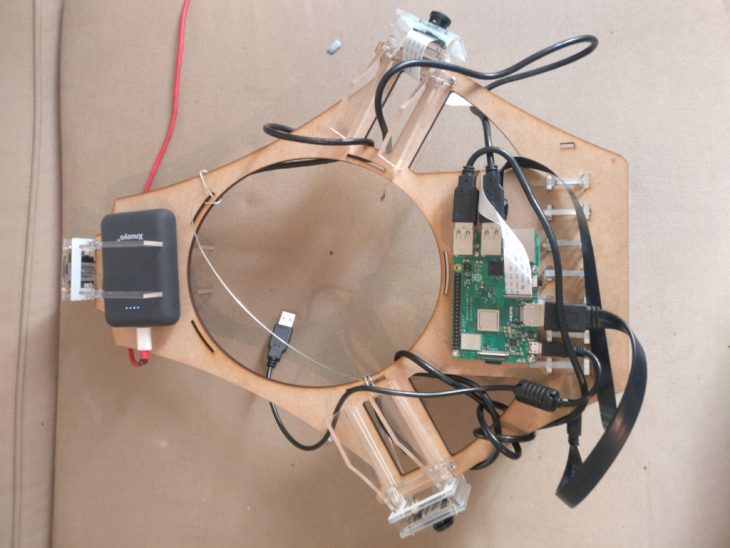

Production Development – Third Iteration

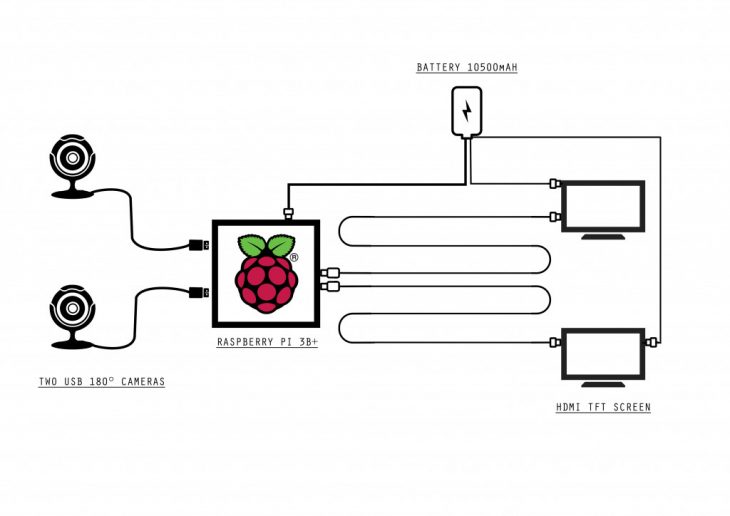

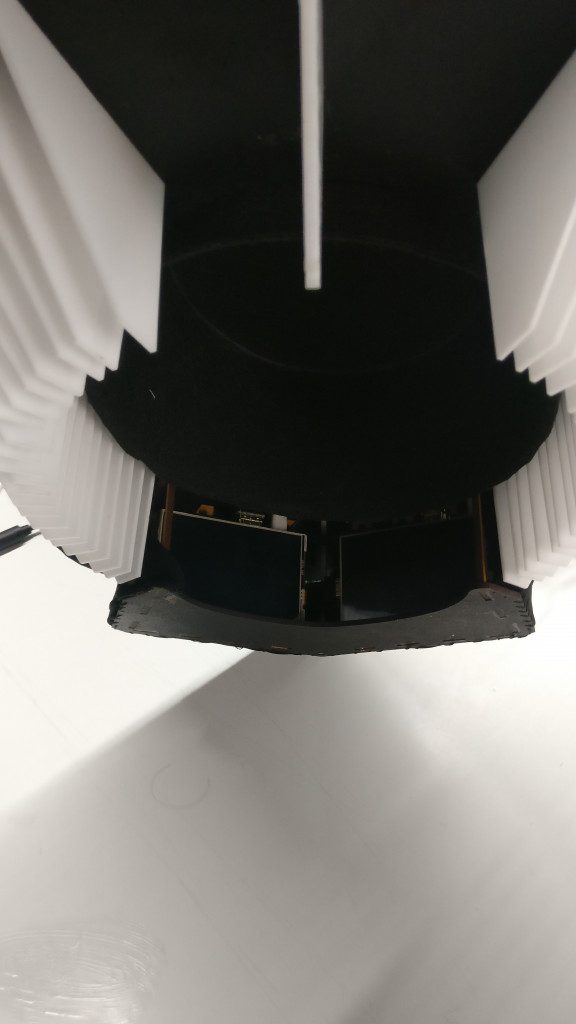

Since stitching the feeds together had become a computationally expensive task, the way forward was to simplify the final prototype. For the third iteration the camera count was reduced to two, placed opposite each other at the front and back, each covering 180 degrees. The wearable device was made of MDF to hold the cameras, the screens, a battery and the Raspberry Pi board.
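The difference between the three-camera and two-camera layouts can be checked with a little arithmetic. The sketch below assumes idealised cameras spaced evenly around a circle, each with exactly the stated horizontal field of view; `ring_coverage` is a hypothetical helper, not part of the project code.

```python
def ring_coverage(num_cameras: int, fov_deg: float) -> dict:
    """Overlap, gap and total coverage for cameras spaced evenly on a circle."""
    if num_cameras < 1 or fov_deg <= 0:
        raise ValueError("need at least one camera with a positive field of view")
    spacing = 360.0 / num_cameras            # angle between neighbouring cameras
    overlap = max(0.0, fov_deg - spacing)    # region shared by two neighbours
    gap = max(0.0, spacing - fov_deg)        # blind region between two neighbours
    covered = 360.0 - num_cameras * gap      # total angle seen by at least one camera
    return {"spacing": spacing, "overlap": overlap, "gap": gap, "covered": covered}


second_iteration = ring_coverage(3, 180.0)   # three cameras, 120 degrees apart
third_iteration = ring_coverage(2, 180.0)    # two cameras, front and back

print(second_iteration)
print(third_iteration)
```

Under these assumptions the three-camera layout has a 60-degree overlap between neighbours, while the two-camera layout still covers the full 360 degrees but with no overlap. With no shared region there is nothing for feature matching to work on, which fits the choice of showing each feed on its own screen rather than stitching.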

The output of each camera is relayed in real time to its individual screen. This gives an extended viewing capability although not a panoramic screen. The circuitry is integrated within a textile cover.

Circuit

Final Iteration

Data Collection

Data collection involved writing down the experiences and documenting how stimulation of the visual system was understood by the brain. The visual feed of the cameras was always recorded. The other part of the data collection was to note how long it took to get used to the new sense and how adaptation occurred across different kinds of interaction.

There were two phases of data collection:

The first phase involved experiencing and adapting to the vision and writing about it. In this phase only 180 degrees of the surroundings was captured and relayed to the screens. In initial use, the new vision caused a sense of overload, even stress, and a loss of my sense of direction. After wearing the device for an extended period, my sense of direction and orientation started to process the information in an understandable manner.

The second phase involved interaction with the objects and people surrounding me. Its purpose was to understand the depth of the new vision and how movement works within it. Since the interface has a considerably larger field of vision, the brain’s understanding and spatial memory had to adapt. The data collection involved recording movements and interactions while walking on the street and inside living spaces.

Conclusion

The Manta project enables an alternate sense of spatial understanding and interaction through the visual medium. It stresses again that stimulation of the visual system plays an important role in engaging the senses and in understanding space and the interaction with the user’s surroundings. Human intuition starts to work with the newfound vision, gets used to it within 15 to 20 minutes, and begins to react to the space.

Acknowledgements

I would like to thank Elizabeth Bigger and Luis Fraguada for guiding us in a direction from architecture to wearables in a really comfortable way. Their guidance on planning and documentation helped us move the project forward in a well-organised manner. They provided plenty of resources and references at every stage of progress to get inspired by and learn from. I would also like to thank Christian Rizzuti for providing more technical insight, which helped us a lot in building the project. Thanks also to Adrian, the owner of pyimagesearch, whose guidance helped me with the programming.

Augmented Senses Studio – Master of Advanced Architecture

Institute for Advanced Architecture of Catalonia

Tutors: Elizabeth Bigger and Luis Fraguada

Physical computing expert: Christian Rizzuti