Plant_D is a rover-based workflow that applies image processing and machine learning to monitor plant health across a city’s landscape.

The workflow is built around a multi-node system running on the ROS middleware framework.

In Barcelona, parks are assigned a level of maintenance based on several parameters:

- Frequency and cultural relevance of green space

- Topological characteristics of green space

- Typology of plantations

- Severity of disease/plague

“A comprehensive control of pests is done on all trees, even though the implementation of ecological phytosanitary treatments and biological control it’s slow.”

Industry Examples

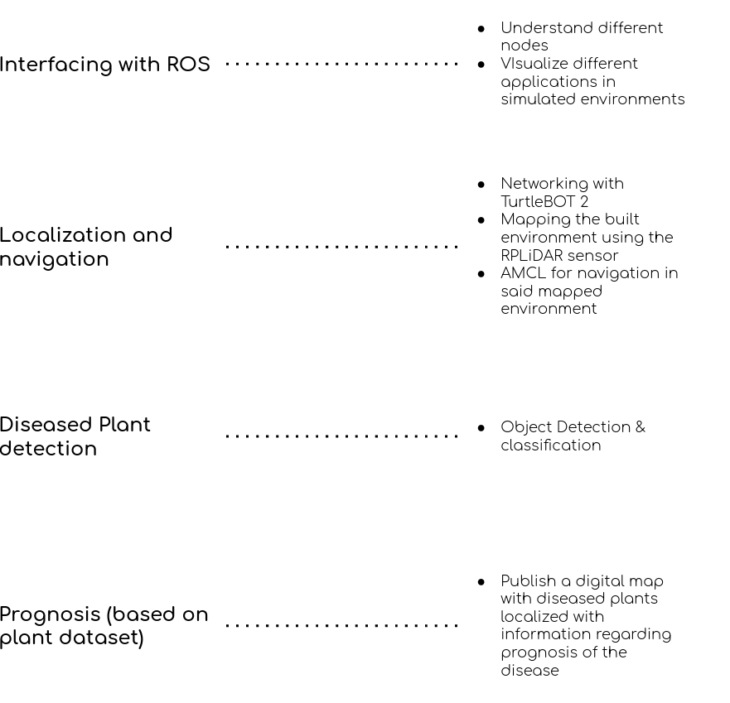

Objectives

Pseudocode

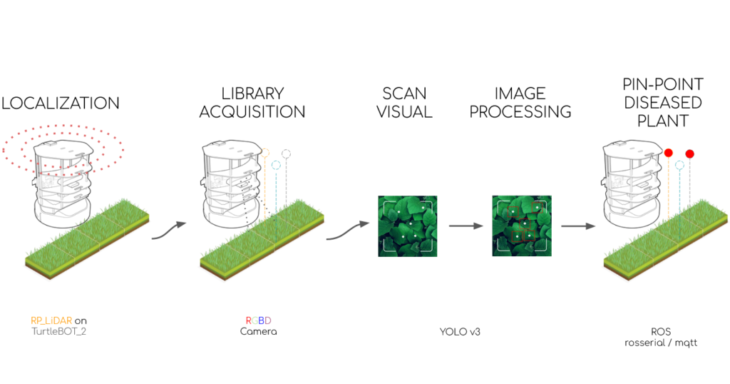

The pseudocode describes the step-by-step process of the project workflow.

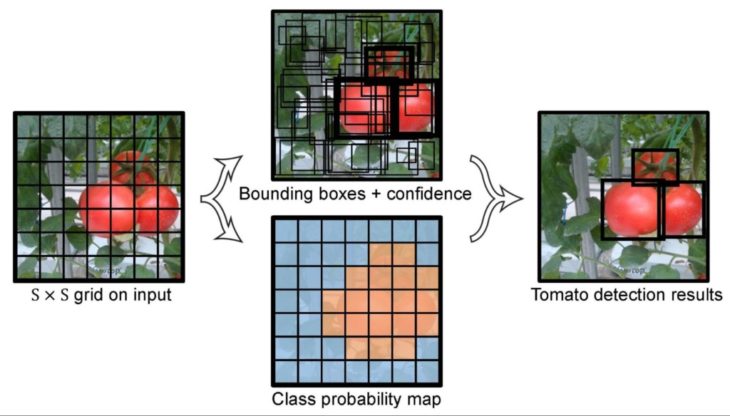

The workflow begins by localizing the rover in its environment, which in turn allows it to navigate autonomously. We then gather data for our plant library so that plants can be classified easily later. This library is then processed with the YOLO algorithm to detect healthy and unhealthy plants.

The results are then relayed back onto the digitally scanned environment, together with the library information, to mark the location of each unhealthy plant.

Let’s look more closely at the nitty-gritty of each subsection of the pseudocode.

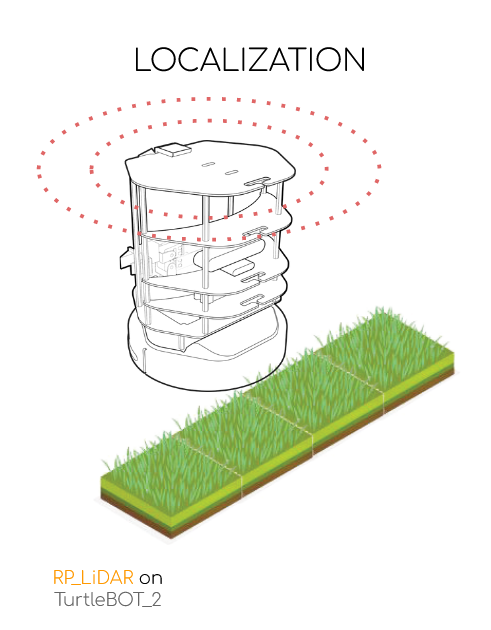

Localization

The process of localization of the TurtleBot2 includes two main parts:

- G-Mapping / SLAM G-Mapping

- Navigation (AMCL) [http://wiki.ros.org/amcl]

Mapping the environment and then navigating the scanned map requires two computers and a 2D LiDAR sensor mounted on the TurtleBot2.

Mapping Workflow

Terminal ping nuc

ssh -Y iaac@nuc/nuc1*

Terminal 1 sudo date

sudo date --set="…"

Terminal 2 sudo date

sudo date --set="…"

terminator

Terminal 1 cd turtlebot_ws

source devel/setup.bash

roslaunch turtlebot_bringup minimal_lidar.launch

Terminal 1 cd trtlbot2_ws

source devel/setup.bash

roslaunch turtlebot_navigation turtlebot_gmapping.launch

Terminal 2 cd trtlbot2_ws

source devel/setup.bash

roslaunch turtlebot_teleop keyboard_teleop.launch

Terminal 3 rviz

Mapping Success

Save the map before closing the GMapping node.

Terminal 4 cd trtlbot2_ws/src/turtlebot_apps/turtlebot_navigation/maps

rosrun map_server map_saver -f <name of your map>
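`map_saver` writes two files: an occupancy-grid image (`<name of your map>.pgm`) and a small metadata file (`<name of your map>.yaml`). As a minimal sketch, assuming the standard `map_server` metadata layout, the snippet below reads the fields that relate map pixels to world coordinates. The helper name `parse_map_yaml` and the sample values are illustrative and not taken from the project, and the parser only handles this flat key/value layout; a full YAML library would be the more robust choice.

```python
import ast

def parse_map_yaml(text):
    """Parse the flat "key: value" metadata saved next to a map image.

    Handles only the simple layout used by map_server metadata files;
    it is not a general YAML parser.
    """
    meta = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or ":" not in line:
            continue
        key, value = line.split(":", 1)
        value = value.strip()
        try:
            # Numbers and lists such as [-10.0, -10.0, 0.0] parse as literals.
            meta[key.strip()] = ast.literal_eval(value)
        except (ValueError, SyntaxError):
            meta[key.strip()] = value  # keep plain strings (e.g. file names)
    return meta

# Illustrative metadata; real values come from the saved .yaml file.
SAMPLE = """\
image: park_map.pgm
resolution: 0.050000
origin: [-10.000000, -10.000000, 0.000000]
negate: 0
occupied_thresh: 0.65
free_thresh: 0.196
"""

meta = parse_map_yaml(SAMPLE)
print(meta["resolution"], meta["origin"])
```

Here `resolution` is the size of one pixel in metres and `origin` is the pose of the lower-left pixel of the map.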

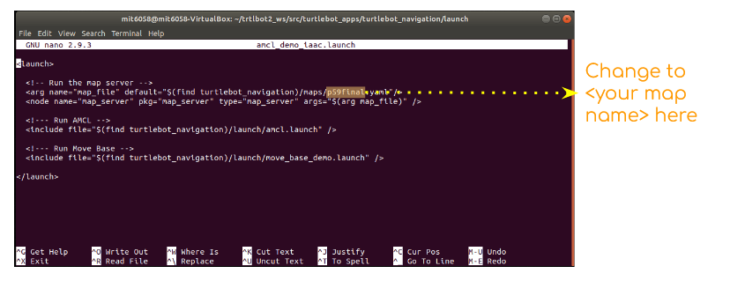

Navigation Workflow

Terminal 1 cd trtlbot2_ws/src/turtlebot_apps/turtlebot_navigation/launch

sudo nano amcl_demo_iaac.launch <to open localization launch file>

Terminal 1 cd trtlbot2_ws/

source devel/setup.bash

roslaunch turtlebot_navigation amcl_demo_iaac.launch

Terminal 2 rviz

Add: From topic > global costmap > map > LaserScan

From rviz > TF
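AMCL reports the robot pose with its orientation stored as a quaternion rather than a heading angle. For a ground robot that only rotates about the vertical axis, the conversion in both directions can be sketched as below. The function names are illustrative; inside a ROS node one would more likely use the helpers in `tf.transformations`.

```python
import math

def yaw_to_quaternion(yaw):
    """Quaternion (x, y, z, w) for a rotation of `yaw` radians about z."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))

def quaternion_to_yaw(x, y, z, w):
    """Heading in radians recovered from a quaternion (x, y, z, w)."""
    siny_cosp = 2.0 * (w * z + x * y)
    cosy_cosp = 1.0 - 2.0 * (y * y + z * z)
    return math.atan2(siny_cosp, cosy_cosp)

q = yaw_to_quaternion(math.pi / 2)  # quarter turn to the left
print(q, quaternion_to_yaw(*q))
```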

Library Acquisition

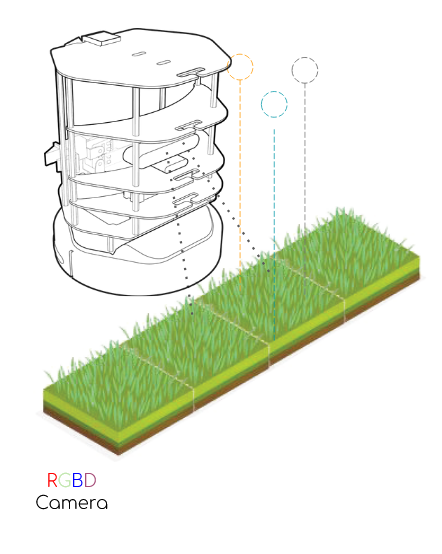

Plants are recorded with an RGB-D camera on board the TurtleBot2 for later processing.

Processing

The next step is to scan through the acquired data and process it with YOLOv4.

Dataset: 2000 images of healthy and unhealthy plants collected from Google Images, Reddit, and Kaggle [https://www.kaggle.com/russellchan/healthy-and-wilted-houseplant-images/version/1?select=houseplant_images]
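Darknet-style YOLO training expects one text label file per image, with each line holding a class index followed by the box centre and size, all normalised by the image dimensions. A hedged sketch of producing such a line follows; the class list, its order, and the pixel box are made up for illustration and are not taken from the dataset above.

```python
CLASSES = ["healthy", "unhealthy"]  # hypothetical class order

def to_yolo_line(label, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2.0 / img_w   # box centre, normalised to 0..1
    y_c = (y_min + y_max) / 2.0 / img_h
    w = (x_max - x_min) / img_w           # box size, normalised to 0..1
    h = (y_max - y_min) / img_h
    return "%d %.6f %.6f %.6f %.6f" % (CLASSES.index(label), x_c, y_c, w, h)

line = to_yolo_line("unhealthy", (100, 50, 300, 250), 640, 480)
print(line)
```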

Code: Training weights, training code, labels, and config files were prepared in advance. [https://github.com/apoon2/Plant_Health_Classification]

Remote computing: The code was run remotely on a GPU through Google Colab (The AI Guy) [https://colab.research.google.com/drive/1xdjyBiY75MAVRSjgmiqI7pbRLn58VrbE?hl=es#scrollTo=M_btEC1N-YkS]

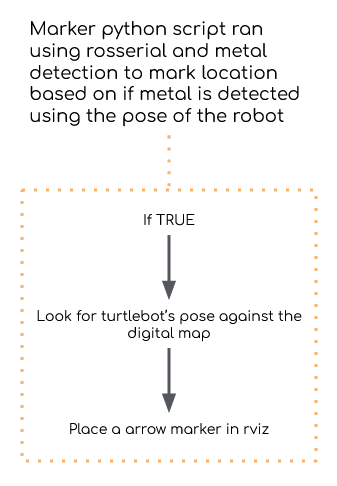
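Once the detector has run, each image yields a list of detections: a class label, a confidence score, and a bounding box. A minimal sketch of picking out the plants to flag is shown below. It assumes hypothetical class names `healthy` and `unhealthy` and an arbitrary confidence threshold of 0.5; neither is taken from the project’s configuration.

```python
from collections import namedtuple

# One detection: class label, confidence in 0..1, pixel box (x1, y1, x2, y2).
Detection = namedtuple("Detection", ["label", "confidence", "box"])

def flag_unhealthy(detections, min_confidence=0.5, target="unhealthy"):
    """Return detections of the target class at or above the threshold."""
    return [d for d in detections
            if d.label == target and d.confidence >= min_confidence]

# Illustrative detections, not real model output.
detections = [
    Detection("healthy", 0.91, (12, 30, 140, 220)),
    Detection("unhealthy", 0.78, (160, 42, 300, 240)),
    Detection("unhealthy", 0.31, (310, 60, 400, 200)),  # below threshold
]

flagged = flag_unhealthy(detections)
print(flagged)
```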

Mapping Unhealthy Plants

The processing results are then relayed back to the robot, which runs a Python script that reads the robot’s pose in the scanned environment and marks it on the digitally scanned map.
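The marking step comes down to converting a pose in map coordinates (metres) into a pixel of the saved occupancy image. The sketch below shows that conversion, assuming the standard `map_server` convention that the map origin is the world position of the lower-left pixel, and that image rows are stored top to bottom. The function names, the sample resolution and origin, and the plain list-of-lists grid are illustrative; this is not the project’s script.

```python
import math

def world_to_pixel(x, y, origin, resolution, height):
    """Map coordinates in metres -> (row, col) in the map image."""
    col = math.floor((x - origin[0]) / resolution)
    # Image row 0 is the top of the map, so flip the y axis.
    row = height - 1 - math.floor((y - origin[1]) / resolution)
    return row, col

def mark(grid, row, col, value=1):
    """Mark a cell if it lies inside the grid; return True on success."""
    if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
        grid[row][col] = value
        return True
    return False

resolution = 0.05          # metres per pixel (illustrative)
origin = (-10.0, -10.0)    # world position of the lower-left pixel
grid = [[0] * 400 for _ in range(400)]

# Hypothetical robot position at the moment an unhealthy plant is seen.
row, col = world_to_pixel(2.02, -3.52, origin, resolution, height=len(grid))
marked = mark(grid, row, col)
print(row, col, marked)
```

Poses that fall outside the map are ignored rather than raising an error, which keeps a stray estimate from stopping the run.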

Plant_D is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed in the Master in Robotics and Advanced Construction in 2021/2022 by:

Students: Andrea Nájera, Jordi Vilanova, Libish Murugesan, Mit Patel

Faculty: Carlos Rizzo

Assistant: Vincent Huyge