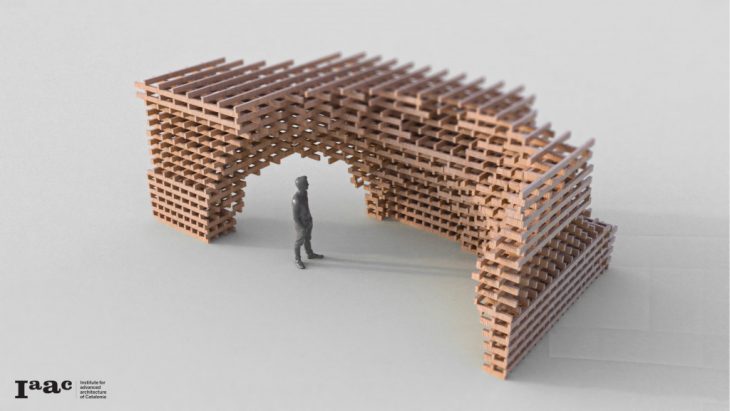

This project extends previous research (link) and focuses on the phase after material collation: how the collated discrete elements can be used in architectural design.

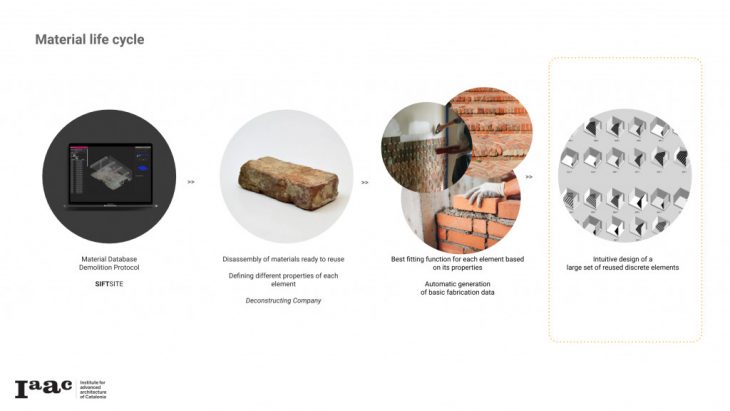

The research starts by creating a digital database. It then defines the properties of the reused material and the new purposes it can serve. Finally, it establishes a new design workflow that helps architects incorporate reused construction material into their designs.

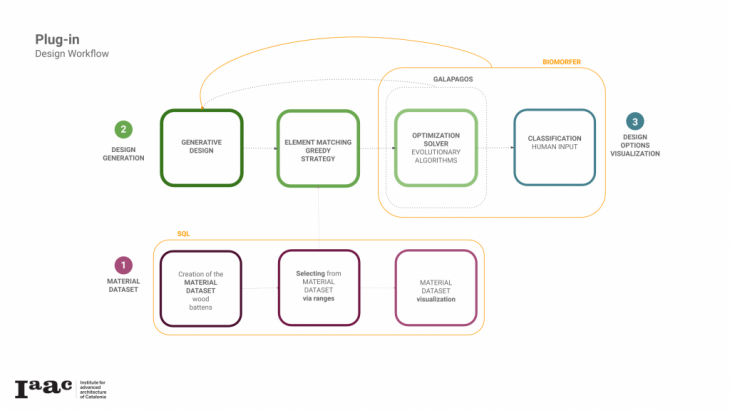

The work we developed has two main parts: dataset creation and design generation.

The case study starts from a hypothetical future scenario in which the Atelier's ceiling is refurbished and some of the old wood battens can be recovered for future use.

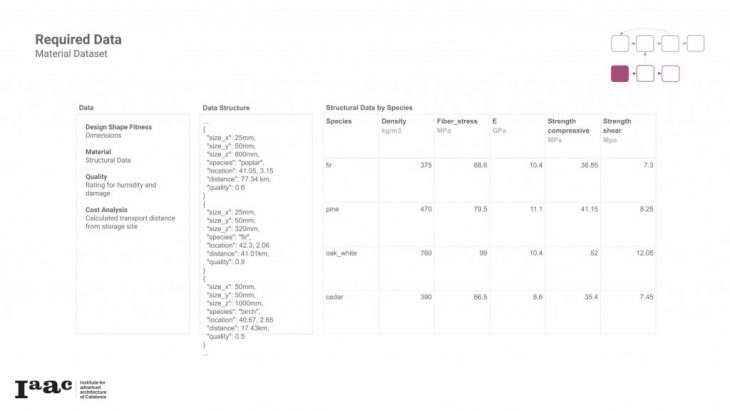

Given this expected source of data, we decided the most useful element characteristics to search by were:

- the physical dimensions;
- the material source and species, which describe the ideal structural parameters;
- an abstract quality rating representing humidity, insect, or other damage that would reduce those ideal parameters;
- a cost rating based on the calculated transport distance of the material.
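To make these characteristics concrete, here is a minimal sketch of one dataset record as a Python dataclass. The field names, the 1 to 5 quality scale, and the rule that buckets transport distance into a cost rating (`km_per_step`) are all hypothetical; they are not the project's actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Batten:
    """One reclaimed wood element in the dataset (hypothetical schema)."""
    id: int
    length_mm: float      # physical dimensions
    width_mm: float
    height_mm: float
    source: str           # where the element was recovered
    species: str          # wood species, implies ideal structural values
    quality: int          # assumed abstract rating: 1 (heavily damaged) .. 5 (as new)
    transport_km: float   # distance used to derive the cost rating

    def cost_rating(self, km_per_step: float = 50.0) -> int:
        """Bucket transport distance into a coarse cost rating (assumed rule)."""
        return 1 + int(self.transport_km // km_per_step)


b = Batten(1, 2400, 45, 20, "Atelier ceiling", "pine", 4, 12.0)
print(b.cost_rating())  # 1
```

Keeping the record immutable (`frozen=True`) suits a dataset that should only change through explicit matching steps.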


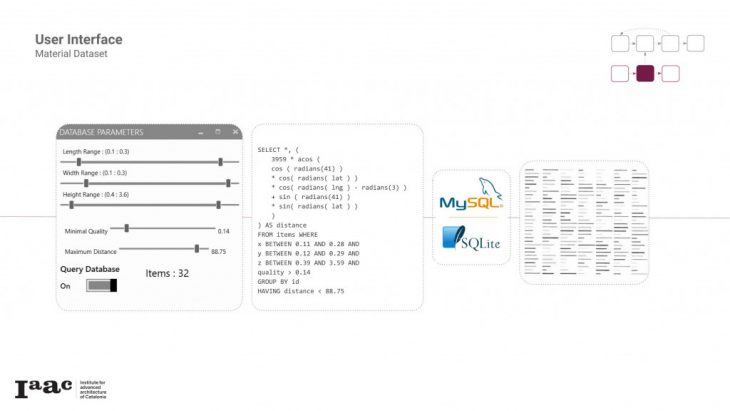

As an initial source of design control, we implement a GUI that lets the user select specific slices of the total dataset by setting minimums and maximums for different parameters. In practice, this takes the form of queries to an SQL database where the full dataset is stored. We chose SQL because it is well suited to queries with multiple overlapping restrictions, and it can run locally for testing or over a network once scaled up.
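A minimal sketch of how min/max GUI settings could become one SQL query, using Python's built-in `sqlite3` as a local stand-in for the project's database. The `battens` table and its column names are assumptions for illustration. Column names go through an allow-list because SQL placeholders can bind values but not identifiers.

```python
import sqlite3

ALLOWED = {"length_mm", "quality", "transport_km"}  # hypothetical columns


def select_slice(conn, filters):
    """Return ids of battens whose columns fall within [min, max] ranges.

    filters maps a column name to a (min, max) tuple; None means unbounded.
    """
    clauses, params = [], []
    for column, (lo, hi) in filters.items():
        if column not in ALLOWED:
            raise ValueError(f"unknown column: {column}")
        if lo is not None:
            clauses.append(f"{column} >= ?")
            params.append(lo)
        if hi is not None:
            clauses.append(f"{column} <= ?")
            params.append(hi)
    where = " AND ".join(clauses) if clauses else "1"
    rows = conn.execute(f"SELECT id FROM battens WHERE {where} ORDER BY id", params)
    return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE battens (id INTEGER PRIMARY KEY, length_mm REAL, "
             "quality INTEGER, transport_km REAL)")
conn.executemany("INSERT INTO battens VALUES (?, ?, ?, ?)", [
    (1, 2400, 4, 12.0),
    (2, 900, 2, 12.0),
    (3, 1800, 5, 80.0),
])
print(select_slice(conn, {"length_mm": (1000, None), "quality": (3, 5)}))  # [1, 3]
```

Each overlapping restriction simply adds another `AND` clause, which is the property that makes SQL a good fit here.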

As the user makes selections in the GUI, a real-time preview of the material they will have to work with is displayed, organized by different parameters.
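One way such a preview could be fed is an aggregate query that reruns whenever a GUI filter changes. This sketch groups the current slice by a chosen parameter and reports piece count and total length. The table, columns, and `min_length` filter are hypothetical, and `sqlite3` again stands in for the real database.

```python
import sqlite3

GROUPABLE = {"species", "quality", "source"}  # hypothetical columns


def preview_by(conn, column, min_length=0):
    """Count pieces and sum their length per value of `column`."""
    if column not in GROUPABLE:
        raise ValueError(f"cannot group by {column}")
    rows = conn.execute(
        f"SELECT {column}, COUNT(*), SUM(length_mm) FROM battens "
        f"WHERE length_mm >= ? GROUP BY {column} ORDER BY {column}",
        (min_length,),
    )
    return rows.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE battens (id INTEGER PRIMARY KEY, species TEXT, "
             "length_mm REAL, quality INTEGER, source TEXT)")
conn.executemany("INSERT INTO battens VALUES (?, ?, ?, ?, ?)", [
    (1, "pine", 2400, 4, "Atelier ceiling"),
    (2, "pine", 900, 2, "Atelier ceiling"),
    (3, "oak", 1800, 5, "Atelier ceiling"),
])
print(preview_by(conn, "species", min_length=1000))
```

Pushing the aggregation into the database keeps the preview cheap even when the dataset is large.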

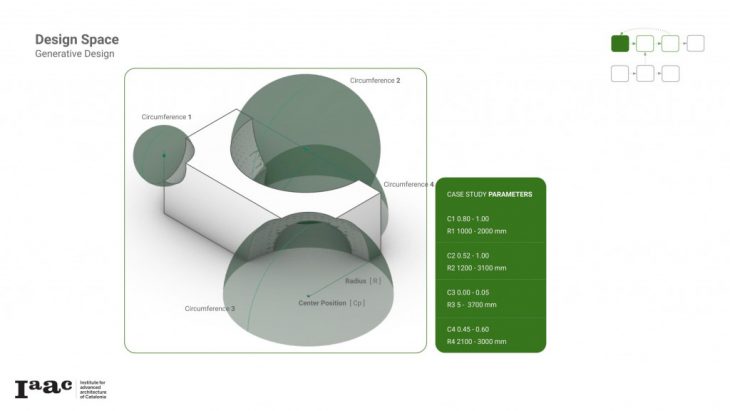

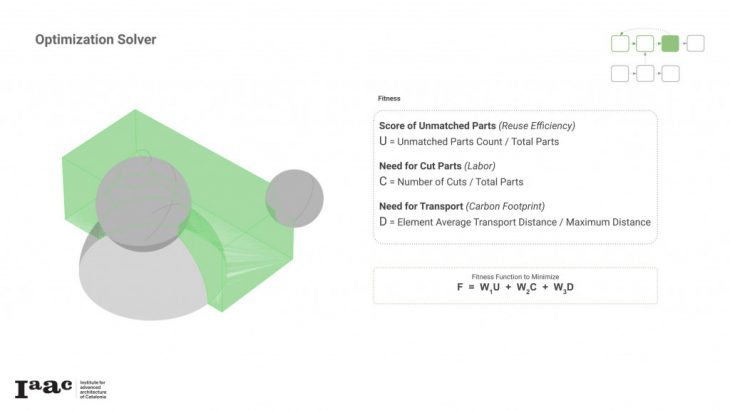

Design generation is done in three steps. First, a simple design space is created, which lets the research focus on defining the matching algorithm. The matching algorithm then pairs the design elements with the available material. Finally, an optimization solver searches for the best solution given various parameters (cost, material waste…).

Definition of the design and identification of the phenotypes to be optimized.

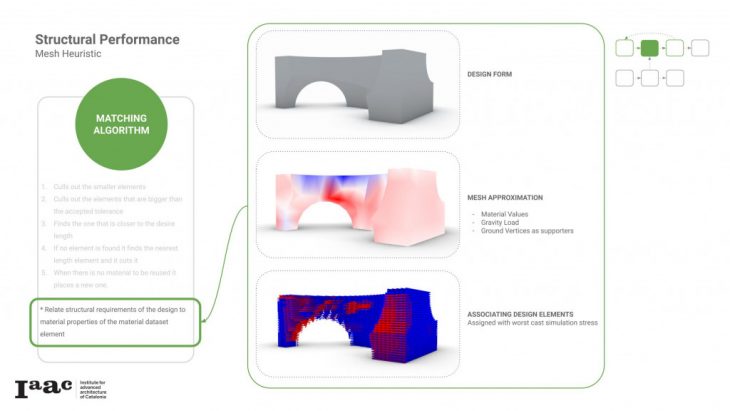

To relate the material to the design, we need to build a language that captures the construction constraints. A grid is created, and the lengths of its elements are what the matching algorithm processes.
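To show how a grid yields element lengths for matching, here is a minimal sketch. It assumes a plain rectangular grid whose horizontal and vertical members are the design elements. The grid layout, the id scheme (`h…`/`v…`), and the spacing parameters are hypothetical; the project's actual grid follows its design space.

```python
import math


def rectangular_grid(nx, ny, dx, dy):
    """Nodes and member edges of an nx-by-ny grid of cells (hypothetical layout)."""
    nodes = {}
    for j in range(ny + 1):
        for i in range(nx + 1):
            nodes[j * (nx + 1) + i] = (i * dx, j * dy)
    edges = {}
    for j in range(ny + 1):          # horizontal members
        for i in range(nx):
            a = j * (nx + 1) + i
            edges[f"h{j}_{i}"] = (a, a + 1)
    for j in range(ny):              # vertical members
        for i in range(nx + 1):
            a = j * (nx + 1) + i
            edges[f"v{j}_{i}"] = (a, a + nx + 1)
    return nodes, edges


def element_lengths(nodes, edges):
    """Map each element id to its length: the input the matching step needs."""
    return {eid: math.dist(nodes[a], nodes[b]) for eid, (a, b) in edges.items()}


nodes, edges = rectangular_grid(2, 1, 600, 400)
print(element_lengths(nodes, edges))
```

Keying the lengths by element id keeps the link back to the geometry, so matched pieces can later be placed in the reconstructed shape.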

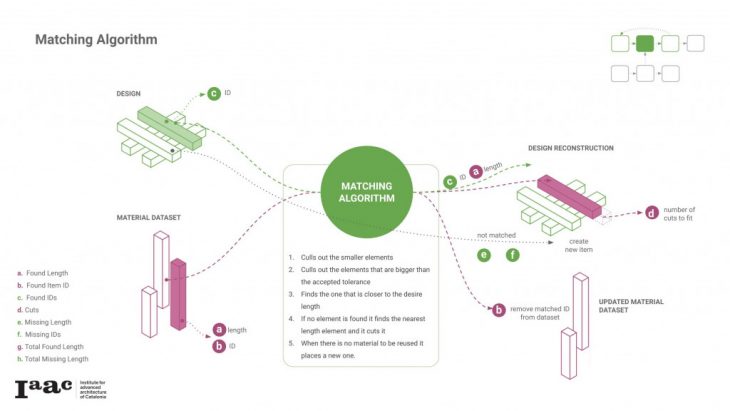

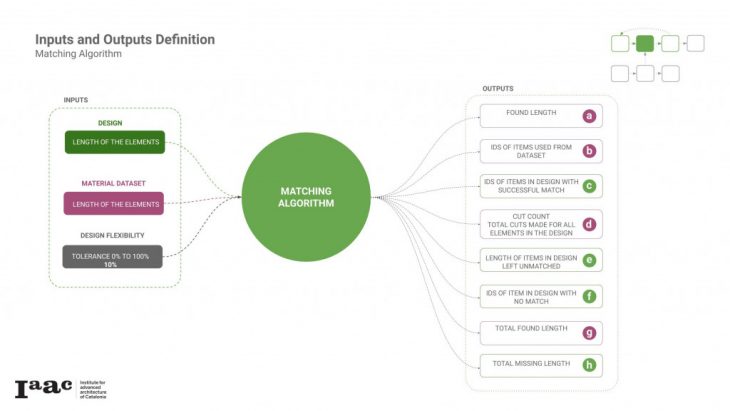

The inputs to the matching algorithm are the element lengths from the design, the material dataset, and a tolerance of 10 percent. The algorithm's outputs are then used for the optimization and for reconstructing the shape of the elements. The matching works mainly through the lengths and ids of the pieces in the design and in the dataset.
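The 10 percent tolerance can be read several ways. This sketch assumes a dataset piece counts as a direct match when its length is within ±10% of the required length. That interpretation, and the dict-of-ids layout, are assumptions rather than the project's confirmed rule.

```python
TOLERANCE = 0.10  # assumed meaning: ±10% of the required length


def within_tolerance(needed, available, tol=TOLERANCE):
    """True if a dataset piece can stand in for a design element as-is."""
    return abs(available - needed) <= tol * needed


def candidates(needed, stock, tol=TOLERANCE):
    """Ids of dataset pieces usable without cutting for a given length."""
    return sorted(sid for sid, length in stock.items()
                  if within_tolerance(needed, length, tol))


stock = {"s1": 1080, "s2": 1500, "s3": 950}
print(candidates(1000, stock))  # ['s1', 's3']
```

Working with ids rather than lengths alone is what lets the algorithm remove a specific piece from the dataset once it has been used.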

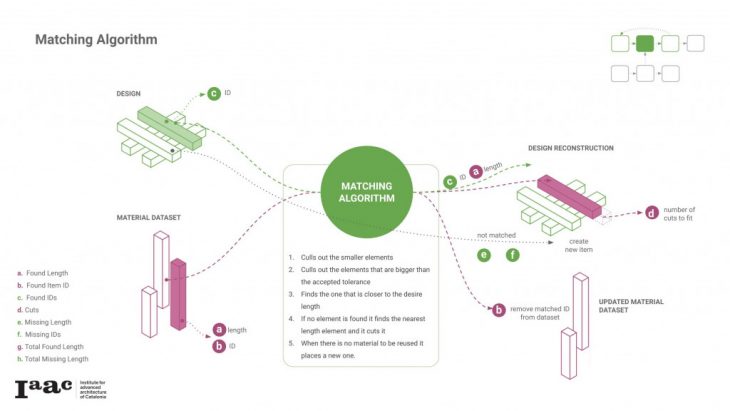

The algorithm takes the length of each piece the design needs and matches it with a length in the dataset. The matched piece is used in the design structure and removed from the material remaining in the dataset. When no piece matches exactly but a longer one can fit, the algorithm cuts that batten and puts the leftover part back into the material dataset. When there is no match at all, a new element from outside the material dataset is used. The optimization solver then minimizes the number of cuts and the amount of outside material.
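The three cases above (direct match, cut with the offcut returned to the dataset, new material) can be sketched as a greedy loop. This is a hypothetical illustration, not the project's implementation. The longest-first ordering, the "closest direct match" and "shortest sufficient piece" choices, the ±10% tolerance reading, and the offcut id format are all assumptions.

```python
def match(design, stock, tol=0.10):
    """Greedy matching of design elements to stock pieces (sketch).

    design: dict design_id -> required length
    stock:  dict stock_id  -> available length (copied, not mutated)
    Returns (assignments, cuts, new_count, remaining_stock); an assignment
    of None means a new element from outside the dataset.
    """
    stock = dict(stock)
    assignments, cuts, new_count, offcut_no = {}, 0, 0, 0
    # Longest required pieces first, so long stock is not spent on short parts.
    for did, need in sorted(design.items(), key=lambda kv: -kv[1]):
        # 1) Direct match within tolerance: use the piece and remove it.
        direct = [s for s, length in stock.items() if abs(length - need) <= tol * need]
        if direct:
            sid = min(direct, key=lambda s: abs(stock[s] - need))
            del stock[sid]
            assignments[did] = sid
            continue
        # 2) Cut the shortest piece that is long enough; offcut goes back to stock.
        longer = [s for s, length in stock.items() if length > need]
        if longer:
            sid = min(longer, key=lambda s: stock[s])
            stock[f"{sid}/cut{offcut_no}"] = stock.pop(sid) - need
            offcut_no += 1
            cuts += 1
            assignments[did] = sid
            continue
        # 3) No usable stock: fall back to new material.
        assignments[did] = None
        new_count += 1
    return assignments, cuts, new_count, stock


design = {"d1": 1000, "d2": 600, "d3": 2000}
stock = {"s1": 1050, "s2": 1500}
print(match(design, stock))
```

Here `d3` gets new material, `d1` uses `s1` directly, and `d2` is cut from `s2`, leaving a 900-unit offcut available for later elements. The returned cut and new-material counts are the quantities the solver would minimize.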

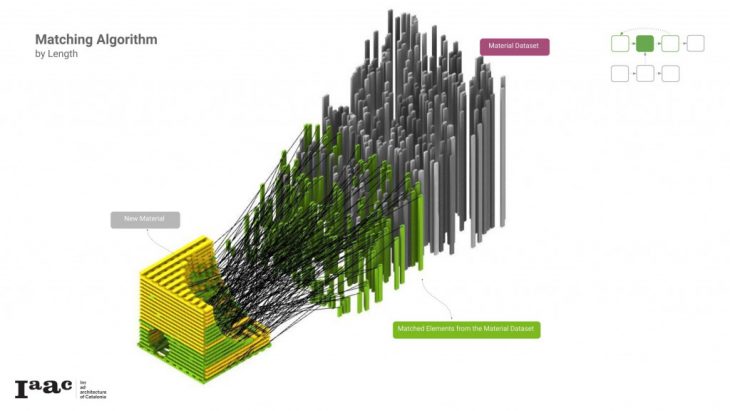

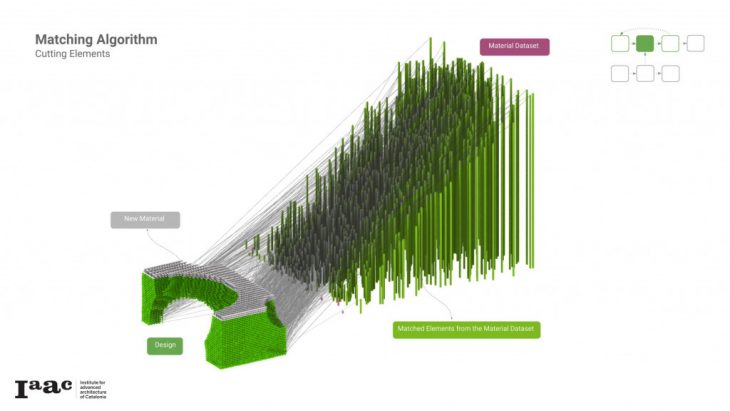

The first matching algorithm we had can be seen below. There was material in the dataset that could potentially have been reused, but the design was already asking for new material.
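To illustrate the problem, here is a sketch of a match-only approach with no cutting. It reproduces the behavior described above: a long batten stays in the dataset while the design already requests new pieces. This is a reconstruction under the same assumed ±10% tolerance, not the original code.

```python
def match_exact_only(design, stock, tol=0.10):
    """First-version style matching: direct matches only, no cutting (sketch)."""
    stock = dict(stock)
    new_ids = []
    for did, need in design.items():
        direct = [s for s, length in stock.items() if abs(length - need) <= tol * need]
        if direct:
            del stock[direct[0]]
        else:
            new_ids.append(did)  # asks for new material even if longer stock exists
    return new_ids, stock


print(match_exact_only({"d1": 600, "d2": 600}, {"s1": 1500}))
# (['d1', 'd2'], {'s1': 1500})
```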

We improved the algorithm by allowing material to be cut when no direct match was found. As can be seen, the design now asks for new material only once the dataset material has run out. To improve performance further, we could start relating material quality to structural demand: new material would be placed where higher forces appear, and lower-quality material would be used as filler.
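The proposed quality-aware placement could be sketched as a rank pairing: sort positions by force and pieces by quality, then pair them in order. This is a hypothetical illustration of the idea, not something the project implemented. It ignores lengths for brevity and models new material simply as the top quality rating; in practice it would be combined with the length matching.

```python
def place_by_quality(positions, pieces):
    """Pair the most stressed positions with the best pieces (proposed idea).

    positions: dict position_id -> force or utilisation from analysis
    pieces:    dict piece_id -> quality rating (higher is better)
    """
    ranked_positions = sorted(positions, key=lambda p: positions[p], reverse=True)
    ranked_pieces = sorted(pieces, key=lambda q: pieces[q], reverse=True)
    return dict(zip(ranked_positions, ranked_pieces))


positions = {"p1": 2.0, "p2": 9.5, "p3": 4.1}
pieces = {"new1": 5, "r1": 2, "r2": 4}
print(place_by_quality(positions, pieces))
# {'p2': 'new1', 'p3': 'r2', 'p1': 'r1'}
```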

We also added an initial source of structural analysis for the designs via Karamba3D. For now we use it only for broad estimates, applying its shell analysis functions to the original solid design Brep. Within Karamba3D, this is converted into a reduced mesh with wood structural values and a simple gravity load. Each final stacked element is then associated with a position on this mesh by proximity and assigned a relative displacement value accordingly.
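The proximity step can be sketched outside Grasshopper, assuming the mesh node positions and their displacement magnitudes have already been exported from the analysis as plain lists. No Karamba3D API is used here. "Relative" is interpreted as each value divided by the largest displacement, which is an assumption.

```python
import math


def assign_displacements(element_centres, mesh_nodes, node_disp):
    """Give each stacked element the displacement of its nearest mesh node.

    element_centres: dict element_id -> (x, y, z) centroid
    mesh_nodes:      list of (x, y, z) node positions from the analysis mesh
    node_disp:       displacement magnitudes, same order as mesh_nodes
    Returns element_id -> displacement relative to the largest one.
    """
    max_disp = max(node_disp) or 1.0  # avoid dividing by zero for an unloaded mesh
    result = {}
    for eid, centre in element_centres.items():
        nearest = min(range(len(mesh_nodes)),
                      key=lambda i: math.dist(centre, mesh_nodes[i]))
        result[eid] = node_disp[nearest] / max_disp
    return result


nodes = [(0, 0, 0), (1000, 0, 0), (2000, 0, 0)]
disp = [0.0, 4.0, 2.0]
centres = {"a": (100, 0, 50), "b": (1900, 0, 0), "c": (1100, 10, 0)}
print(assign_displacements(centres, nodes, disp))
# {'a': 0.0, 'b': 0.5, 'c': 1.0}
```

The brute-force nearest-node search is fine for a reduced mesh; a spatial index such as a k-d tree would be the usual choice for larger meshes.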

We chose three primary factors by which to judge the designs. First, we want to make the fullest use of the available material, so we want to minimize the number of unmatched parts in the design. Second, we want to require as little additional labor at construction time as possible, so we want to minimize the total number of material cuts. Finally, the construction should be mindful of its carbon impact, so we want to minimize the transportation distance for all included elements. These factors are normalized, weighted, and combined into a single fitness function to be minimized.
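The combined objective can be sketched as a weighted sum of normalized terms. Several details here are assumptions, not the project's values: the normalization (counts divided by the number of design parts, distance divided by a reference maximum `max_km`) and the default weights.

```python
def fitness(unmatched, cuts, transport_km, n_parts, max_km, weights=(0.5, 0.3, 0.2)):
    """Weighted sum of normalized objectives; lower is better (sketch).

    Normalization and weights are hypothetical choices for illustration.
    """
    terms = (
        unmatched / n_parts,       # share of design parts needing new material
        cuts / n_parts,            # labor proxy: cuts per design part
        transport_km / max_km,     # carbon proxy: total transport distance
    )
    return sum(w * t for w, t in zip(weights, terms))


print(round(fitness(unmatched=2, cuts=5, transport_km=300, n_parts=10, max_km=1000), 2))
# 0.31
```

Normalizing first keeps one objective from dominating just because of its units. The weights then express the design priorities explicitly.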

Post Collation Design Exploration is a project of IAAC, Institute for Advanced Architecture of Catalonia developed in the Master in Robotics and Advanced Construction 2019/2020 by: Students: Anna Batallé, Irem Yagmur Cebeci, Matthew Gordon, Roberto Vargas Faculty: Mateusz Zwierzycki Assistant: Soroush Garivani