PROTOTYPING THE ORGANIC

AI in Design Workflows for Complex Forms in Nature

The conception of a new design or building is arguably the most creative stage of a project and the one most open to inspiration from the world around us. AI algorithms are increasingly being used to generate inspirational and creative images; however, the extent to which they can be used to create workable designs remains in question. This paper explores how these algorithms can go beyond creating thought-provoking images and be implemented in a holistic design workflow that allows non-technical users to configure and output rationalised organic forms rapidly for concept development.

Key words: DC-GAN, web configurators, complex forms, visualisation

Can the process of designing complex forms for architecture be simplified through AI so that less technically skilled users can move rapidly from inspiration to prototype? How will future design apps combine the creative and analytical capabilities of AI? As machine learning becomes more advanced, creative concept workflows can be streamlined into a single platform that outputs complex analysis and feasible design options to the average user. This research investigates the potential for such workflows to develop within the design and AEC industry. Its objective is to explore whether a user with limited technical or CAD knowledge can prototype and analyse a complex design at concept stage from an inspirational image from nature. Starting from a selected image or text prompt, the average user can use AI to rapidly produce an organic form, which is then interactively rationalised through physics simulation, environmental analysis, k-means clustering and other algorithms. The results are visualised on the web and can be downloaded as a model for further processing or prototyping. The outcome is a web interface for interactively configuring geometrically complex designs, with immediate feedback on environmental analysis and constructability details, which should speed up the design process for a very common typology. As the technology develops, these processes will be able to run interactively through single web interfaces or directly through mixed reality devices.

THE PROBLEM: Beyond inspiration

There has been a recent proliferation in the use of 2D GAN algorithms to create concept images from text and image prompts in web apps such as Midjourney, and social media feeds have been flooded with this kind of imagery. However, beyond exciting and inspirational image generation, the questions often asked include: what is the next step? How useful is this? How can three-dimensional architectural designs be created from these images? Taking this technology beyond inspirational imagery requires further investigation into how its output can be rationalised and prototyped into working designs.

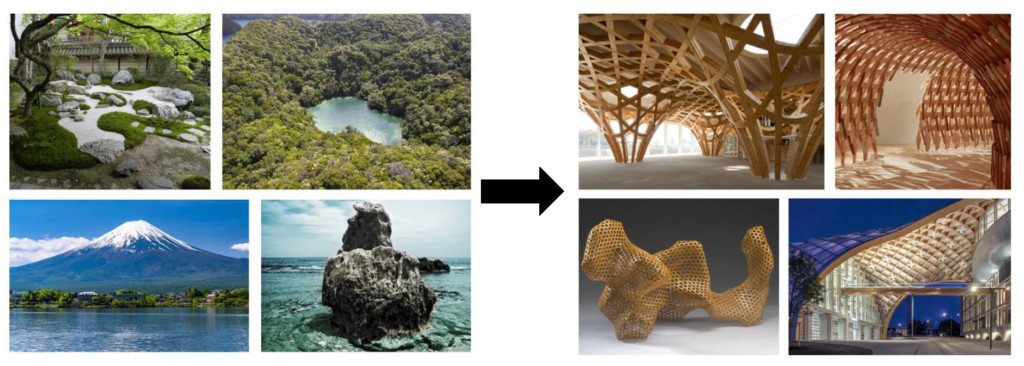

Many architects and designers take inspiration from nature and interpret it in vastly different ways, from the philosophical, the scientific and the poetic to more literal extraction of organic form. It is not uncommon to look at objects or natural artefacts for inspiration at the start of a project, although the steps between a photograph and a feasible concept design are much more complicated.

METHODOLOGY: AI Model to Prototype

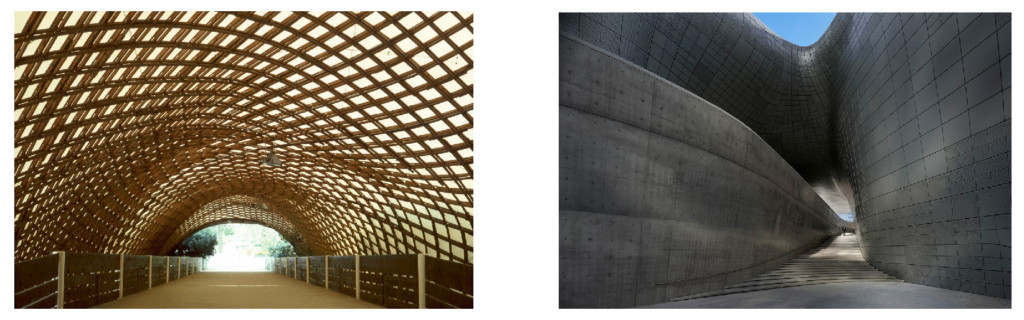

Given this enormous variety of interpretations and design potential, the scope of this project is limited to proposing a workflow for interpreting natural organic forms as freeform panelised and wooden gridshell structures. These are common strategies for rationalising such fluid forms, and they express the form particularly well. Engineered wood products also have a direct connection with nature through their materiality and are often celebrated in structure.

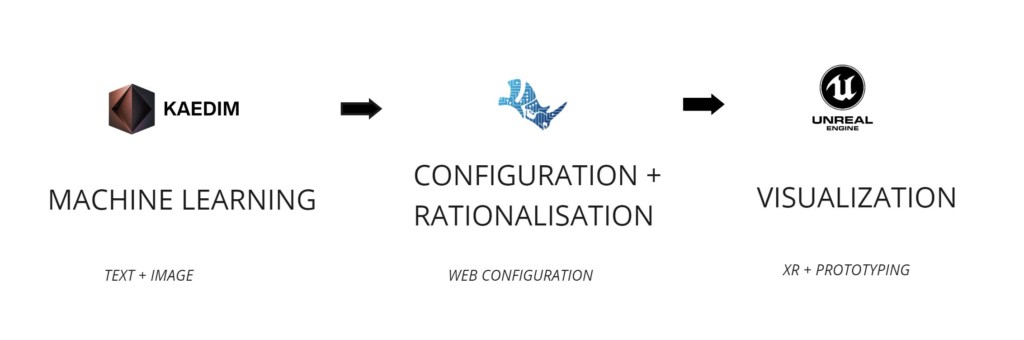

This project approaches the workflow in three parts. The first looks into the use of machine learning algorithms such as DC-GANs, which go beyond 2D imagery, to generate the input for the architectural design. The second takes the AI-generated forms and allows users to assert their agency in real time, configuring and rationalising the design to output basic construction data and diagrams. The last explores the potential to take this process beyond the 2D screen for a more immersive design experience using XR and prototyping technology.

CREATIVE AI: Form Generation

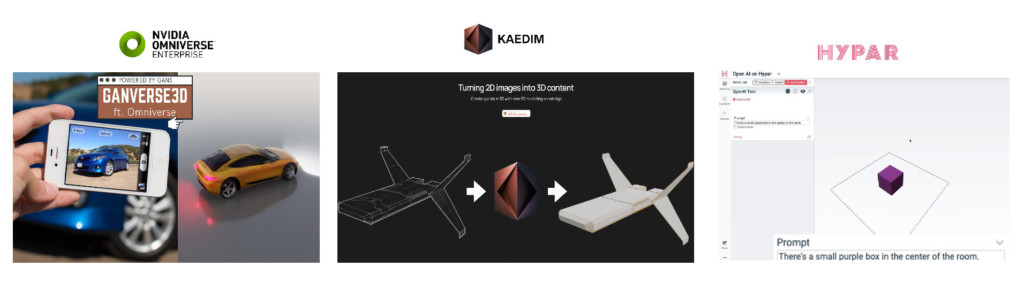

There has recently been promising progress in using deep convolutional GANs to create 3D assets from image inputs. Nvidia, for example, has developed workflows that use GANs to create fully textured and lit models of cars from just a photograph, and more recently the startup Kaedim has offered a professional service, aimed at video games, for rapidly converting 2D images into 3D models. Another interesting example comes from Andrew Heumann, a software developer at Hypar (an online platform for building generation), who explores the ability to create spatial objects and scenes from text prompts, as shown on the far right.

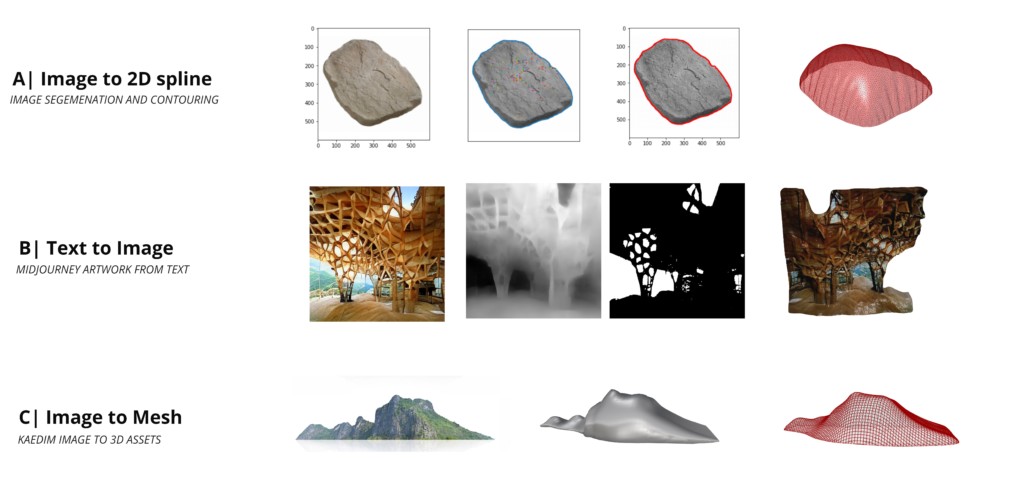

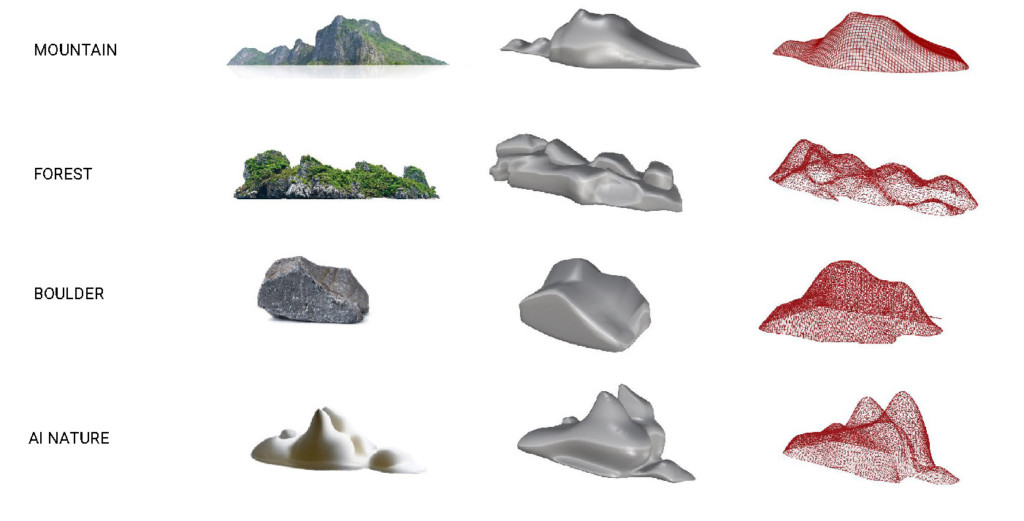

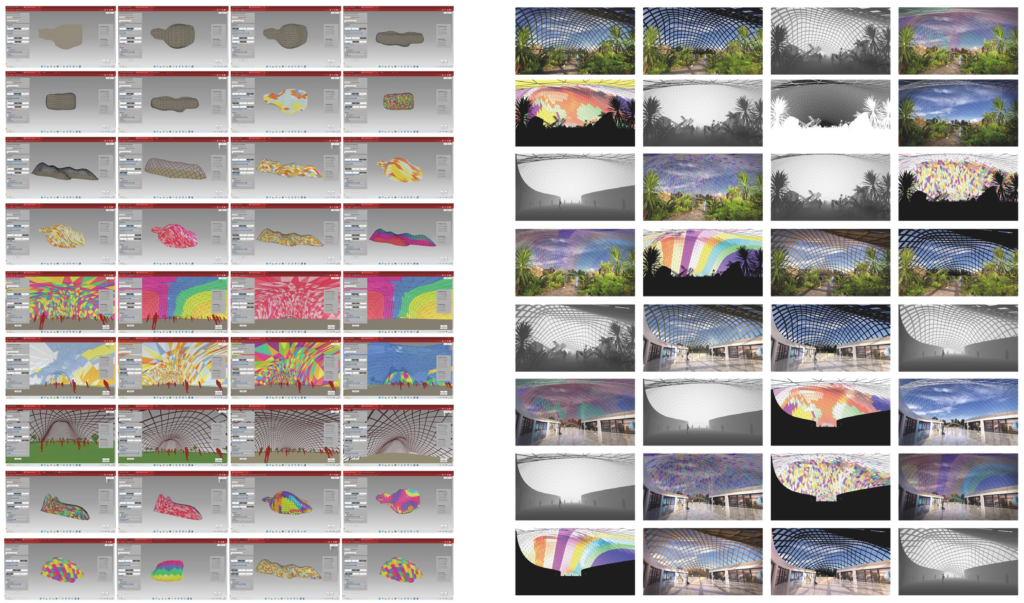

A few investigations were undertaken to explore how a 2D image could be made into an architectural form. These included using image segmentation to contour images and physics simulations to inflate them, using monocular depth estimation to produce 3D models from typical GAN-generated images, and using Kaedim to convert images of nature into meshes that were then also rationalised with physics simulations.
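The depth-based route mentioned above can be illustrated with a minimal sketch. It assumes a depth map is already available as a rectangular grid of numbers (for example from a monocular depth model, which is not shown here) and turns it into a triangulated heightfield mesh. The function name `depth_map_to_mesh` and the `height_scale` parameter are hypothetical and are not taken from the project.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def depth_map_to_mesh(
    depth: Sequence[Sequence[float]], height_scale: float = 1.0
) -> Tuple[List[Vertex], List[Face]]:
    """Turn a rectangular depth grid into a triangulated heightfield.

    Each pixel becomes one vertex (x = column, y = row, z = scaled depth),
    and each 2x2 block of pixels becomes two triangles.
    """
    rows = len(depth)
    cols = len(depth[0])
    if any(len(row) != cols for row in depth):
        raise ValueError("depth map must be rectangular")

    vertices: List[Vertex] = [
        (float(c), float(r), depth[r][c] * height_scale)
        for r in range(rows)
        for c in range(cols)
    ]

    faces: List[Face] = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of the top-left vertex of this cell
            faces.append((i, i + 1, i + cols))
            faces.append((i + 1, i + cols + 1, i + cols))
    return vertices, faces
```

A mesh produced this way is an open surface rather than a closed volume, which is one reason a closed mesh from a dedicated image-to-3D service can be a more convenient base for later rationalisation.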

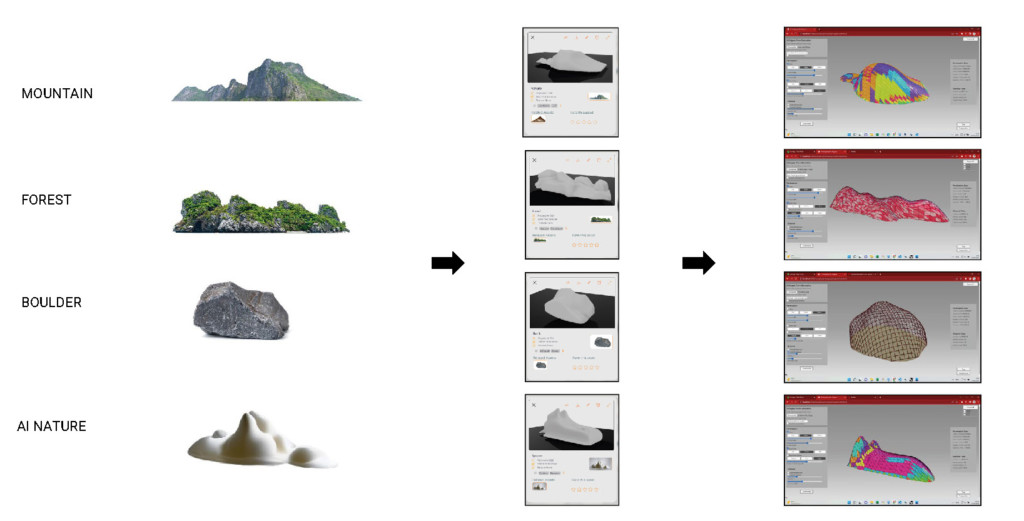

The most promising workflow was utilizing Kaedim’s web app due to its ability to create topologically clean and closed meshes which do a good job of approximating the image. This mesh would also provide a good base input for further architectural manipulations and rationalisation with 3D software such as Rhinoceros.

CONFIGURATORS: Interactive Web Interfaces

With the base AI form generated, the next step is to assert the user's agency and manipulate the form until it becomes architecturally feasible. A web app has been developed as a proof of concept; it takes the GAN-generated meshes and gives the user the ability to configure the form as either a panelized or a gridshell type, as explained earlier.

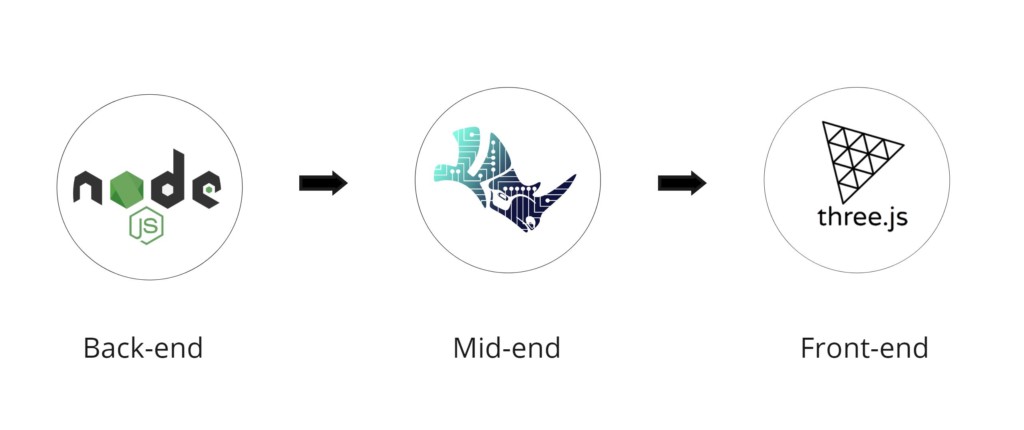

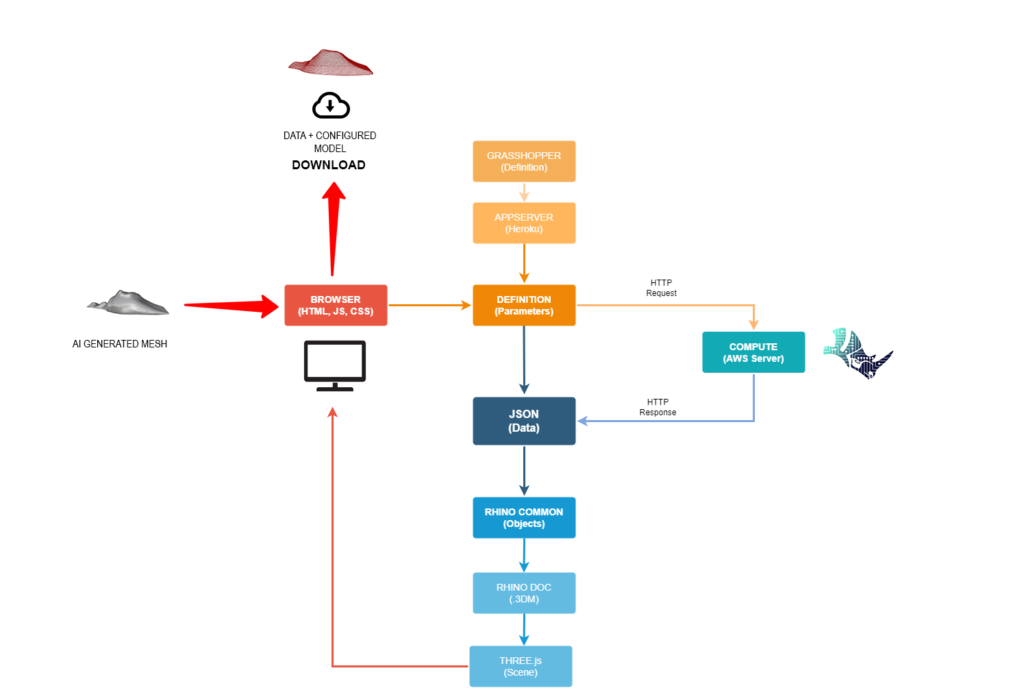

The web app uses Rhino and Grasshopper because of their proven ability to rationalise and analyse complex architectural designs. However, for this software to run in a web browser, Rhino.Compute is needed. This is essentially a headless version of Rhino that runs Grasshopper scripts and provides access to RhinoCommon geometry on the web. Once it is hosted on a cloud platform, a back-end app server built on Node.js can process requests and front-end libraries such as three.js can render the results in the browser.
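To make the request flow more concrete, the sketch below builds the kind of JSON body an app server might send to a compute server in order to evaluate a Grasshopper definition. It is written in Python for brevity, although the project's app server uses Node.js. The field names (`algo`, `pointer`, `values`, `ParamName`, `InnerTree`) are assumptions modelled on the Rhino Compute Grasshopper endpoint and should be checked against its current documentation; the input name `RH_IN:grid_size` is purely hypothetical. No network call is made.

```python
import base64
import json
from typing import Any, Dict


def build_grasshopper_request(definition_bytes: bytes, inputs: Dict[str, Any]) -> str:
    """Build a JSON request body for evaluating a Grasshopper definition.

    ASSUMPTION: the schema below mirrors the Rhino Compute Grasshopper
    endpoint as commonly documented; verify it before relying on it.
    """
    values = []
    for name, value in inputs.items():
        values.append(
            {
                "ParamName": name,
                # One branch "{0}" holding a single JSON-encoded item.
                "InnerTree": {"{0}": [{"data": json.dumps(value)}]},
            }
        )
    payload = {
        # The definition file is sent inline as base64 text.
        "algo": base64.b64encode(definition_bytes).decode("ascii"),
        "pointer": None,
        "values": values,
    }
    return json.dumps(payload)
```

In a setup like the one described, the browser would send only the slider values to the app server, which would add the definition and forward a body like this to the compute server, then return the resulting geometry for three.js to render.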

This diagram elaborates on the process described above, showing the libraries, servers and data required to create the web configurator. Currently the AI-generated mesh can be uploaded into a web browser and configured (far left) and then processed with a Grasshopper script through Rhino.Compute and an app server (far right). The 3D design iterations can also be downloaded for further processing.

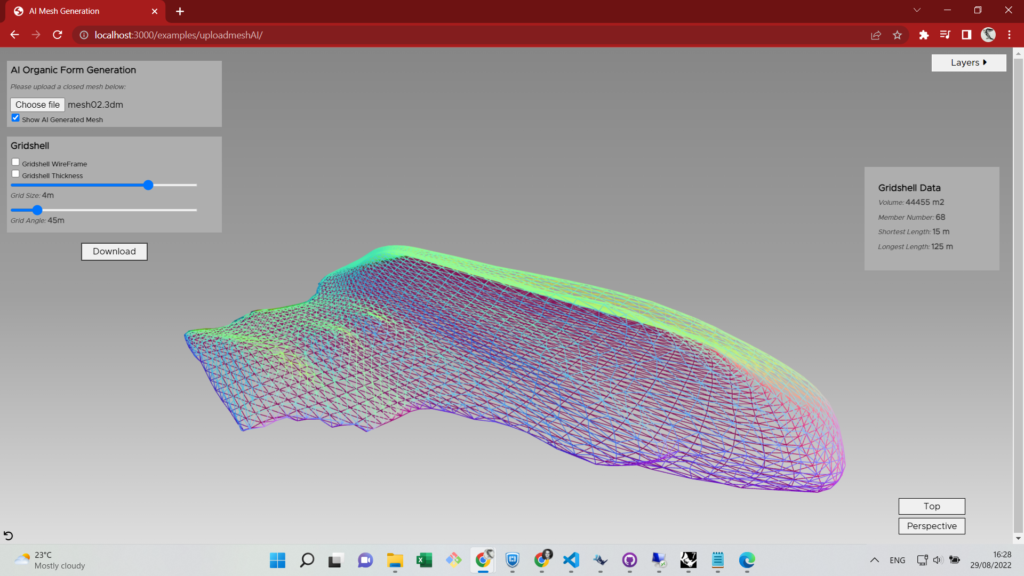

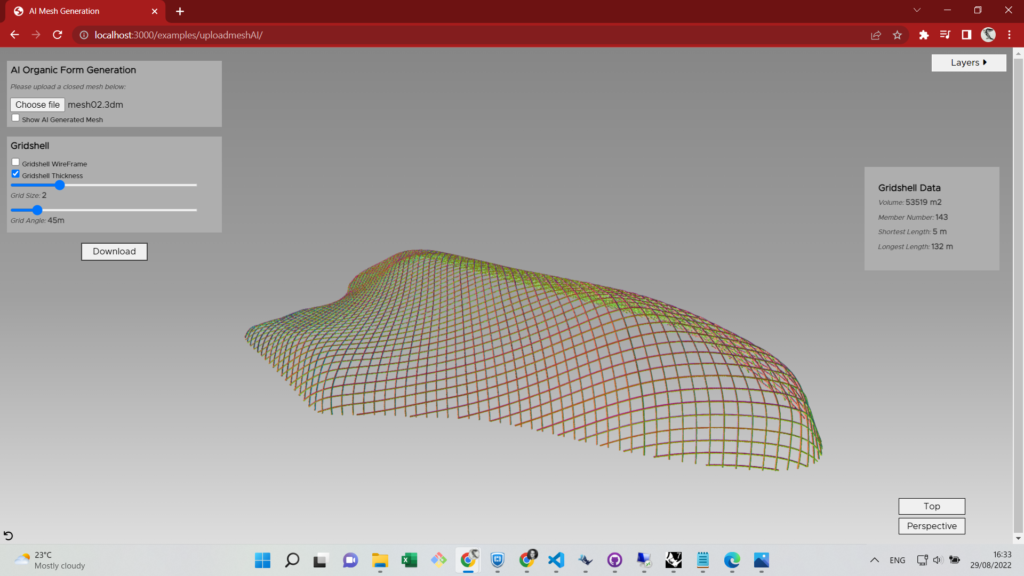

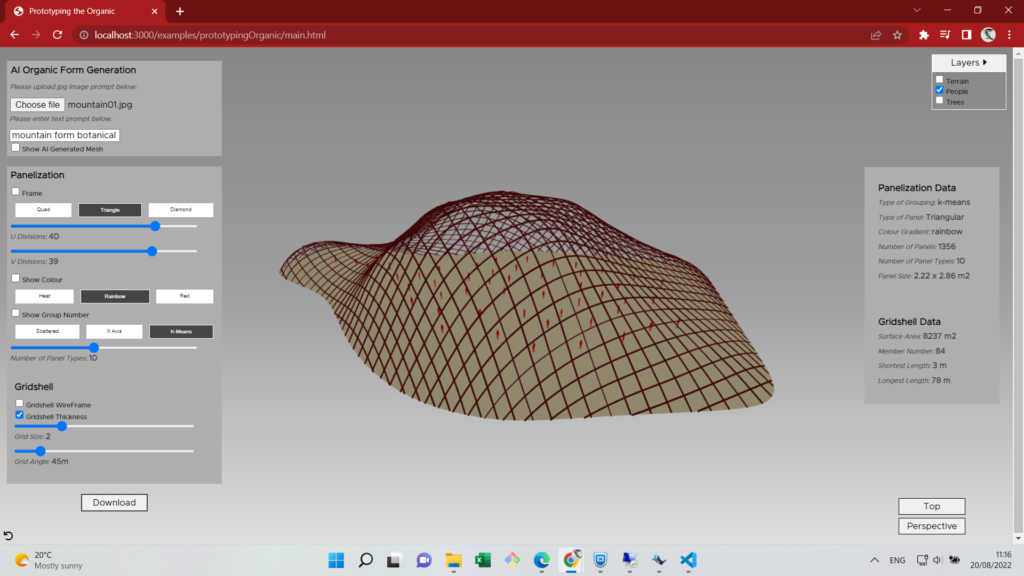

As a proof of concept, a functioning web UI was created that incorporates an AI-generated mesh and design configuration categories. In this basic interface, custom or AI-generated meshes can be uploaded manually to the web app to be viewed, rationalised with mesh relaxation and then configured into a gridshell. This gives the user full control over the input mesh.
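The idea behind mesh relaxation can be sketched with simple Laplacian smoothing, in which every free vertex is moved part of the way towards the average of its neighbours while anchor vertices stay fixed. This is a stand-in technique chosen for illustration; the project itself relies on physics simulation inside Grasshopper, and the function name and parameters here are hypothetical.

```python
from typing import Iterable, List, Sequence, Set, Tuple

Point = Tuple[float, float, float]


def relax_mesh(
    vertices: Sequence[Sequence[float]],
    edges: Iterable[Tuple[int, int]],
    fixed: Set[int],
    iterations: int = 50,
    step: float = 0.5,
) -> List[Point]:
    """Laplacian relaxation: pull each free vertex towards its neighbours."""
    neighbours = {i: set() for i in range(len(vertices))}
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)

    points: List[Point] = [tuple(v) for v in vertices]
    for _ in range(iterations):
        updated: List[Point] = []
        for i, p in enumerate(points):
            if i in fixed or not neighbours[i]:
                updated.append(p)  # anchors and isolated vertices do not move
                continue
            count = len(neighbours[i])
            average = tuple(
                sum(points[j][k] for j in neighbours[i]) / count for k in range(3)
            )
            updated.append(tuple(p[k] + step * (average[k] - p[k]) for k in range(3)))
        points = updated
    return points
```

Keeping the boundary vertices in `fixed` preserves the outline of the uploaded form while the interior is evened out, which is broadly the behaviour a user would expect from a relaxation slider.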

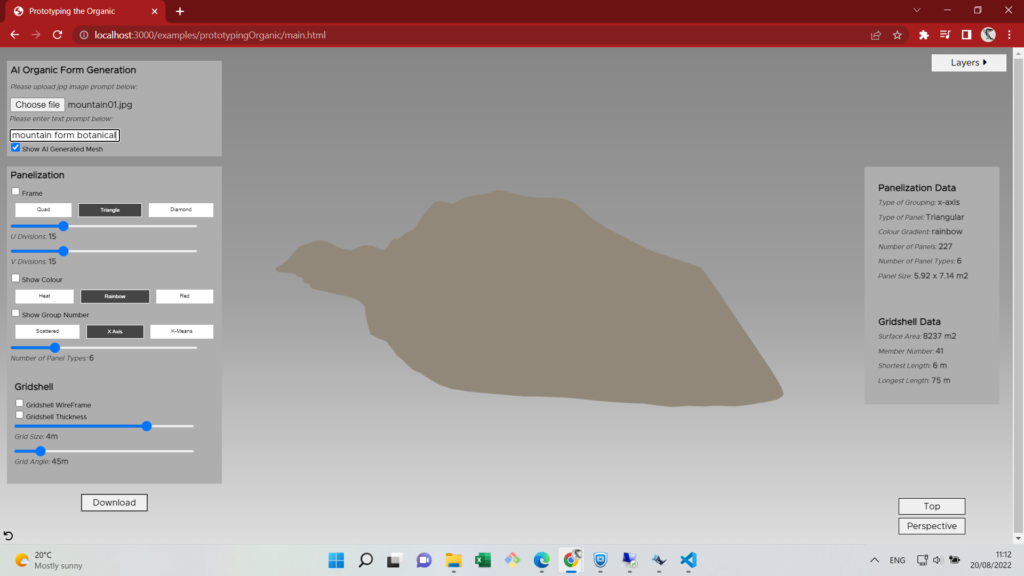

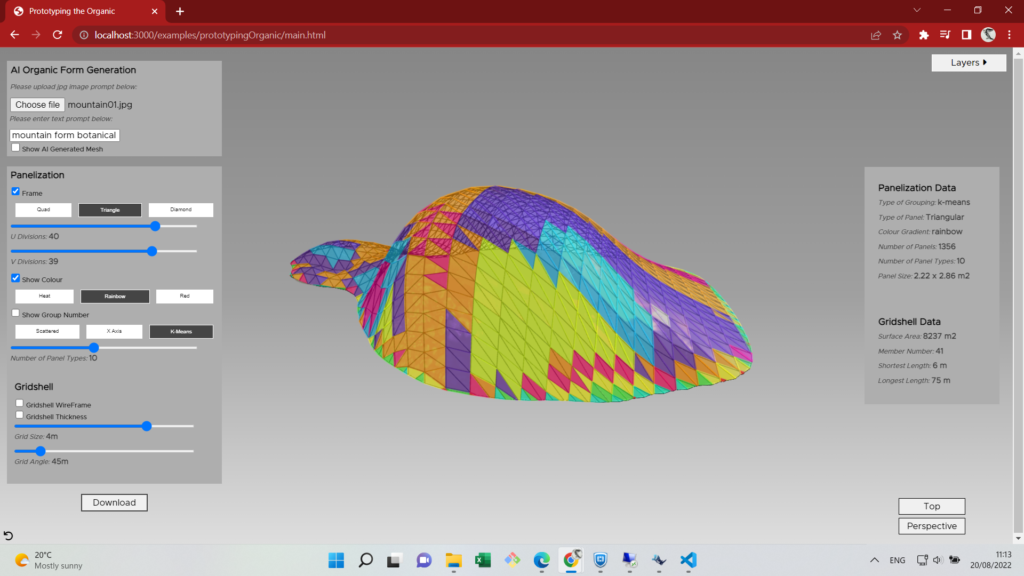

The next web interface advanced this UI further. The interface is separated into three primary sections in the left-side menu. In the future, the first section will allow an input image, text or both to be uploaded as a prompt. This is a similar input technique to applications such as Midjourney, except that it results in a 3D mesh of the inspiration which can be processed immediately. After the mesh is generated and viewed, it can be configured into the required architectural design. Four different meshes generated using the Kaedim algorithms were used as test subjects.

Currently the mesh needs to be uploaded manually; in future development, however, the AI mesh generation will sit within the same interface. The next two sections are for either creating a gridshell design or panelizing the form. Although the web interface currently provides only limited rationalisation options, these could easily be extended in the future to meet a specific niche or design sector.

For the panelization option, the user can choose between quad, triangular or diamond panels and set their sizing. The key feature of this section is the ability to group and count the panels according to the user's preference. This can be done using the k-means algorithm to cluster panels by similarity of shape, or manually by setting the desired amounts. All this data is output beside the 3D visual to show the configured options and sizes.
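Grouping panels by similarity of shape can be sketched as follows. Each panel is described by its sorted edge lengths, and a plain k-means loop assigns panels to the nearest group centre. The choice of feature, the evenly spaced initial centres and the function names are all assumptions made for illustration; the project's own clustering runs inside its Grasshopper script.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def panel_features(panel: Sequence[Point]) -> List[float]:
    """Describe a panel by its sorted edge lengths."""
    n = len(panel)
    return sorted(math.dist(panel[i], panel[(i + 1) % n]) for i in range(n))


def k_means(
    features: Sequence[Sequence[float]], k: int, iterations: int = 20
) -> Tuple[List[int], List[List[float]]]:
    """Plain k-means with a deterministic, evenly spaced initialisation."""
    spacing = len(features) / k
    centroids = [list(features[int(i * spacing)]) for i in range(k)]
    labels = [0] * len(features)
    for _ in range(iterations):
        # Assignment step: each panel joins its nearest centre.
        labels = [
            min(range(k), key=lambda c: math.dist(f, centroids[c])) for f in features
        ]
        # Update step: each centre moves to the mean of its members.
        for c in range(k):
            members = [f for f, label in zip(features, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids
```

Counting how many panels share each label gives the kind of group sizes a data panel could report, and replacing each panel with its group's representative shape is one way to reduce the number of unique parts to fabricate.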

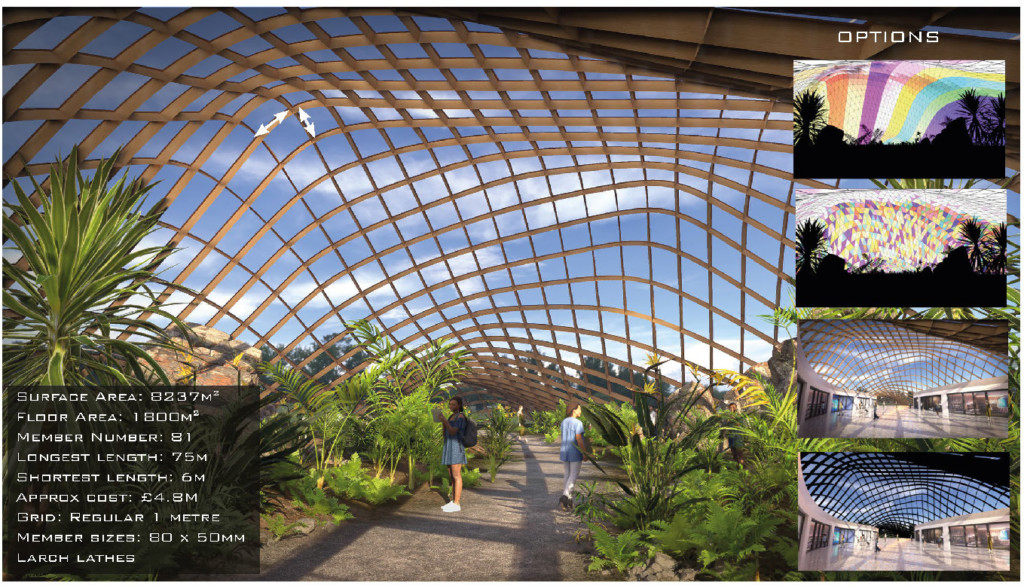

Similarly, when the gridshell option is checked, a physics simulation is run to create an efficient form that approximates the original mesh from a grid. This grid can be manipulated in size and rotation. The results can be seen in real time in the centre of the screen through the three.js library, and a data panel beside the view outputs key information such as surface area, longest and shortest members and component count. Additional features include the ability to change views and add layers to provide more context.
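The figures shown in the data panel can be derived directly from the mesh. The sketch below assumes a triangulated mesh in which every unique edge counts as one structural member, which is a simplification of a real gridshell; the function names and the keys of the returned dictionary are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def triangle_area(a: Point, b: Point, c: Point) -> float:
    """Area of a 3D triangle as half the magnitude of the cross product."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def gridshell_summary(
    vertices: Sequence[Point], faces: Sequence[Tuple[int, int, int]]
) -> Dict[str, float]:
    """Summarise surface area and member lengths of a triangulated mesh."""
    area = 0.0
    edges = set()
    for i, j, k in faces:
        area += triangle_area(vertices[i], vertices[j], vertices[k])
        for a, b in ((i, j), (j, k), (k, i)):
            edges.add((min(a, b), max(a, b)))  # store each shared edge once
    lengths: List[float] = [math.dist(vertices[a], vertices[b]) for a, b in edges]
    return {
        "surface_area": area,
        "member_count": len(lengths),
        "longest_member": max(lengths),
        "shortest_member": min(lengths),
    }
```

For a unit square split into two triangles, this reports five members: four sides of length 1 and one diagonal.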

The final step is to download a fully working 3D model, which can be refined, processed or visualised in other software for further feasibility development. As this application develops, it will provide more fabrication information, such as materiality and the constraints specific to that choice, as well as customisation for other design styles.

FUTURE POTENTIAL: XR and Realtime Technology

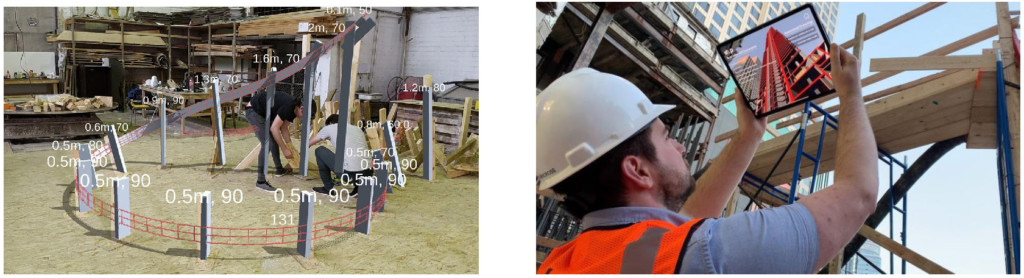

The final part looks into integrating this workflow into more immersive platforms. As AI algorithms become more advanced and portable technologies such as mixed reality devices develop to run all these processes in real time, users will move from 2D screens to wearable devices. This integration is another promising technology workflow for many industries. The processes described in the previous sections have the potential to be delivered within these devices, removing the need for separate platforms and projecting concept designs directly into our reality. Examples built over the last few years already demonstrate the potential of AR technology in both visualisation and construction, using geolocation, site and real-time data.

A further step would be to allow the designer, using wearable technology and speech prompts, to generate various options that are architecturally feasible and come with buildability and cost data. This would ultimately save large amounts of time in the early stages while reducing the technical skill needed by the average user, particularly with the ability to geolocate. For early design stages, the ability to view the various options on site gives a much deeper comprehension, cutting down the time needed to make a decision.

While this project serves to provoke and demonstrate potential AI workflows for the future, there are numerous ways to improve it or add useful features. These could include:

- Addressing specific design or construction niches

- Various scaling

- Material limitations

- Analysis such as structural and environmental analysis

- Better fabrication understanding

- Live connection with manufacturers for cost estimates and BIM integration

- Various environment simulations

With just the four meshes, dozens of options can be configured rapidly and output both visually, with data, and as 3D models. This demonstrates the potential of the workflow as a rapid prototyping and design tool for early design stages. Ultimately, these technologies will act only as tools in the design process. If they can provide easy and rapid feasibility studies as an alternative to slower, traditional form-finding and rationalisation methods, then they can be a welcome addition to the designer's toolset.

Prototyping the Organic is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed in the Master of Advanced Computation in Architecture and Design 2021/22.

Student: Michal Gryko

Thesis Advisor: David Andres Leon