ROBO-swarm

Introduction

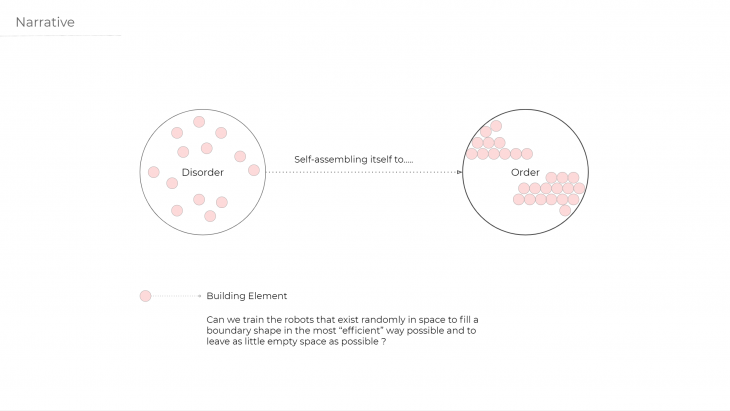

Self-assembly is a process in which a disordered system of pre-existing components forms an organized structure through specific, local interactions among the components themselves, without external direction. Inspired by this definition, we set out to train small robots to self-organize within a bounded space.

Our concept was inspired by the movie “Big Hero 6”.

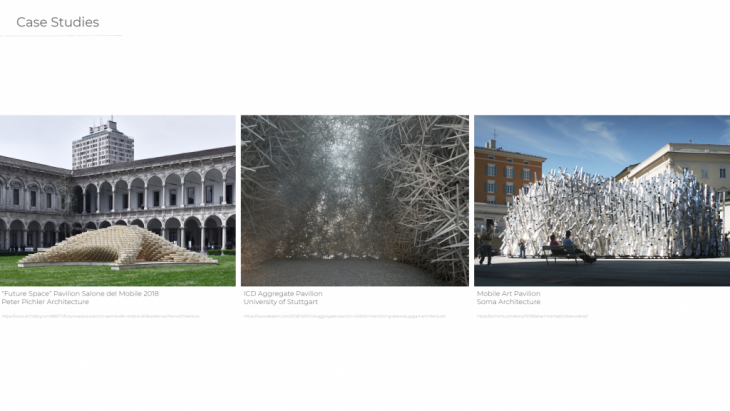

In architecture, the idea of designing by aggregating components gives a variety of intriguing results. Could the components be agents that self-assemble to create such structures?

Narrative

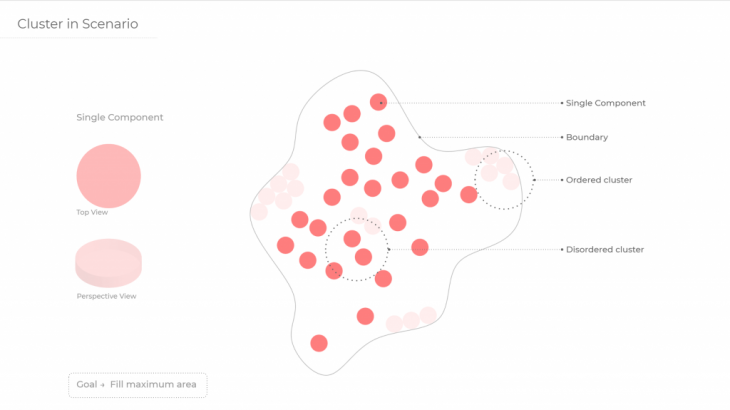

We consider a number of micro-bots placed at random positions inside a closed boundary. The goal is for them to find the best way to assemble and organize so that they fill the maximum area.

Method

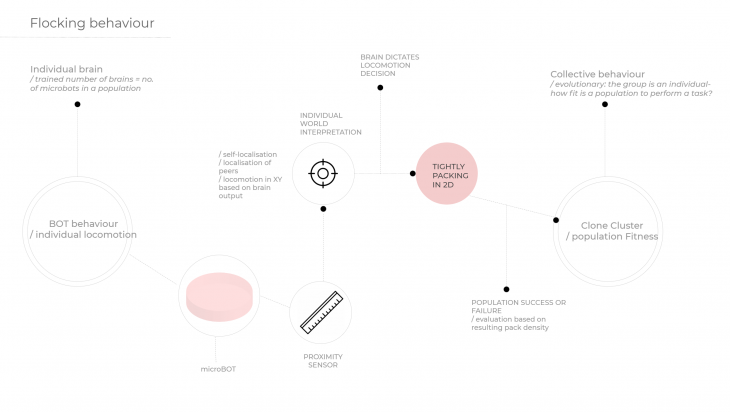

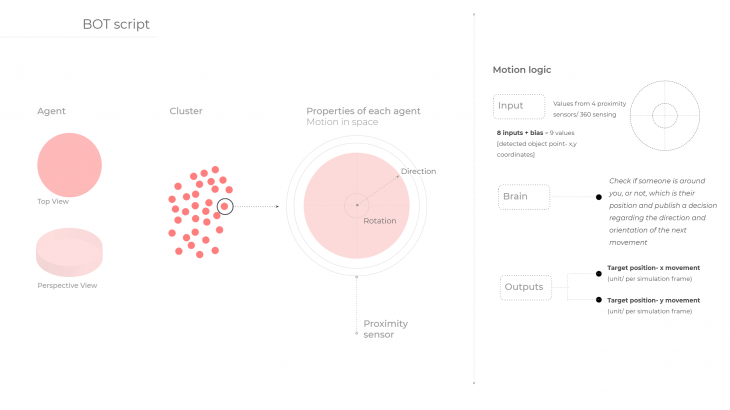

The component chosen for this exercise is a flat disk with no locomotion hardware of its own; it is instead animated in CoppeliaSim via move commands. This keeps the machine learning training process lightweight and keeps the experiment focused on generating group behaviour rather than on motor functionality. Each robot is therefore equipped only with a proximity sensor, which limits the bot's view of the world to:

> locating itself relative to other members of the flock

> locating other members relative to its current position

Based on this limited world view, each bot's brain issues a locomotion decision in the XY plane.

Each micro-bot has its own brain, so for the simulation the number of brains trained equals the number of bots in the swarm. The micro-bots are clones of each other, tasked with packing tightly within a 2-dimensional boundary. The evaluation, however, is performed at the swarm level: the group is treated as a single individual and assessed on how well it performs the task at hand.
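The idea of one brain per bot with a single shared score can be sketched as follows. This is only an illustration under stated assumptions: the genome size, the mutate-and-keep scheme and all function names are hypothetical, the `swarm_fitness` callable stands in for a full simulation run, and a higher score is assumed to be better (flip the comparison otherwise).

```python
import random

NUM_BOTS = 4       # swarm size used in the experiment
GENOME_SIZE = 18   # hypothetical: 9 inputs x 2 outputs, no hidden layer


def new_genome(rng):
    """Random starting weights for one bot's brain."""
    return [rng.uniform(-1.0, 1.0) for _ in range(GENOME_SIZE)]


def mutate(genome, rng, sigma=0.1):
    """Return a copy of the genome with Gaussian noise added to every weight."""
    return [w + rng.gauss(0.0, sigma) for w in genome]


def evolve_step(brains, swarm_fitness, rng):
    """Mutate every bot's brain; keep the new set only if the swarm as a whole scores higher."""
    candidate = [mutate(g, rng) for g in brains]
    if swarm_fitness(candidate) > swarm_fitness(brains):
        return candidate
    return brains


rng = random.Random(0)
brains = [new_genome(rng) for _ in range(NUM_BOTS)]  # one brain per bot
```

The point of the sketch is that no bot is scored on its own: all four genomes are accepted or rejected together, based on one swarm-level number.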

Training the swarm:

The experiment is run with 4 micro-bots, each equipped with a proximity sensor. The input to the neural network therefore consists of 9 values:

> the x values of the proximity sensor readings (4)

> the z values of the proximity sensor readings (4)

> bias value (1)

These inputs are used to train the brain function: the brain is supposed to check whether anyone is nearby and where they are, and in return to publish a decision regarding:

> the direction

> the orientation

in which the individual micro-bot should travel.

The outputs of the neural network are therefore:

> target position: x movement

> target position: y movement
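As a rough sketch, the brain can be pictured as a tiny feed-forward network. The version below assumes the nine inputs are four x readings, four z readings and a bias, and that the two outputs are the x and y movement. The single-layer layout, the tanh activation and the function names are assumptions made for illustration, not the network used in the project.

```python
import math

NUM_READINGS = 4  # proximity readings per axis, as listed in the text


def build_inputs(x_readings, z_readings):
    """Pack four x readings, four z readings and a constant bias into 9 inputs."""
    if len(x_readings) != NUM_READINGS or len(z_readings) != NUM_READINGS:
        raise ValueError("expected 4 x readings and 4 z readings")
    return list(x_readings) + list(z_readings) + [1.0]


def brain(inputs, weights):
    """Single-layer network: one row of 9 weights per output, tanh keeps outputs in [-1, 1]."""
    return tuple(
        math.tanh(sum(w * v for w, v in zip(row, inputs)))
        for row in weights
    )


inputs = build_inputs([0.2, 0.0, 0.0, 0.5], [0.1, 0.0, 0.0, 0.3])
weights = [[0.1] * 9, [-0.1] * 9]  # hypothetical weights: row 0 -> x move, row 1 -> y move
dx, dy = brain(inputs, weights)
```

Bounding the outputs with tanh is one simple way to keep each move command small. The genome of a bot would then be the 18 weights in `weights`.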

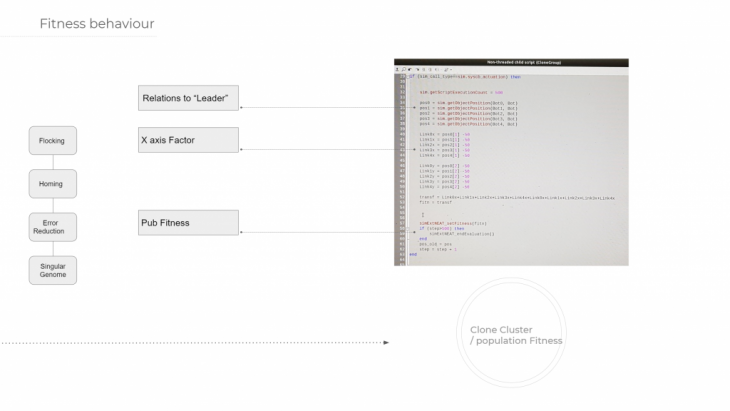

This part of the parent script handles the evolutionary logic of the swarm. The first block specifies that once the swarm has interacted for a full generation of 500 (seconds), the result is used as the initial genome. For this genome, the script uses the sim.getObjectPosition command to record the position of every swarm member relative to a “leader” swarm member or “bot”.

The fitness is defined from the recorded X-axis and Y-axis positions, sampled every fifty seconds of the simulation. The samples are added together to give a single fitness value for the genome, which is tracked over multiple generations. Finally, the fitness value is published so that the evolution can proceed.
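A minimal sketch of this summation is given below. The text does not say how the X and Y positions are combined, so the sketch assumes the planar distance to the leader. The `get_relative_xy` callback is hypothetical; in the simulator it would wrap a sim.getObjectPosition call made relative to the leader.

```python
import math

SAMPLE_INTERVAL = 50     # seconds between samples (from the text)
GENERATION_LENGTH = 500  # seconds per generation (from the text)


def swarm_fitness(get_relative_xy, bot_ids):
    """Sum each bot's planar distance to the leader over all sample times."""
    total = 0.0
    for t in range(SAMPLE_INTERVAL, GENERATION_LENGTH + 1, SAMPLE_INTERVAL):
        for bot in bot_ids:
            x, y = get_relative_xy(bot, t)  # position relative to the leader at time t
            total += math.hypot(x, y)       # assumed way of combining X and Y
    return total


# Toy usage: three followers that always sit at (3, 4) relative to the leader.
fitness = swarm_fitness(lambda bot, t: (3.0, 4.0), ["bot1", "bot2", "bot3"])
```

With this particular measure a lower total means a tighter swarm. Whether the project minimises or maximises its fitness is not stated in the text.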

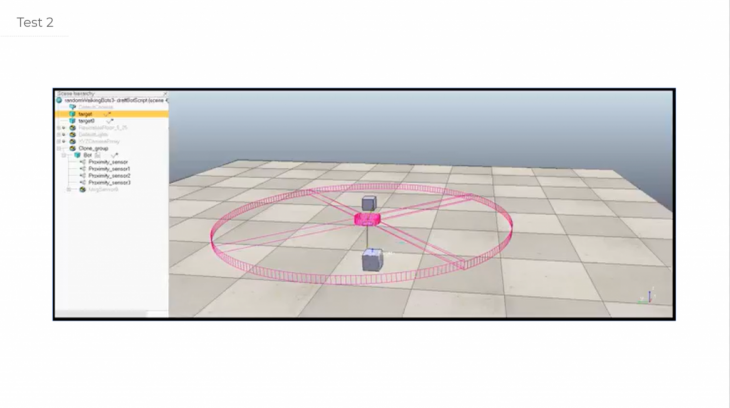

Tests

The initial test ran a single leader bot with no other actors in the swarm to respond to or train against. It was also given a circular search path to increase the amount of positional data available for training and to stop the neuro-evolution from defaulting to a swarm that simply remains stationary.
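A circular search path of this kind can be generated as a list of waypoints, as in the sketch below. The centre, radius and number of steps are hypothetical parameters. How the waypoints are turned into move commands in the simulator is not shown.

```python
import math


def circular_path(center, radius, steps):
    """Return `steps` evenly spaced (x, y) waypoints on a circle around `center`."""
    cx, cy = center
    waypoints = []
    for k in range(steps):
        angle = 2.0 * math.pi * k / steps
        waypoints.append((cx + radius * math.cos(angle), cy + radius * math.sin(angle)))
    return waypoints


path = circular_path(center=(0.0, 0.0), radius=1.0, steps=36)  # illustrative values
```

Because the leader keeps moving along the circle, every sample of its position differs from the last, which gives the training more varied positional data than a stationary bot would.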

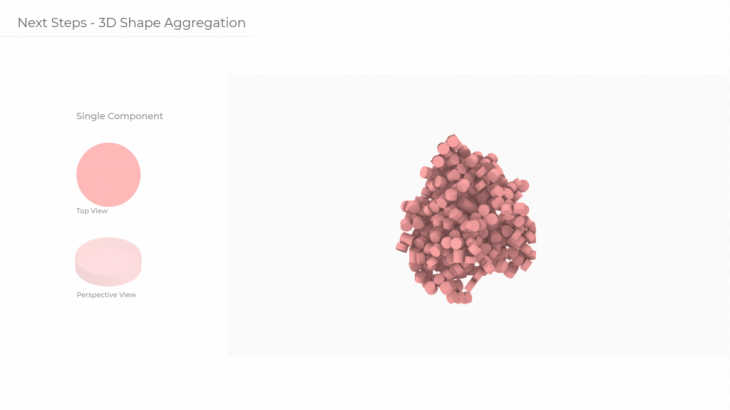

Next Steps

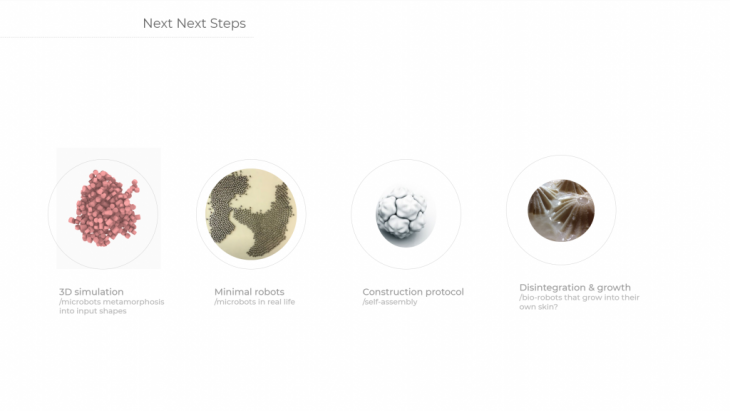

In the future, the goal is to train a swarm with a larger population of individuals and test it on the task of aggregating in 3-dimensional space.

ROBO-swarm is a project of IaaC, Institute for Advanced Architecture of Catalonia, developed at the Master in Robotics and Advanced Construction in 2019-2020.

Students: Andreea Bunica, Jun Woo Lee, Gjeorgjia Lilo, Abdullah Sheikh

Faculty: Raimund Krenmueller, Soroush Garivani