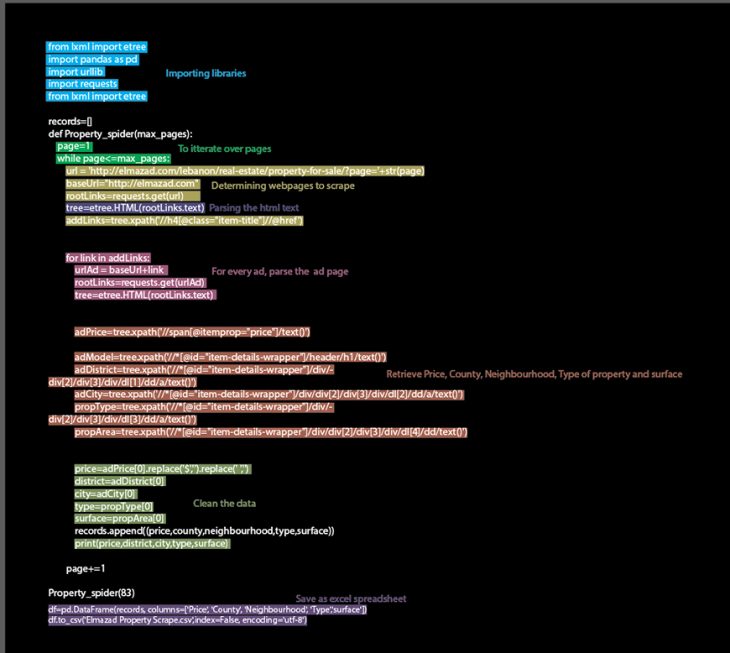

Using Python, one of the most popular programming languages available, we were tasked with creating a ‘scraper’: a program that automates the extraction of data from websites with large databases. Instead of spending hours copying and pasting that data by hand, one can run the code and wait for it to finish, as it mimics the way a person would browse the site.
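The article does not include the scraper's code, so the following is only a minimal sketch of the idea using Python's standard library (`urllib.request` and `html.parser`), not the project's actual implementation. It downloads a results page and collects the links to individual listings. The CSS class `listing-link`, the function names and the one-second delay are hypothetical placeholders; a real site's markup has to be inspected first, and its terms of use respected.

```python
import time
import urllib.request
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag carrying a given CSS class.

    The default class name is a hypothetical placeholder, not Elmazad's markup.
    """

    def __init__(self, link_class="listing-link"):
        super().__init__()
        self.link_class = link_class
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if self.link_class in classes and attrs.get("href"):
            self.links.append(attrs["href"])


def fetch(url):
    """Download a page and return its HTML as text."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def collect_links(html, link_class="listing-link"):
    """Return the listing links found in one results page."""
    parser = LinkCollector(link_class)
    parser.feed(html)
    return parser.links


def crawl(results_urls, delay=1.0):
    """Visit each results page in turn, pausing between requests like a human would."""
    links = []
    for url in results_urls:
        links.extend(collect_links(fetch(url)))
        time.sleep(delay)  # hypothetical politeness delay
    return links
```

Separating `fetch` from `collect_links` keeps the parsing testable on saved HTML without touching the network.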

Elmazad is a Lebanese e-commerce website, a little like Craigslist, where one can find anything from cars to animals for sale. Its ‘property’ category is particularly interesting in a country whose real estate market has fluctuated over the years, mostly due to internal and external political turmoil.

Once run, the code accesses the desired webpage, extracts the information of interest (in our case the property's price, surface area, type and location) and stores it in an Excel spreadsheet for later study.
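As an illustration of the extraction and storage step, here is a hedged sketch, again limited to the standard library, that pulls the four fields out of one listing page and writes the records to a CSV file, which Excel opens directly. The CSS class names in `FIELD_CLASSES` and the helper names are hypothetical assumptions; the real page structure and the original code are not shown in the article.

```python
import csv
from html.parser import HTMLParser

# Hypothetical mapping: output column -> CSS class holding that value on a listing page.
FIELD_CLASSES = {
    "price": "ad-price",
    "surface": "ad-surface",
    "type": "ad-type",
    "location": "ad-location",
}


class ListingParser(HTMLParser):
    """Collects the text inside elements whose class matches FIELD_CLASSES."""

    def __init__(self):
        super().__init__()
        self.parts = {field: [] for field in FIELD_CLASSES}
        self._current = None  # field whose element we are currently inside

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for field, css_class in FIELD_CLASSES.items():
            if css_class in classes:
                self._current = field
                return

    def handle_endtag(self, tag):
        # Simplification: any closing tag ends the current field, so each
        # value is assumed to be plain text without nested markup.
        self._current = None

    def handle_data(self, data):
        text = data.strip()
        if self._current is not None and text:
            self.parts[self._current].append(text)


def parse_listing(html):
    """Return one record (a dict of field -> text) for a single listing page."""
    parser = ListingParser()
    parser.feed(html)
    parser.close()
    return {field: " ".join(parts) for field, parts in parser.parts.items()}


def save_records(records, path):
    """Write records to a CSV file with one column per field."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(FIELD_CLASSES))
        writer.writeheader()
        writer.writerows(records)
```

CSV is used here to avoid third-party dependencies; a native `.xlsx` file could instead be written with `pandas.DataFrame(records).to_excel("listings.xlsx", index=False)`, which requires an Excel engine such as openpyxl to be installed.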

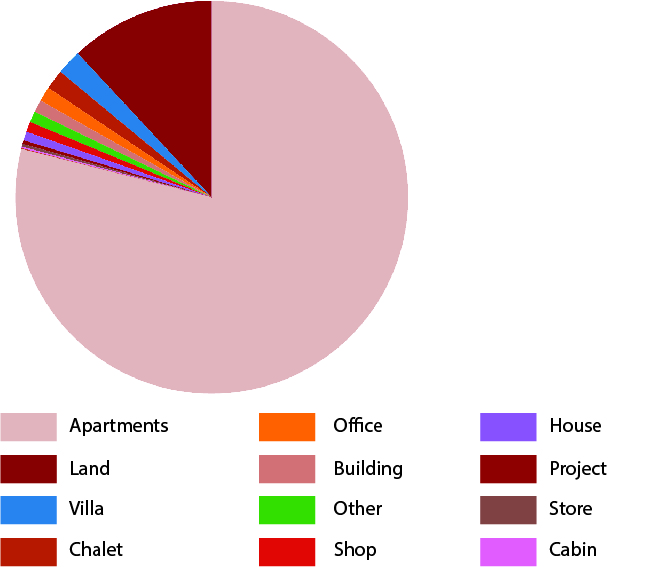

After running the code for 30 minutes, we were able to extract information from 1,600 posts.

After manipulating the data with pandas, the Python data-analysis library, we can first see that apartments are the most common type of property on the market.
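Counting property types in pandas takes little more than `value_counts`. The sketch below uses a few made-up rows in place of the scraped spreadsheet (the real file would be loaded with `pd.read_excel` or `pd.read_csv`), and assumes the type labels need light cleaning because scraped text is often inconsistent in case and whitespace.

```python
import pandas as pd

# Hypothetical sample rows standing in for the scraped spreadsheet.
listings = pd.DataFrame(
    {"type": ["Apartment", "apartment ", "Villa", "Land", "Apartment", "villa"]}
)

# Normalise the labels so that "apartment " and "Apartment" are counted together.
listings["type"] = listings["type"].str.strip().str.title()

type_counts = listings["type"].value_counts()  # absolute number of posts per type
type_share = listings["type"].value_counts(normalize=True)  # fraction of all posts
most_common = type_counts.idxmax()  # label with the highest count
```

On this toy sample, "Apartment" accounts for three of the six rows.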

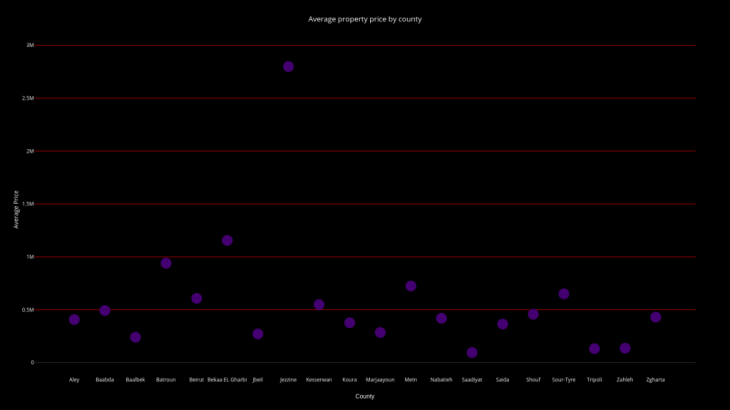

Plotting the data on a scatter plot first reveals outlying entries, which can then be corrected. It also shows the average property price in each county.
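The article does not say how outliers were identified or corrected, so the following swaps in one common rule of thumb, the interquartile-range (IQR) test, purely as an assumption: prices outside `[Q1 - 1.5·IQR, Q3 + 1.5·IQR]` are flagged and dropped before averaging by county. The sample data is invented, with one price carrying a typo of extra zeros.

```python
import pandas as pd

# Hypothetical sample; the 30,000,000 entry imitates a mistyped price.
listings = pd.DataFrame(
    {
        "county": ["Beirut", "Beirut", "Beirut", "Metn", "Metn", "Metn", "Tyre", "Tyre"],
        "price": [400_000, 450_000, 500_000, 250_000, 300_000, 30_000_000, 100_000, 120_000],
    }
)


def iqr_bounds(series, k=1.5):
    """Return the (low, high) fences of the IQR outlier rule."""
    q1, q3 = series.quantile([0.25, 0.75])
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


low, high = iqr_bounds(listings["price"])
is_outlier = ~listings["price"].between(low, high)
clean = listings[~is_outlier]

# Average price per county, highest first.
avg_price_by_county = clean.groupby("county")["price"].mean().sort_values(ascending=False)
```

Dropping flagged rows is the simplest option; inspecting them and fixing obvious typos by hand, as the article describes, keeps more of the data.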

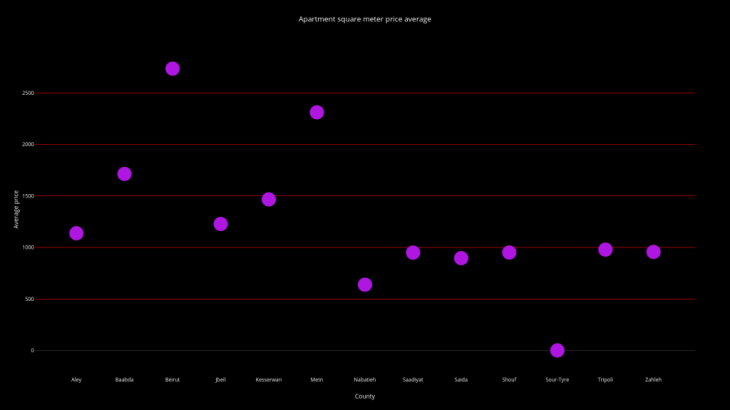

By applying the same logic, we can also see which county’s land is most valuable.
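One way to express "most valuable land" is price per square metre. The sketch below assumes price and surface were scraped as text such as `"150,000 $"` and `"120 m2"` (hypothetical formats), converts them to numbers, and ranks counties by median price per m². The median is a choice made here because it is less sensitive to any remaining outliers than the mean; the sample rows are invented.

```python
import pandas as pd

# Hypothetical scraped rows with prices and surfaces still stored as text.
listings = pd.DataFrame(
    {
        "county": ["Beirut", "Beirut", "Metn", "Metn", "Zahleh", "Zahleh"],
        "price": ["600,000 $", "450,000 $", "300,000 $", "250,000 $", "90,000 $", "140,000 $"],
        "surface": ["150 m2", "100 m2", "150 m2", "100 m2", "120 m2", "200 m2"],
    }
)

# Keep only digits (and a decimal point) from the price: "600,000 $" -> 600000.0
listings["price_usd"] = listings["price"].str.replace(r"[^\d.]", "", regex=True).astype(float)
# Take the first number in the surface field: "150 m2" -> 150.0 (the "2" of "m2" is ignored)
listings["surface_m2"] = listings["surface"].str.extract(r"(\d+(?:\.\d+)?)", expand=False).astype(float)

listings["price_per_m2"] = listings["price_usd"] / listings["surface_m2"]
price_per_m2_by_county = (
    listings.groupby("county")["price_per_m2"].median().sort_values(ascending=False)
)
```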

Beirut ranks first, which is expected since it is the capital of the country. The Metn county comes second, as it is a prime residential area close to Beirut yet farther from turmoil than places like Tyre or Zahleh, which are close to the Israeli and Syrian borders respectively.

Scraping properties is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at MaCT (Master in City & Technology), 2017-18 by:

Students: Camille Feghali

Faculty: Andre Resende