Sparse Selection // S.2 SOFTWARE

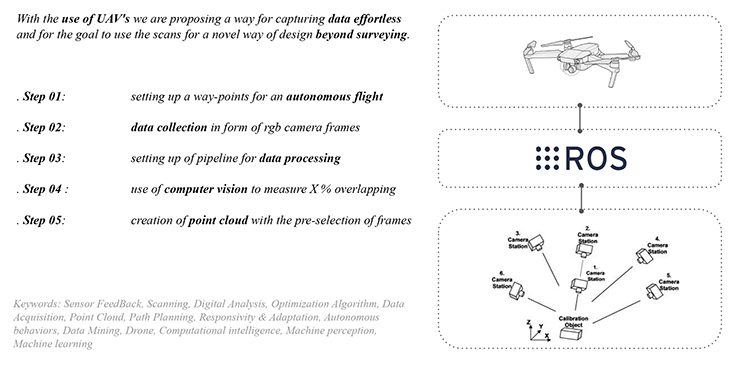

3D scanning is already common practice on today's construction sites. The project SPARSE SELECTION aims to create a process pipeline that optimizes the creation of point clouds through the more accessible technique of photogrammetry. The intention is to capture data in areas with limited access, which is difficult even for ground-based 3D scanners. Using UAVs, we propose a way to capture data effortlessly, with the goal of using the scans beyond surveying.

Overview

Since the industrial revolution in the 19th century, prefabrication in the construction sector has massively increased construction speed and reduced construction costs. Today most projects would not be affordable without prefabricated components, standardized formats, lost (permanent) formwork, pre-assembled facade panels, and technical components. Besides the comfort and simplicity that standardized components bring, the main driving force for reducing custom parts is to reduce the amount of traditional labor on site.

Digitization and new technologies give us the opportunity to bring back architectural qualities that are considered unaffordable when done by human labor alone. To rediscover form, pattern, and complex topologies in architecture and heritage, we propose using photogrammetry beyond surveying to create a library: a first step toward developing thinkable and unthinkable combinations and perhaps a novel architectural language. The digitization of the construction sector is not only about reducing costs; it is a chance to rediscover “lost craftsmanship”.

We propose photogrammetry, combined with a pipelined process, as an affordable way to digitize existing structures, focusing on the existing urban realm and especially on mapping vertical facade features.

Process Pipeline

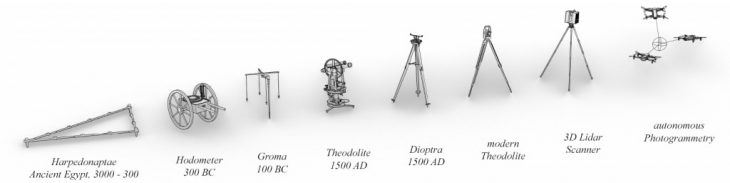

Brief history of Surveying

From the ancient Egyptian “rope-stretchers”, whose methods are associated with the Pythagorean theorem, to the first hodometer for measuring distances, surveying has always been an integral part of creating architecture. The latest 3D scanners reach tolerances of 0-1 mm. Although 3D scanning is increasingly included in today's construction processes, it is still a time-consuming task that requires expensive hardware. Photogrammetry, by contrast, can reach a precision of up to 10-15 mm, which is still excellent for a number of use cases. Combined with recent developments and falling drone prices, this makes it possible to use UAVs for autonomous flights and effortless data capture.

Evolution of Surveying and Scanning

Process Pipeline

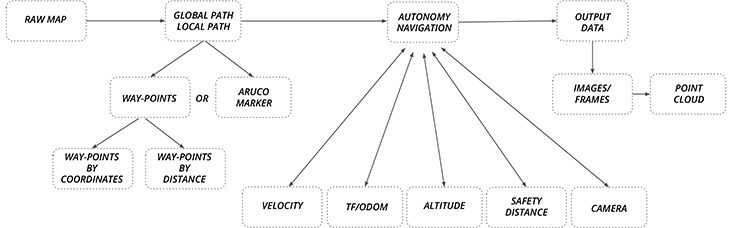

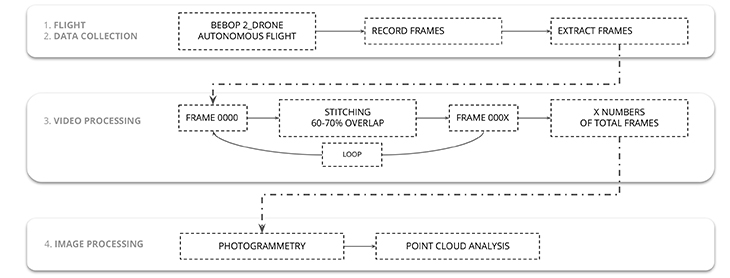

We propose to streamline the process by capturing only the data necessary for an optimized point cloud. The aim is to create flight paths for autonomous drone flights focused on mapping vertical elements, mainly facades. The flight path is based on a raw map or an estimate of the area or surface to be captured. The autonomous flight is controlled by the Robot Operating System (ROS) together with the necessary drivers for the drone. The idea is to check the overlap between images from the drone's onboard camera in real time and to save only the frames with the desired overlap for point-cloud creation. Fewer, better-chosen images mean faster point-cloud calculation, which takes place in a post-process.
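To show how a target overlap translates into a flight path, here is a minimal sketch. It assumes an ideal pinhole camera aimed perpendicular at a flat facade that runs parallel to the flight line. The function names and example values (10 m stand-off, 90 degree horizontal field of view) are hypothetical. They are not parameters of the Bebop or of the project's scripts.

```python
import math


def facade_footprint(distance_m: float, hfov_deg: float) -> float:
    """Width of facade (in metres) covered by one image.

    Assumes an ideal pinhole camera pointing straight at a flat facade
    that is parallel to the flight path.
    """
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def capture_spacing(distance_m: float, hfov_deg: float, overlap: float) -> float:
    """Distance to fly between two captures so that consecutive images
    share the given overlap fraction (e.g. 0.7 for 70%)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return facade_footprint(distance_m, hfov_deg) * (1.0 - overlap)


if __name__ == "__main__":
    # hypothetical values: 10 m stand-off, 90 degree horizontal field of view
    print(round(facade_footprint(10.0, 90.0), 3))      # 20.0
    print(round(capture_spacing(10.0, 90.0, 0.7), 3))  # 6.0
```

Under these assumptions, flying closer to the facade or raising the overlap both shrink the spacing, so more frames are needed for the same facade.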

Process Overview

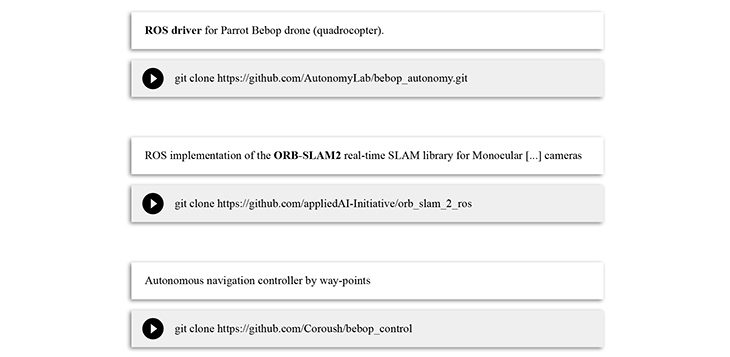

Drone Controller

The autonomous flight is achieved with a set of open-source software libraries and tools within the ROS framework. The following repositories were investigated during this project:

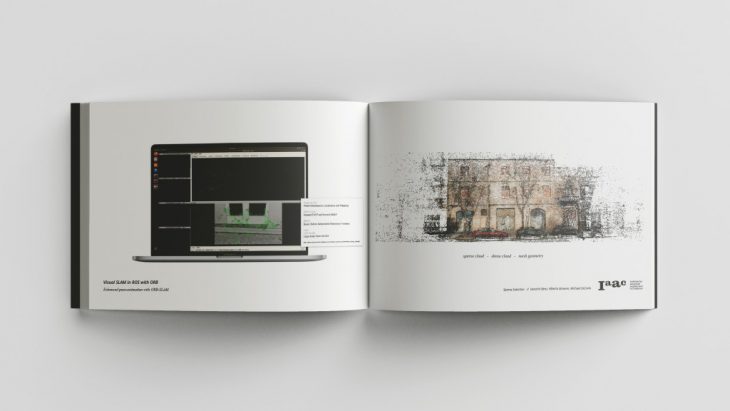

For enhanced pose estimation and more precise control of the drone, methods for visual simultaneous localization and mapping (Visual SLAM) were investigated. In the end, ORB-SLAM was used only passively in this project, because the feature detection used for image selection in the post-process relies on a similar approach.
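ORB features are described by binary descriptors, and two descriptors are compared by their Hamming distance. In OpenCV this is typically done with `cv2.BFMatcher` and `cv2.NORM_HAMMING`. The following is a pure-Python sketch of that matching step. Descriptors are modelled as plain integers, and the ratio threshold of 0.75 is an assumed, commonly used value rather than a setting taken from the project.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(
    query: Sequence[int], train: Sequence[int], ratio: float = 0.75
) -> List[Tuple[int, int, int]]:
    """Brute-force Hamming matching with a ratio test.

    Returns (query_index, train_index, distance) for every query descriptor
    whose best match is clearly better than its second-best match.
    """
    matches = []
    for qi, qd in enumerate(query):
        ranked = sorted((hamming(qd, td), ti) for ti, td in enumerate(train))
        if not ranked:
            continue
        best_dist, best_idx = ranked[0]
        # Reject ambiguous matches (e.g. repetitive facade patterns).
        if len(ranked) == 1 or best_dist < ratio * ranked[1][0]:
            matches.append((qi, best_idx, best_dist))
    return matches
```

The ratio test matters for facades because repeated windows and bricks produce many near-identical descriptors.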

Enhanced pose-estimation with ORB-SLAM

The path in this example is defined by global waypoints given as X, Y, Z, and yaw. The drone's start position is the origin, and yaw is expressed in radians relative to true north.
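As a minimal sketch of this convention, targets expressed in model coordinates can be shifted so that the take-off point becomes the origin. Yaw is wrapped into a single range. The `Waypoint` class and helper names are hypothetical. The sketch also assumes that the model axes are already aligned with the frame in which yaw is measured, so only a translation is needed.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Waypoint:
    x: float    # metres from the take-off point
    y: float
    z: float
    yaw: float  # radians, wrapped to [-pi, pi]


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def to_start_frame(
    points: Iterable[Tuple[float, float, float, float]],
    start: Tuple[float, float, float],
) -> List[Waypoint]:
    """Shift (x, y, z, yaw) targets from model coordinates into a frame
    whose origin is the drone's start position.

    Assumes the model axes are already aligned with the yaw reference,
    so only a translation is applied.
    """
    sx, sy, sz = start
    return [Waypoint(x - sx, y - sy, z - sz, wrap_angle(yaw)) for x, y, z, yaw in points]
```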

Proof of Concept – Autonomous Flight

As a final proof of concept, the drone successfully navigated along a simple pre-calculated flight path inside the drone cage of the IAAC Atelier facilities. To calculate the waypoints needed for autonomous flights in front of any chosen facade, a clean parametric script was written in Rhino's Grasshopper.

Way-Points created from raw map

Using open-source 3D data (Cadmapper.com), a first raw geometry is analyzed and a flight path is created. The Grasshopper script that generates the waypoint file for the Bebop controller is available in our GitHub repository.
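The project's generator is a Grasshopper script. The following is only a Python sketch of a comparable pattern, not the authors' script. It lays out a lawnmower grid of waypoints in front of a facade, assuming the facade base is a straight line in plan. The parameter names and the stand-off and spacing values are hypothetical. Yaw is computed counterclockwise from the +x axis. If the controller measures yaw relative to true north and +x does not point north, an offset would be needed.

```python
import math
from typing import List, Tuple

Waypoint = Tuple[float, float, float, float]  # (x, y, z, yaw)


def _stations(start: float, stop: float, spacing: float) -> List[float]:
    """Evenly spaced values from start to stop, no further apart than spacing."""
    span = stop - start
    if span == 0:
        return [start]
    n = max(1, math.ceil(abs(span) / spacing))
    return [start + span * i / n for i in range(n + 1)]


def facade_waypoints(
    p0: Tuple[float, float],
    p1: Tuple[float, float],
    z_min: float,
    z_max: float,
    standoff: float,
    h_spacing: float,
    v_spacing: float,
) -> List[Waypoint]:
    """Lawnmower pattern of waypoints in front of a facade.

    p0 -> p1 is the facade's base line in plan. The drone flies on the
    left-hand side of that line at `standoff` metres, row by row from
    z_min to z_max, alternating direction, with the camera facing the facade.
    """
    dx, dy = p1[0] - p0[0], p1[1] - p0[1]
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("p0 and p1 must differ")
    ux, uy = dx / length, dy / length  # unit vector along the facade
    nx, ny = -uy, ux                   # unit normal pointing to the drone side
    yaw = math.atan2(-ny, -nx)         # look back toward the facade
    along = _stations(0.0, length, h_spacing)
    waypoints: List[Waypoint] = []
    for row, z in enumerate(_stations(z_min, z_max, v_spacing)):
        for s in (along if row % 2 == 0 else reversed(along)):
            x = p0[0] + ux * s + nx * standoff
            y = p0[1] + uy * s + ny * standoff
            waypoints.append((x, y, z, yaw))
    return waypoints
```

Alternating the row direction avoids a long return leg after each pass. The horizontal spacing could be derived from the desired image overlap.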

The output of the Grasshopper script is a .txt file whose contents can directly replace the Way-Points.py file of the Bebop controller.
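Because the exported text stands in for a Python file, one way to produce it is to write the waypoints as a Python list literal. This is a hedged sketch. The variable name `WAYPOINTS`, the tuple layout, and the three-decimal rounding are assumptions, not the actual format expected by the Bebop controller's Way-Points.py. A parser is included so the file can be sanity-checked without executing it.

```python
import ast
from typing import List, Sequence, Tuple


def format_waypoint_module(
    waypoints: Sequence[Tuple[float, float, float, float]],
    name: str = "WAYPOINTS",  # hypothetical variable name
    digits: int = 3,
) -> str:
    """Render (x, y, z, yaw) tuples as a Python list assignment."""
    lines = [f"{name} = ["]
    for x, y, z, yaw in waypoints:
        lines.append(f"    ({x:.{digits}f}, {y:.{digits}f}, {z:.{digits}f}, {yaw:.{digits}f}),")
    lines.append("]")
    return "\n".join(lines) + "\n"


def parse_waypoint_module(text: str) -> List[Tuple[float, ...]]:
    """Read the list back without executing the file (sanity check)."""
    assign = ast.parse(text).body[0]
    if not isinstance(assign, ast.Assign):
        raise ValueError("expected a single list assignment")
    return [tuple(wp) for wp in ast.literal_eval(assign.value)]
```

The text can then be written with, for example, `pathlib.Path("waypoints.txt").write_text(...)`, where the file name is hypothetical.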

Point Cloud Capturing Simulation

Image Capturing

The idea is to check the overlap of each frame captured by the drone's onboard camera against the frames that follow it, and to keep only frames with the target overlap. This way, the final point cloud, generated in a post-process, uses only the necessary amount of data.
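The selection rule can be sketched as a greedy pass over the frame sequence. The current anchor frame is kept until the overlap with an incoming frame drops below the target. At that point, the last frame that still met the target is kept. The overlap measure is passed in as a function. In the project it comes from OpenCV feature matching, while the usage below substitutes a simple 1-D footprint model. The greedy strategy and the default target of 0.65 are assumptions for illustration.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def select_frames(
    frames: Sequence[Frame],
    overlap: Callable[[Frame, Frame], float],
    target: float = 0.65,
) -> List[int]:
    """Indices of frames to keep so that each kept frame overlaps the
    previously kept one by at least `target` whenever the stream allows it.

    Greedy: stay on the current anchor frame until the overlap with the
    incoming frame drops below the target, then keep the last frame that
    still satisfied it.
    """
    if not frames:
        return []
    kept = [0]
    for i in range(1, len(frames)):
        if overlap(frames[kept[-1]], frames[i]) >= target:
            continue
        if i - 1 != kept[-1]:
            kept.append(i - 1)  # last frame that still met the target
        if overlap(frames[kept[-1]], frames[i]) < target:
            kept.append(i)      # gap in the stream: keep i to preserve coverage
    if kept[-1] != len(frames) - 1:
        kept.append(len(frames) - 1)  # cover the end of the facade
    return kept
```

For example, consider frames spaced 10 cm apart along the facade, each covering 100 cm, with overlap `(100 - |b - a|) / 100`. With a target of 0.65, the function keeps every third frame and then the final frame.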

Possible Connections of Feedback-Loop

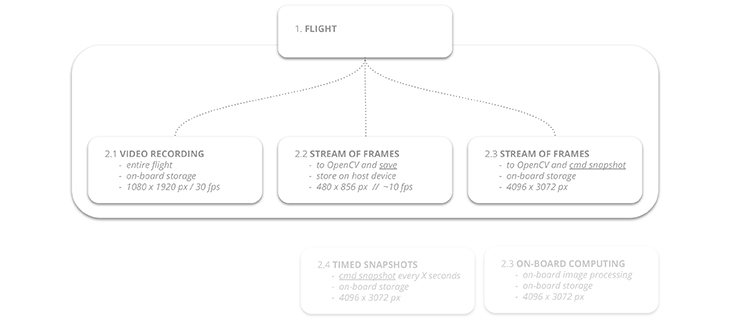

Multiple options for “Image Selection” were investigated: 1. recording the entire video during the autonomous flight and extracting the frames in the post-process; 2. a live connection between OpenCV and the images streamed in real time over the drone's Wi-Fi; and 3. a kind of “Feedback-Loop”, in which OpenCV sends a “/snapshot” command to the drone to take a high-resolution image and save it to the drone's on-board storage.

OpenCV Image Selection

To test the prepared OpenCV script, we decided to keep image selection as a post-process for now. The images of an entire flight were extracted as single video frames from a rosbag.

Final Process Pipeline with Post-Processing of Images

Post-Process: Image Selection

Data storage requirements keep growing. To keep the data for point-cloud creation as small as possible, our script isolates only the necessary, high-quality images instead of storing an unregulated amount of data.

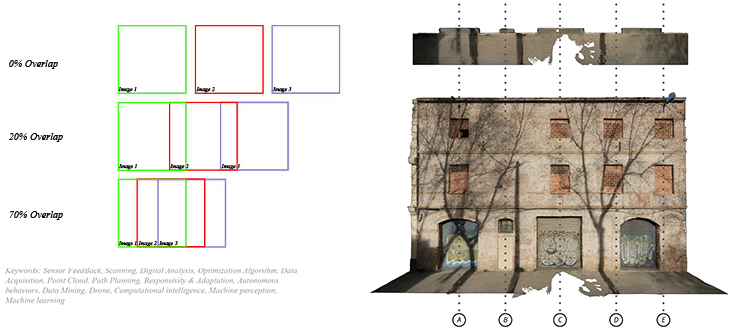

For point-cloud creation, an image overlap of at least 60-70% is recommended. To store only the images that match this overlap, a Python script was created. It measures the overlap through feature detection and stitching of the frames in an array. The process is similar to stitching a panoramic view from multiple images and is based on OpenCV, an open-source computer vision library with Python bindings.
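In a stitching pipeline, matched features between two frames are typically used to estimate a homography, for example with OpenCV's `cv2.findHomography` and RANSAC. The overlap can then be read off geometrically. Warp the outline of frame B into frame A, clip it to A's rectangle, and divide the clipped area by A's area. This pure-Python sketch implements that last step with Sutherland-Hodgman clipping and the shoelace formula. It assumes a homography H that maps B's pixels into A, which is a reasonable model for a roughly planar facade. It is not the project's actual script.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = Sequence[Sequence[float]]


def apply_homography(h: Matrix, p: Point) -> Point:
    """Map a pixel through a 3x3 homography (with perspective divide)."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (
        (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
        (h[1][0] * x + h[1][1] * y + h[1][2]) / w,
    )


def polygon_area(poly: List[Point]) -> float:
    """Shoelace formula; works for either vertex orientation."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def _clip(poly, inside, cross):
    """One Sutherland-Hodgman pass against a single boundary."""
    out = []
    for i, cur in enumerate(poly):
        prev = poly[i - 1]
        if inside(cur):
            if not inside(prev):
                out.append(cross(prev, cur))
            out.append(cur)
        elif inside(prev):
            out.append(cross(prev, cur))
    return out


def clip_to_frame(poly: List[Point], width: float, height: float) -> List[Point]:
    """Clip a polygon to the image rectangle [0, width] x [0, height]."""
    def at_x(x0):
        return lambda p, q: (x0, p[1] + (x0 - p[0]) / (q[0] - p[0]) * (q[1] - p[1]))

    def at_y(y0):
        return lambda p, q: (p[0] + (y0 - p[1]) / (q[1] - p[1]) * (q[0] - p[0]), y0)

    boundaries = [
        (lambda p: p[0] >= 0.0, at_x(0.0)),
        (lambda p: p[0] <= width, at_x(width)),
        (lambda p: p[1] >= 0.0, at_y(0.0)),
        (lambda p: p[1] <= height, at_y(height)),
    ]
    for inside, cross in boundaries:
        if not poly:
            break
        poly = _clip(poly, inside, cross)
    return poly


def overlap_ratio(h: Matrix, width: float, height: float) -> float:
    """Fraction of frame A covered by frame B, where h maps B's pixels into A."""
    corners = [(0.0, 0.0), (width, 0.0), (width, height), (0.0, height)]
    warped = [apply_homography(h, c) for c in corners]
    clipped = clip_to_frame(warped, width, height)
    if len(clipped) < 3:
        return 0.0
    return polygon_area(clipped) / (width * height)
```

For instance, a pure horizontal shift of 200 px between two 1000 x 800 px frames yields an overlap of 0.8.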

Point-Cloud Creation

Finally, the extracted images are used to generate the point cloud. Extracting only the necessary images makes point-cloud creation more efficient, because the software does not have to process a vast number of images. Such a volume might be impossible to compute, or would at least increase the calculation time.

Sparse Cloud – Dense Cloud – Mesh Model

An overlap of 60-70% is a commonly recommended target, but this number can easily be adjusted to individual needs in the Python script. The benefits of capturing point-cloud data with a drone are clear, as drones can reach areas that are difficult to access. Since most of the tools used are open source, the process is accessible and affordable.
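To see what adjusting the target means for data volume, here is a small sketch. It counts the images needed along one straight strip of facade. It assumes each image covers a fixed footprint and that each new image advances by the footprint times (1 - overlap). The 30 m facade and 10 m footprint are hypothetical example values.

```python
import math


def images_needed(facade_length: float, footprint: float, overlap: float) -> int:
    """Minimum number of images along a straight strip of facade.

    Each image covers `footprint` metres; consecutive images overlap by
    the fraction `overlap`, so each new image advances footprint * (1 - overlap).
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    if facade_length <= footprint:
        return 1
    step = footprint * (1.0 - overlap)
    # small tolerance so that exact multiples are not rounded up by float error
    return math.ceil((facade_length - footprint) / step - 1e-9) + 1


for target in (0.6, 0.65, 0.7, 0.8):
    print(f"{target:.0%} overlap -> {images_needed(30.0, 10.0, target)} images per strip")
```

Because the step shrinks with (1 - overlap), the image count grows faster than linearly as the target approaches 100%. This is why keeping the overlap at the recommended minimum saves storage and computation time.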

Overlap and final Point Cloud

Final Presentation

Find the entire final presentation in Google Slides here:

Credits // ROS-Repository Links

- ROS driver for Parrot Bebop drone (quadrocopter):

git clone https://github.com/AutonomyLab/bebop_autonomy.git

- ROS implementation of the ORB-SLAM2 real-time SLAM library for Monocular […] cameras:

git clone https://github.com/appliedAI-Initiative/orb_slam_2_ros

- Autonomous navigation Bebop-Controller by Way-Points:

git clone https://github.com/MRAC-IAAC/bebop_control

- Sparse Selection GitHub Repository:

git clone https://github.com/MRAC-IAAC/bebop_control

Sparse Selection // S.2 is a project of IAAC, Institute for Advanced Architecture of Catalonia

developed at the Master in Robotics and Advanced Construction Software II Seminar in 2020/2021 by:

Students: Hendrik Benz, Alberto Browne, Michael DiCarlo

Faculty: Carlos Rizzo

Faculty Assistant: Soroush Garivani